AI Knowledge Base: A Complete Guide for 2026 and the Best Software

TL;DR

- An AI knowledge base uses NLP, ML, and semantic retrieval to let users ask in natural language and get relevant answers, far beyond static keyword-only search.

- 2026 marks the “retrieval-first” era: enterprises prefer systems built around embeddings, vector search, and reasoning layers (RAG/hybrid), not just keyword indexes.

- AI knowledge bases cut costs, deflect tickets, speed up resolution (MTTR), and preserve institutional knowledge across IT, HR, support and operations.

- Building one requires: auditing content, connecting all doc sources, tuning semantic search, setting access controls, and defining content governance.

- For real enterprise value, integrate the AI KB into daily workflows: Slack/Teams retrieval, Jira/ServiceNow connectors, and workflow triggers. Then measure ROI via ticket deflection, resolution times, and usage metrics.

What You’ll Learn

By the end of this guide you'll be able to:

- Define clearly what an AI knowledge base is and why it matters now

- Understand how modern AI KB systems retrieve, reason, and serve answers

- Identify which content types benefit most from AI-driven knowledge management

- Compare traditional, collaboration-native and retrieval-native KB platforms (and know when to choose each)

- Follow a step-by-step blueprint to build and govern an AI knowledge base for your organisation

- Integrate the AI knowledge base into support, IT, and HR workflows for maximal impact

- Evaluate success with measurable KPIs and build a continuous improvement plan

What Is an AI Knowledge Base?

Modern definition (NLP + ML + semantic retrieval)

An AI knowledge base is a dynamic, centralized digital repository that uses artificial intelligence, specifically machine learning (ML) and natural language processing (NLP), to store, understand, process, and retrieve information contextually. Unlike static knowledge bases that rely on keyword matching, AI KBs interpret user intent and query context. They continuously learn from interactions and feedback, refining result quality over time without manual rework. Core components include NLP for language understanding, ML for learning and improvement, content storage for documents and data, and reasoning/retrieval engines. Together these let users query the system in everyday language and receive accurate, context-aware answers.

Why 2026 is the “retrieval-first” era

Embeddings, vector databases, and reasoning pipelines (e.g. retrieval-augmented generation, hybrid search) have matured. Businesses of all sizes now expect knowledge systems to be real-time, adaptive, and tuned to their domain, not static repositories with poor recall. As vendors shift focus toward semantic retrieval and integration with AI agents, 2026 stands out as the year when "retrieval-first" becomes the default approach to building KBs. Early adopters gain speed, relevance, and better ROI on documentation efforts.

Difference from traditional keyword-based systems

Traditional knowledge bases rely on manually created categories, fixed metadata, and keyword matching. Users have to phrase queries precisely; misspellings or synonyms cause misses. AI KBs add semantic understanding, using embeddings and vector search to match meaning rather than exact words. They reduce friction, surface relevant content even when phrasing differs, and shrink knowledge silos by connecting fragmented document sources.

How AI Knowledge Bases Work

Retrieval (embeddings, vector search, semantic ranking)

At the core of a modern AI KB is a vector database: documents are converted into embeddings, high-dimensional vectors that represent their meaning. When a user asks a question, the system encodes the query into a vector and finds its nearest neighbours in the vector store (typically via approximate nearest neighbour search). This yields a set of candidate documents or excerpts, which the system then ranks by semantic relevance to deliver the best matches. Vector databases and semantic search are what differentiate AI-powered KBs from legacy keyword search.
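
To make the encode-then-search flow concrete, here is a minimal sketch in Python. It rests on loud assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model (so it only matches shared words, not synonyms), the search is brute force rather than approximate nearest neighbour, and the document IDs and texts are invented for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Encode the query, score every document, and return the top_k matches."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical documents, for illustration only.
docs = {
    "vpn-policy": "Contractors request VPN access through the IT portal",
    "password-reset": "Reset your password from the login page",
    "leave-policy": "Annual leave requests go through the HR portal",
}
results = retrieve("how do I reset my password", docs)
print(results[0][0])
```

In a production system the same shape holds, but `embed` would call an embedding model and `retrieve` would query a vector index instead of scanning every document.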

Reasoning Layers (RAG, hybrid pipelines)

Retrieval alone isn’t enough for many organisational needs. A reasoning layer, commonly implemented as Retrieval-Augmented Generation (RAG), overlays a generative model (or reasoning engine) on top of retrieved content. The model synthesizes concise, context-aware answers from the retrieved material. Grounding answers in documented sources reduces the risk of hallucination, although it does not eliminate it. This enables users to get direct answers even from complex, multi-document content.
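
One way to picture the grounding step is the prompt that gets handed to the generative model. The sketch below only assembles that prompt; it does not call any model. The function name `build_grounded_prompt`, the instruction wording, and the sample passages are all assumptions made for illustration, not a prescribed format.

```python
def build_grounded_prompt(question: str, passages: list[dict]) -> str:
    """Assemble a prompt that asks the model to answer only from numbered sources."""
    sources = "\n".join(
        f"[{i}] ({p['source']}) {p['text']}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical retrieved passages, for illustration only.
passages = [
    {"source": "vpn-policy.md", "text": "Contractors need manager approval for VPN access."},
    {"source": "it-faq.md", "text": "VPN requests are filed through the IT portal."},
]
prompt = build_grounded_prompt("How do contractors get VPN access?", passages)
print(prompt)
```

Numbering the sources is what makes in-answer citations possible, and the explicit "say so" instruction gives the model a way out when retrieval returns nothing relevant.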

Hybrid pipelines may combine vector search with traditional full-text indices, or use re-ranking, metadata filtering, or knowledge-graph reasoning for structured content. These hybrid patterns improve precision and scalability in real-world enterprise environments.
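
As an illustration of combining a keyword index with vector search, the sketch below merges two ranked result lists with reciprocal rank fusion (RRF). RRF is one common fusion technique, chosen here as an assumption; the guide does not prescribe a specific method. The constant `k = 60` is a conventional default, and the document IDs are invented.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document earns 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

# Hypothetical results from a full-text index and a vector index.
keyword_hits = ["vpn-policy", "it-faq", "onboarding"]
vector_hits = ["it-faq", "security-guide", "vpn-policy"]
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear in both lists rise to the top, which is the practical appeal of hybrid search: exact-term matches and semantic matches reinforce each other.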

Knowledge Ingestion + Connectors

An effective AI KB must ingest both structured data (databases, spreadsheets) and unstructured data (documents, PDFs, wikis). Connectors to file shares, content management systems, cloud storage, and services like Confluence, SharePoint, and Notion are critical. A unified ingestion pipeline normalizes content, converts it to text (or extracts metadata), chunks long documents, embeds the chunks, and indexes them in a vector store. Without connectors, the AI KB risks staying underutilized or outdated.
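
The chunking step is easy to sketch. The example below splits text into overlapping word-based chunks so that a sentence straddling a boundary still appears whole in at least one chunk. The sizes are arbitrary assumptions; real pipelines often chunk by tokens, headings, or paragraphs instead.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the document
    return chunks

# A synthetic 120-word document, for illustration only.
document = " ".join(f"word{i}" for i in range(120))
chunks = chunk_words(document, chunk_size=50, overlap=10)
print(len(chunks))
```

Each chunk would then be embedded and indexed separately, usually alongside metadata such as the source document and position.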

Permissions + Role-aware answers

Enterprise KBs require access controls. Users should only see information they are authorized for. A robust AI Knowledge Base enforces permissions via RBAC (role-based access control), SSO (single sign-on), and audit logging – ensuring sensitive content (HR, finance, compliance docs) remains secure while still searchable by eligible users. This ensures compliance without sacrificing usability.
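
A role-aware answer depends on filtering retrieved passages before they reach the generative model. The sketch below shows that filter in its simplest form. The `Passage` type, the `allowed_roles` field, and the role names are hypothetical; a real deployment would take roles from the identity provider and permissions from the source systems.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Passage:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]

def filter_by_role(candidates: list[Passage], user_roles: set[str]) -> list[Passage]:
    """Keep only passages whose allowed roles intersect the user's roles."""
    return [p for p in candidates if p.allowed_roles & user_roles]

# Hypothetical retrieved passages with per-document access lists.
candidates = [
    Passage("salary-bands", "Salary bands by level", frozenset({"hr"})),
    Passage("vpn-policy", "VPN access rules", frozenset({"hr", "it", "employee"})),
]
visible = filter_by_role(candidates, user_roles={"employee"})
print([p.doc_id for p in visible])
```

Filtering at retrieval time, rather than after an answer is generated, means restricted text never enters the model's context in the first place.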

Continuous Improvement Loop

AI KBs aren’t “set and forget.” They improve through usage metrics, feedback loops, and automated content governance. For instance, when users consistently ignore certain results or flag them as unhelpful, the system can surface those for review or trigger content updates. This continuous improvement reduces knowledge rot and keeps the repository fresh and relevant.
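
A feedback loop can start as a simple report. The sketch below flags articles that users mostly rate as unhelpful, so an editor can review them. The thresholds (`min_votes`, `max_helpful_rate`) and the sample votes are assumptions for illustration, not recommended values.

```python
from collections import defaultdict

def articles_needing_review(
    feedback: list[tuple[str, bool]],
    min_votes: int = 5,
    max_helpful_rate: float = 0.5,
) -> list[tuple[str, float]]:
    """Return (article_id, helpful_rate) pairs for articles users mostly rate unhelpful."""
    votes = defaultdict(lambda: [0, 0])  # article_id -> [helpful_count, total_count]
    for article_id, was_helpful in feedback:
        votes[article_id][0] += int(was_helpful)
        votes[article_id][1] += 1
    flagged = [
        (article_id, helpful / total)
        for article_id, (helpful, total) in votes.items()
        if total >= min_votes and helpful / total < max_helpful_rate
    ]
    return sorted(flagged, key=lambda pair: pair[1])  # worst-rated first

# Made-up feedback events: (article_id, was_helpful).
feedback = (
    [("vpn-setup", False)] * 4 + [("vpn-setup", True)]
    + [("password-reset", True)] * 6
    + [("new-article", False)] * 2
)
flagged = articles_needing_review(feedback)
print(flagged)
```

The `min_votes` floor keeps a brand-new article with two bad ratings from being flagged before there is enough signal.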

Why AI Knowledge Bases Matter for Support, IT & HR

24/7 self-service for employees and customers

An AI KB lets users (employees or customers) ask questions at any time, even outside support hours, and get instant answers. This translates to a better experience, faster resolution, and higher satisfaction. As organizations scale across time zones, 24/7 self-service becomes indispensable.

Ticket deflection + faster MTTR

Routine, repetitive queries (password resets, onboarding, policy questions, FAQs) often dominate support tickets. An AI KB deflects such tickets by providing instant, accurate answers, freeing human agents for complex tasks. When complex questions do come through, agents start from an informed context. That reduces mean time to resolution (MTTR) and increases support capacity without adding headcount.

Consistent answers and reduced tribal knowledge

Unlike person-dependent knowledge (who remembers what?), an AI knowledge base offers consistent, up-to-date information across the organisation. That reduces reliance on tribal knowledge, minimizes conflicting advice, and keeps institutional memory intact, even as employees join or leave.

Lower maintenance cost vs manual KB teams

Maintaining traditional KBs often requires manual tagging, curation, and constant updates, a resource-heavy process. An AI KB automates much of this through ingestion pipelines, semantic tagging, feedback-based updates, and content lifecycle management. This significantly reduces overhead and keeps the knowledge base both current and scalable.

Benefits of an AI Knowledge Base

AI knowledge bases deliver measurable operational impact across support, IT, HR, and customer-facing teams. The benefits below focus on the outcomes that matter most to business and organisation leaders.

1. Immediate, Consistent Answers for Employees and Customers

Semantic retrieval and reasoning return relevant answers instantly, reducing time spent searching across Confluence, SharePoint, PDFs, and Slack threads. Everyone gets the same, validated response, eliminating inconsistent or outdated guidance.

2. Lower Ticket Volume and Faster Resolution (MTTR)

Routine questions are resolved without agent involvement, deflecting a meaningful share of support load. When agents do step in, they start with accurate context sourced from the KB, cutting investigation time and improving MTTR.

3. Preservation of Institutional Knowledge

AI Knowledge Bases centralize distributed documentation and reduce dependence on tribal knowledge. Workflows, policies, and historical decisions stay captured and discoverable, even during turnover or rapid scaling.

4. Reduced Maintenance Costs

Ingestion pipelines, semantic tagging, automated article suggestions, and feedback loops shrink the overhead of maintaining a large KB. Teams spend less time categorizing, updating, or rewriting content manually.

5. Foundation for Workflow Automation

Retrieval-backed answers can trigger automated actions such as ticket creation, access provisioning, and approvals, ensuring decisions follow documented policy. This makes the KB the operational backbone for ITSM, HR, and support automation.

Types of AI Knowledge Base Content

AI Knowledge Bases aren’t limited to one kind of content. They can handle a variety: structured, unstructured, static, or dynamic.

Structured SOPs and policies

Standard Operating Procedures (SOPs), policies, and compliance guidelines, usually stored as documents/spreadsheets, become searchable. Users can ask in natural language: “What’s our VPN access policy for contractors?” and get precise, up-to-date answers, even if the underlying doc is long and complex.

FAQs and troubleshooting flows

Common questions (password reset, account lockout, software install instructions) and decision trees benefit greatly. Instead of static FAQ pages, AI Knowledge Bases enable conversational retrieval: “Why can’t I access the VPN?” → returns the relevant troubleshooting flow or article.

Long-form technical documentation

Product docs, architecture guidelines, API references, and runbooks typically reside in wikis or versioned docs. An AI Knowledge Base indexes them, making deep, technical content accessible via plain-language queries.

Visual/video-based knowledge ingestion

Modern AI Knowledge Bases can index transcripts from videos, parse documents with diagrams or images, or store metadata about visuals. Users can ask: “Show me the deployment diagram for our cloud infra,” and get linked visuals or diagrams, useful for engineering and operations teams.

High-variance content (Diagrams, PDFs, HR docs)

Documents in heterogeneous formats (PDFs, scanned docs, HR forms, compliance paperwork) often escape traditional KBs. AI KB ingestion pipelines can normalize them, extract text and metadata, and make them searchable. This reduces silos, especially across departments.

Top AI Knowledge Base Software

Below is a comparison of typical platform categories, covering their strengths, weaknesses, and suitability for buyers evaluating AI knowledge base tools.

Why choose a retrieval-native system like Enjo:

- It unifies distributed documentation into a single source of truth. (See Enjo’s ability to consolidate Confluence, SharePoint, etc.)

- Faster and smarter indexing yields cleaner, more reliable answers, because the system can pre-index and optimise which data to include.

- In-chat article references build transparency and trust. (Users see where the AI got the answer.)

- Direct feedback on article quality surfaces content gaps.

- The AI can suggest new article ideas when documentation gaps become evident, proactively maintaining the KB.

If your organisation runs distributed teams or multiple document repositories (IT manuals, HR policy docs, support guides), retrieval-native systems deliver long-term scalability and value that traditional or collaboration KBs cannot match.

How to Build an AI Knowledge Base

Building an AI KB is a multi-phase process. Below is a framework tailored to your organisation's readiness.

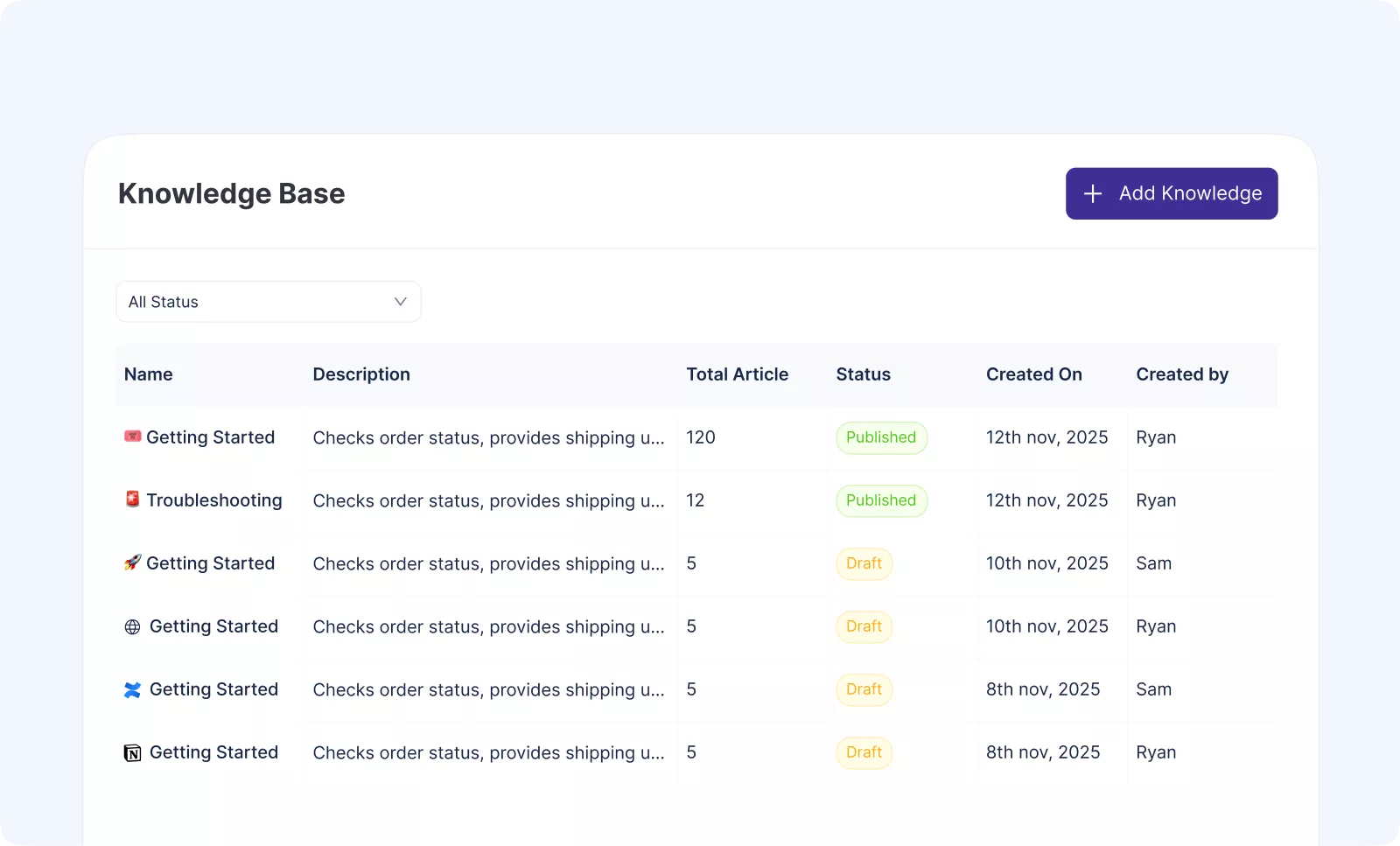

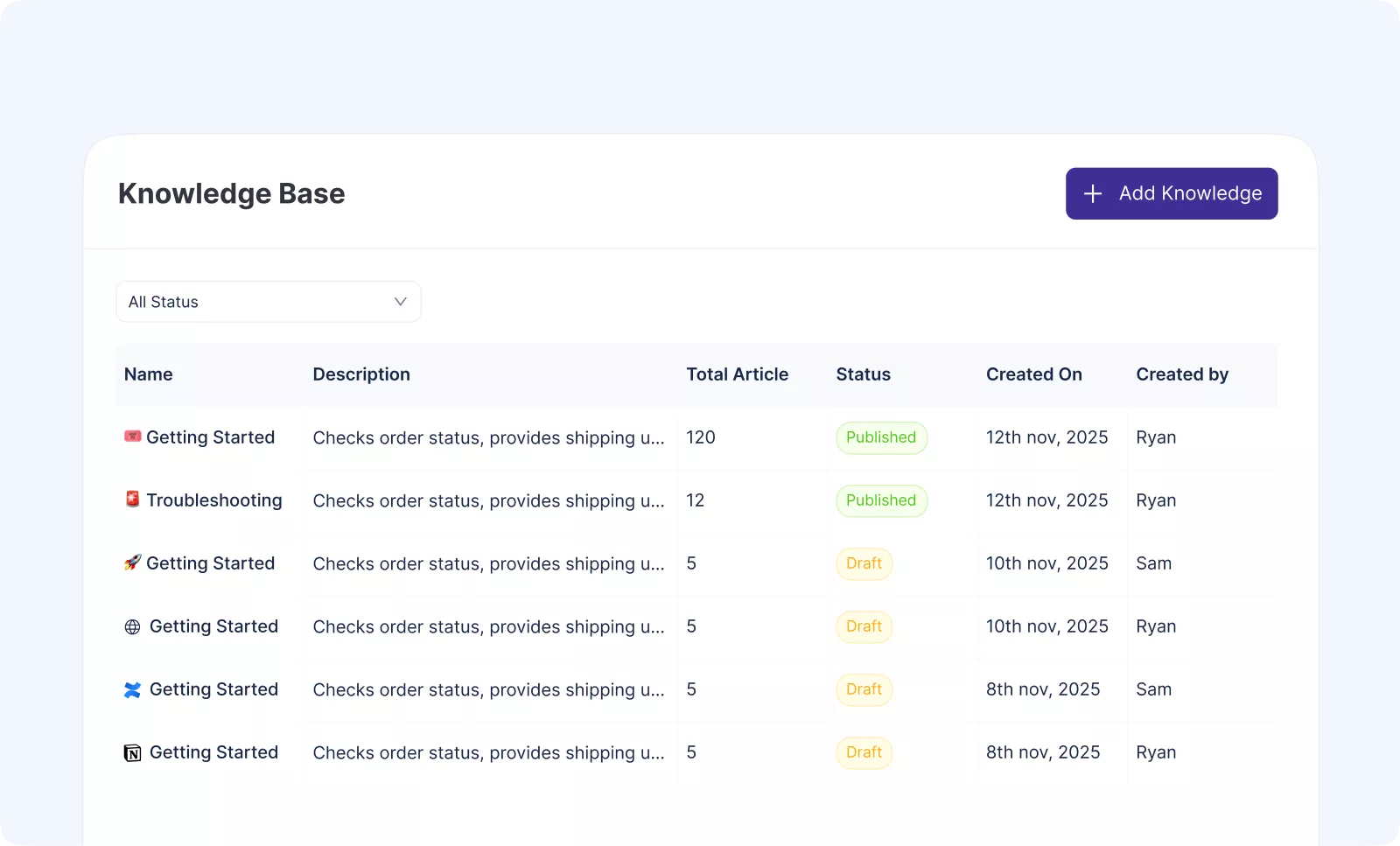

Enjo AI’s Help Centre lets teams consolidate their support documentation into one place, and instantly make it available to an AI support agent. It’s designed to be simple, fast to launch, and easy to maintain.

Phase 1 – Gather & Organise Your Content

Before creating a Help Centre, identify where your existing documentation currently lives.

Supported sources:

- Notion

- Confluence

- Your Website

- Manual articles created directly inside Enjo AI

Phase 2 – Connect Your Sources & Import Content

Enjo AI offers direct connectors for:

- Notion

- Confluence

- Public Website URLs

Once connected:

- Content is automatically fetched and transformed into Help Centre articles.

- Documents are automatically indexed for AI usage

- You can manually edit or rewrite the imported articles.

Additional features:

- AI Assistant inside each article can upgrade, rewrite, or improve your content instantly.

- Add metadata, SEO settings, category, and visibility.

Phase 3 – Add & Test Your AI Support Agent

Once your documentation is in place, the next step is to add your AI agent to the Help Centre. Steps:

- Open Help Centre → Settings.

- Add an AI Agent and link it to this Help Centre.

- Start testing by asking real customer questions.

- Observe the answers:

  - If the response is incomplete or inaccurate → update the corresponding article.

  - Re-test until answers become consistent.

Phase 4 – Customise, Configure & Publish

Once the agent is answering well, finish setup using:

Appearance:

- Add your logo

- Set title

- Choose colours

Installation:

- Connect a custom domain

- Enable/disable search engine indexing

- Set visibility (public, private)

- Allow AI agents to use this Help Centre as a knowledge source

Settings:

- Control AI Agent Access

- Decide if the AI Agent is customer-facing or internal-only

Phase 5 – Maintain & Update Your Help Centre

While Enjo AI doesn’t enforce governance workflows, teams can follow a lightweight maintenance strategy:

- Periodically review and update key articles.

- Use the article-level AI to improve clarity and accuracy.

- Test the AI agent after important updates.

- Re-import content if you update Notion or Confluence pages.

- Unpublish outdated articles instead of deleting them immediately.

This keeps your Help Centre and AI agent up to date with minimal overhead.

Integrating an AI Knowledge Base With Daily Workflows

For maximum impact, an AI KB must be embedded into daily work tools and workflows, not left as a separate portal.

Slack / Teams retrieval

Connect the AI knowledge base to collaboration tools (e.g., Slack, Microsoft Teams). Users can query the KB directly in their workflow. That reduces context switching, speeds up answers, and embeds knowledge access where work already happens.

Integrating with Jira / ServiceNow

When a query suggests a ticket (e.g., “I need access to X system”), the AI agent can trigger a workflow that automatically creates a ticket in Jira or ServiceNow, using retrieved docs as context. That accelerates request handling and reduces manual ticket creation.
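
The query-to-ticket step can be sketched as follows. Everything here is hypothetical: the `TicketDraft` type, the regular expression standing in for intent detection, and the field names. It does not reflect the Jira or ServiceNow APIs; submitting the draft would go through whichever connector your platform provides.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class TicketDraft:
    summary: str
    description: str
    references: list[str] = field(default_factory=list)

# Naive pattern standing in for real intent detection (an assumption).
ACCESS_REQUEST = re.compile(r"\bneed access to (?P<system>.+?)(?:[.!?]|$)", re.IGNORECASE)

def draft_ticket(message: str, retrieved_docs: list[str]) -> Optional[TicketDraft]:
    """Return a ticket draft if the message looks like an access request, else None."""
    match = ACCESS_REQUEST.search(message)
    if match is None:
        return None
    system = match.group("system").strip()
    return TicketDraft(
        summary=f"Access request: {system}",
        description=f"User message: {message}",
        references=list(retrieved_docs),  # docs retrieved for this query travel with the ticket
    )

draft = draft_ticket("I need access to the billing dashboard.", ["access-policy"])
print(draft.summary)
```

Attaching the retrieved document IDs to the draft is what lets the assigned agent see which policy the request was matched against.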

Triggering workflows from retrieved content

Beyond lookups, certain retrieved content (e.g. access policy, compliance requirements) may trigger actions such as approvals, notifications, or orchestration workflows. Embedding an AI Knowledge Base in workflow automation ensures decisions follow documented policy and reduces the risk of human error.

Conclusion: 5-Item Action Checklist

- Inventory all documentation sources: identify silos, duplicates, outdated content.

- Pilot an AI knowledge base (connect 2–3 doc sources, ingest and index content).

- Tune semantic search and RAG settings; test with real user queries.

- Integrate the AI KB with daily tools (Slack/Teams, Jira/ServiceNow).

- Define content governance, review cycles, and a feedback loop, then track metrics: ticket deflection rate, MTTR reduction, and user adoption.
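
The metrics in the last checklist item reduce to simple arithmetic. The sketch below shows one reasonable way to compute them; the exact definitions vary between teams, and all the numbers are made up for illustration.

```python
def deflection_rate(resolved_by_kb: int, total_requests: int) -> float:
    """Share of incoming requests resolved by the KB without a human agent."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return resolved_by_kb / total_requests

def mttr_hours(resolution_times_hours: list[float]) -> float:
    """Mean time to resolution: the average of per-ticket resolution times."""
    if not resolution_times_hours:
        raise ValueError("need at least one resolved ticket")
    return sum(resolution_times_hours) / len(resolution_times_hours)

def mttr_reduction(before: float, after: float) -> float:
    """Relative MTTR improvement; 0.25 means tickets resolve 25% faster."""
    return (before - after) / before

# Illustrative figures only, not benchmarks.
rate = deflection_rate(resolved_by_kb=300, total_requests=1000)
before = mttr_hours([8.0, 12.0, 10.0])
after = mttr_hours([6.0, 9.0, 7.5])
reduction = mttr_reduction(before, after)
print(f"deflection {rate:.0%}, MTTR {before:.1f}h -> {after:.1f}h ({reduction:.0%} faster)")
```

Measuring a baseline before the pilot matters more than the formulas themselves, since both figures are only meaningful as a before-and-after comparison.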

Frequently Asked Questions

What is a knowledge base in AI?

An AI knowledge base is an intelligent repository that stores, indexes, and retrieves information using semantic search, NLP, and ML, offering contextual answers rather than simple keyword matches.

What is a knowledge-based approach in AI?

This refers to using structured or unstructured domain-specific data (documents, policies, manuals) as a basis for AI systems to answer queries, instead of relying purely on general LLM training data. Retrieval-first systems, often with RAG, embody this approach.

What is a knowledge base file?

A knowledge base file denotes any document (text, PDF, spreadsheet, media) ingested into an AI KB. Once processed, it becomes part of the searchable, structured content pool used for embeddings and retrieval.

How secure are AI Knowledge Bases?

Security depends on the system. Enterprise-grade AI KBs implement SSO, role-based access control (RBAC), audit logging, and encryption in transit and at rest. Sensitive content can be restricted to authorised roles.

Can AI Knowledge Bases integrate with Confluence or SharePoint?

Yes, modern AI Knowledge Base platforms support connectors for common document repositories like Confluence and SharePoint, enabling ingestion and indexing of existing content without migration.
