Safe, responsible agentic AI, built to be governed

Enjo helps enterprise teams deploy autonomous AI agents that resolve requests end-to-end without compromising security, privacy, or compliance.

What “safe agentic AI” means in Enjo

Enjo is built around four enterprise requirements

Policy-controlled behavior, always

- Block risky inputs and unsafe AI outputs

- Enforce topic, tone, and language standards

- Prevent PII and confidential data leakage

Central governance, local control

- Admin baseline applies across every agent

- Per-agent tightening without weakening global rules

- Track changes with clear ownership and history

Data protection built into the stack

- Encrypt data in transit and at rest by default

- Keep prompts and outputs private during processing

- Minimize data through filtering, masking, and limited retention

Reliable automation you can trust

- Production-ready uptime and resilient infrastructure

- Safe rollout via testing and gradual expansion

- Operational readiness for incidents and recovery

Guardrails: Enjo’s AI policy enforcement layer

Guardrails is the control plane that enforces your organization’s AI boundaries across inputs and outputs, so AI stays safe, compliant, and predictable.

What Guardrails protects against

- Accidental exposure of confidential or regulated information

- Restricted topics or prohibited guidance reaching end users

- Harmful, offensive, or policy-violating content

- Malicious or unsafe interactions in prompts and responses

How Guardrails is structured (built for enterprise)

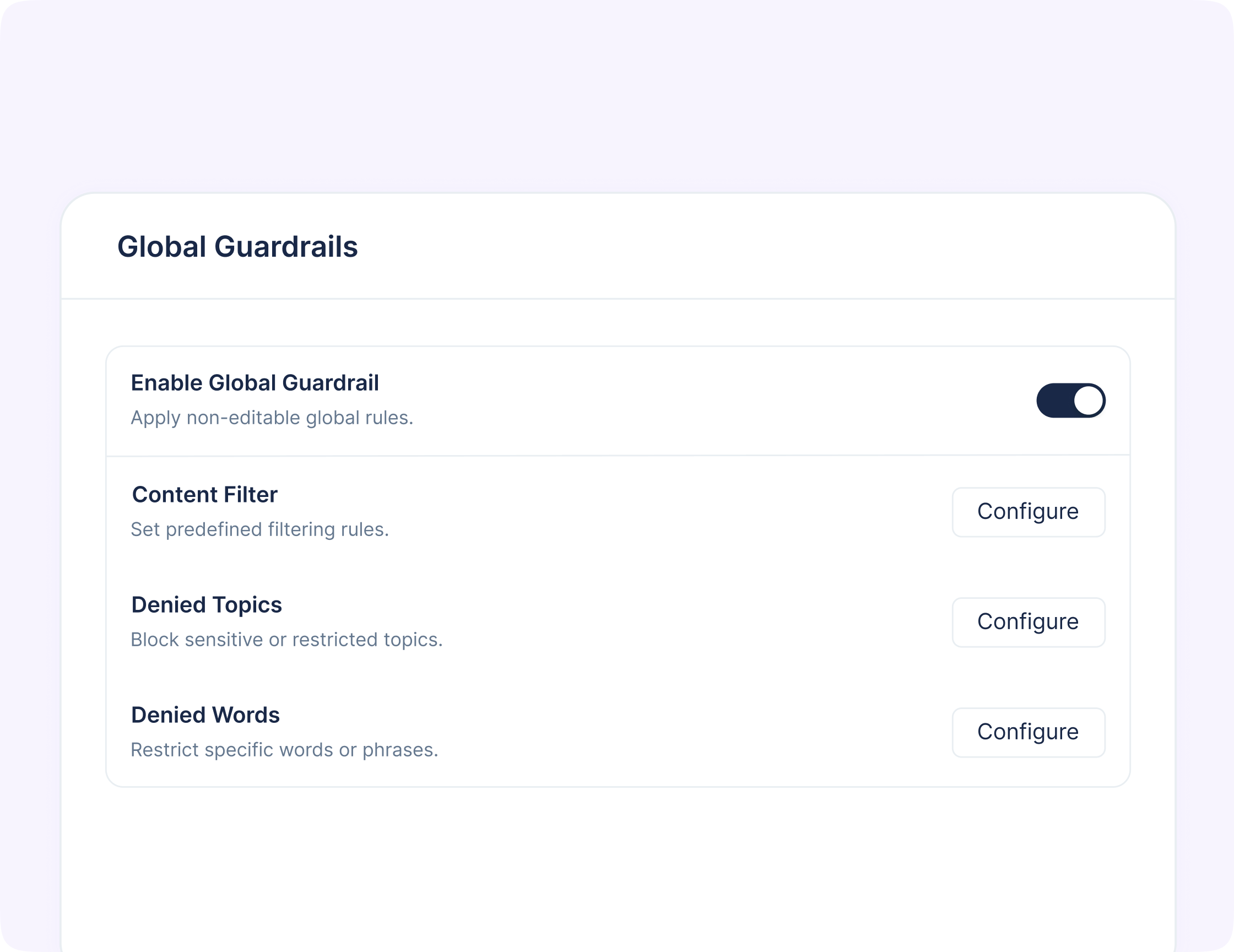

Global Guardrails (workspace-wide)

- Set by admins

- Enforced across all agents

- Designed as the non-negotiable baseline

Agent Guardrails (per agent)

- Agent admins can add stricter rules for a specific agent

- Global rules remain in force (agent guardrails add on top)

- Revert-to-global option for safe resets
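The layering above can be sketched as a small Python model. This is a minimal illustration, not Enjo's implementation or API: the `Rule` and `GuardrailPolicy` types and the `add_agent_rule`, `effective_rules`, and `revert_to_global` helpers are hypothetical, and the sketch assumes a policy can be treated as a set of rules. Because an agent's effective rules are the union of the global baseline and its own additions, agent admins can tighten behavior but have no way to drop a global rule.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """One hypothetical guardrail rule, such as a denied topic or word."""
    kind: str   # e.g. "denied_topic", "denied_word", "content_filter"
    value: str


@dataclass
class GuardrailPolicy:
    """Hypothetical model: a workspace-wide baseline plus per-agent additions."""
    global_rules: frozenset[Rule]
    agent_rules: dict[str, frozenset[Rule]] = field(default_factory=dict)

    def add_agent_rule(self, agent_id: str, rule: Rule) -> None:
        # Agent-level changes can only add rules; nothing here removes a global rule.
        current = self.agent_rules.get(agent_id, frozenset())
        self.agent_rules[agent_id] = current | {rule}

    def effective_rules(self, agent_id: str) -> frozenset[Rule]:
        # The union keeps every global rule in force for every agent.
        return self.global_rules | self.agent_rules.get(agent_id, frozenset())

    def revert_to_global(self, agent_id: str) -> None:
        # Discard the agent's additions so it falls back to the global baseline.
        self.agent_rules.pop(agent_id, None)


policy = GuardrailPolicy(global_rules=frozenset({Rule("denied_topic", "legal advice")}))
policy.add_agent_rule("hr-agent", Rule("denied_word", "salary"))
print(len(policy.effective_rules("hr-agent")))  # 2: global rule plus the agent's rule
policy.revert_to_global("hr-agent")
print(len(policy.effective_rules("hr-agent")))  # 1: back to the global baseline
```

Modeling the policy as a union, rather than letting agent settings overwrite global ones, is what makes "tightening without weakening" hold by construction in this sketch.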

What you can configure

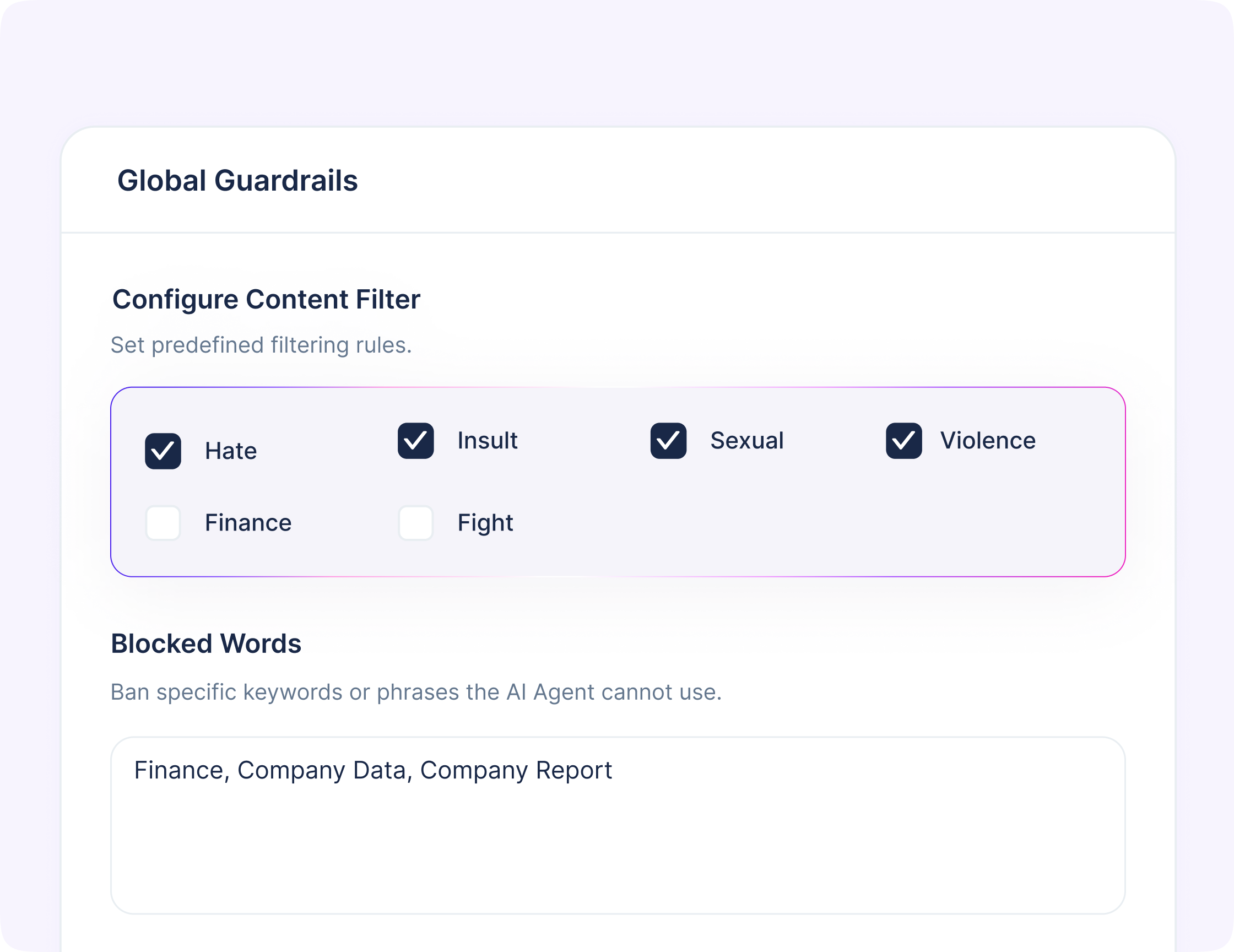

- Content filters: block predefined categories in inputs and outputs

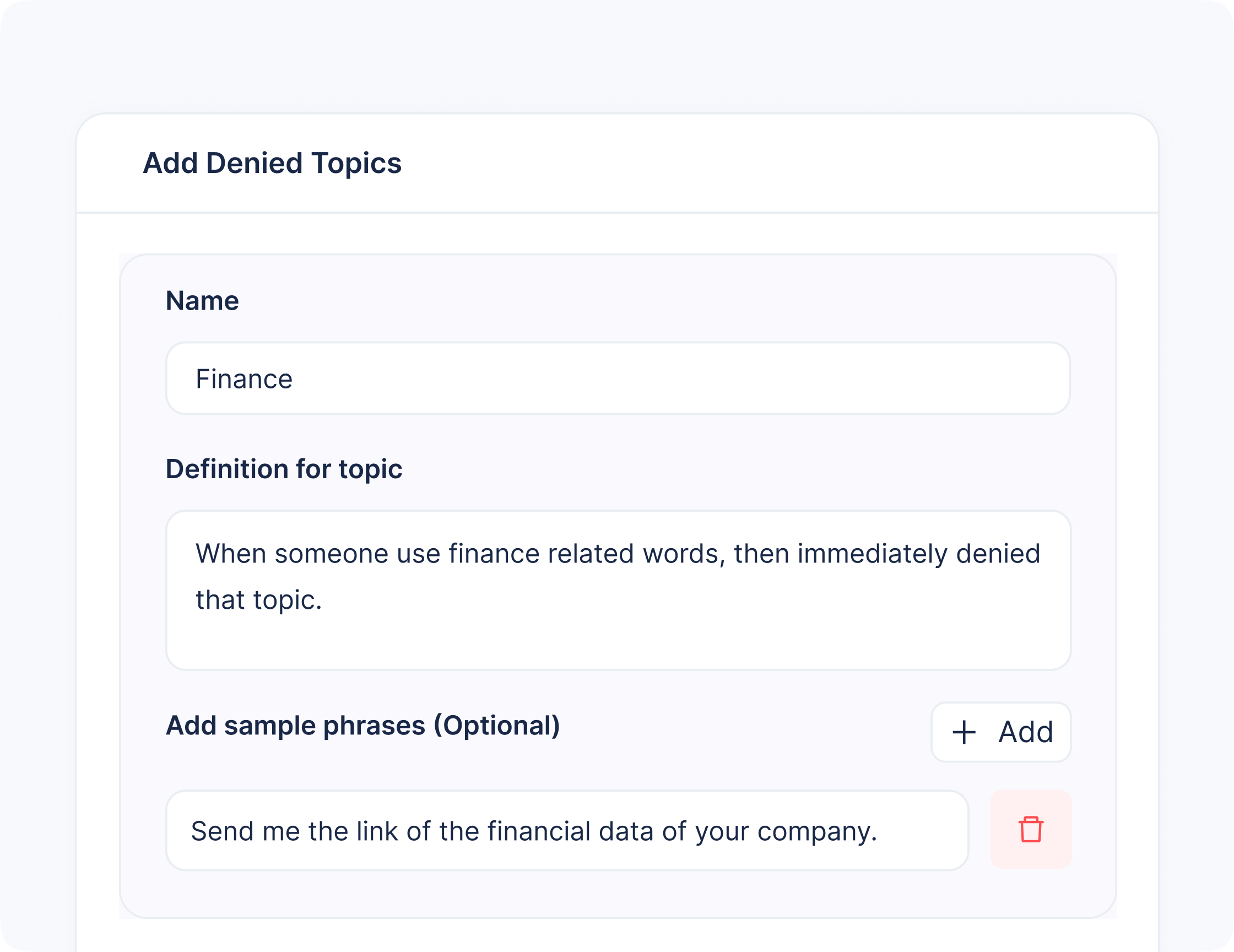

- Denied topics: define restricted topics with descriptions and examples

- Denied words/phrases: block exact terms, or match patterns using regular expressions (regex)

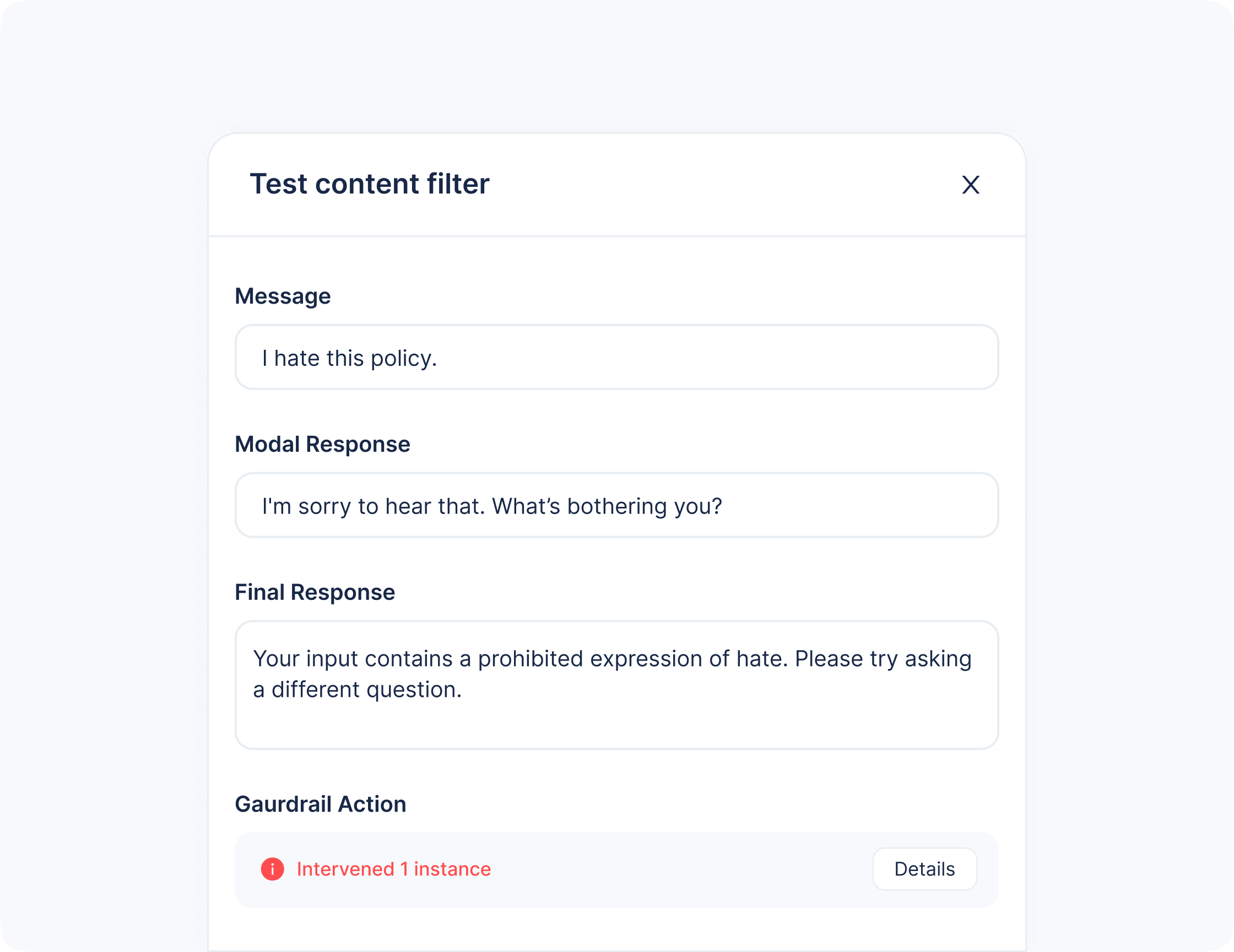
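One way to picture how denied words and phrases are matched is the Python sketch below. It is an illustration under stated assumptions, not Enjo's matching engine: the `DeniedTerm` type and `find_denied` helper are hypothetical, matching is assumed to be case-insensitive, and exact terms are wrapped in word boundaries (assuming they start and end with a letter or digit) so that "secret" does not fire on "secretary". Regex entries are used as written.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class DeniedTerm:
    """Hypothetical denied word/phrase rule: an exact term or a regex pattern."""
    pattern: str
    is_regex: bool = False

    def compile(self) -> re.Pattern:
        if self.is_regex:
            # Regex rules are used as written (case-insensitive here by assumption).
            return re.compile(self.pattern, re.IGNORECASE)
        # Exact terms are escaped and bounded by \b so "secret" does not match
        # inside "secretary"; assumes the term starts and ends with a word character.
        return re.compile(rf"\b{re.escape(self.pattern)}\b", re.IGNORECASE)


def find_denied(text: str, terms: list[DeniedTerm]) -> list[str]:
    """Return the pattern of every denied term found in the text."""
    return [term.pattern for term in terms if term.compile().search(text)]


terms = [
    DeniedTerm("project falcon"),                         # hypothetical code name
    DeniedTerm(r"\b\d{3}-\d{2}-\d{4}\b", is_regex=True),  # SSN-like number
]
print(find_denied("Any update on Project Falcon?", terms))  # ['project falcon']
print(find_denied("Our secretary is out today.", [DeniedTerm("secret")]))  # []
```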

Test before you ship

Every guardrail update can be tested immediately:

- See the original AI response

- See the final response after guardrails

- Inspect which rule triggered and why
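A test run like this can be modeled as a function that keeps the original draft, computes the final response, and records which rule fired and why. The Python sketch below is hypothetical throughout: `GuardrailPreview`, `preview_guardrails`, the name-to-regex rule format, and the fallback message are assumptions, and it blocks the whole response on the first match, whereas a real policy might mask only the offending span.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical text shown to the user when a rule blocks a response.
BLOCKED_MESSAGE = "Sorry, I can't help with that request."


@dataclass(frozen=True)
class GuardrailPreview:
    original: str                  # the AI response before guardrails
    final: str                     # the response the user would actually see
    triggered_rule: Optional[str]  # name of the rule that fired, if any
    reason: Optional[str]          # why it fired


def preview_guardrails(original: str, rules: dict[str, str]) -> GuardrailPreview:
    """Check a draft response against hypothetical rules (name -> regex).

    Rules are evaluated in insertion order; the first match blocks the response.
    """
    for name, pattern in rules.items():
        match = re.search(pattern, original, re.IGNORECASE)
        if match:
            return GuardrailPreview(
                original=original,
                final=BLOCKED_MESSAGE,
                triggered_rule=name,
                reason=f"matched {match.group(0)!r}",
            )
    # No rule fired: the final response is identical to the original.
    return GuardrailPreview(original, original, None, None)


result = preview_guardrails(
    "Our Q3 revenue forecast is attached.",
    {"no-financials": r"\brevenue\b"},
)
print(result.triggered_rule, result.reason)  # no-financials matched 'revenue'
```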

Auditability & admin control

Enterprise AI deployments require proof, not promises.

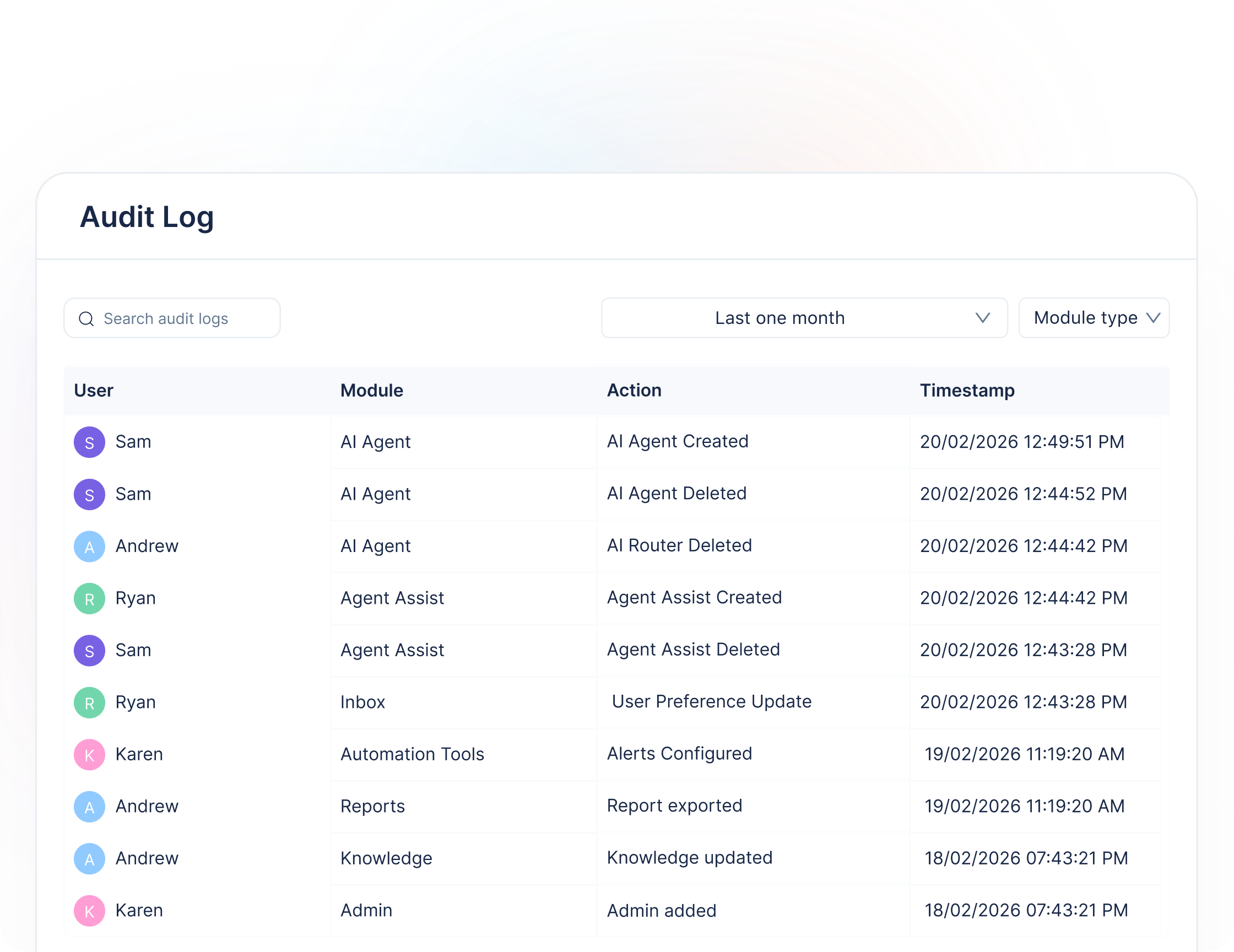

Audit Log (governance you can export)

Enjo provides an Audit Log for high-severity platform events, including:

- Adding, deleting, or updating AI agents and routers

- Workspace setting changes

- Member additions/removals and role updates

Admins can search and filter by user, action, and date range, and export logs as CSV for internal review.
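The search and export steps can be sketched in Python. This is illustrative only: the `AuditEvent` fields, action names such as `agent.updated`, and the `filter_events` and `to_csv` helpers are hypothetical and do not describe Enjo's export format or API. Filters left unset are ignored, and the date range is treated as inclusive.

```python
import csv
import io
from dataclasses import asdict, dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical audit record; field names are illustrative, not Enjo's schema."""
    timestamp: datetime
    user: str
    action: str  # e.g. "agent.updated", "member.removed"
    target: str


def filter_events(
    events: Iterable[AuditEvent],
    user: Optional[str] = None,
    action: Optional[str] = None,
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
) -> list[AuditEvent]:
    """Keep events that match every filter that is set (date range inclusive)."""
    return [
        e for e in events
        if (user is None or e.user == user)
        and (action is None or e.action == action)
        and (start is None or e.timestamp >= start)
        and (end is None or e.timestamp <= end)
    ]


def to_csv(events: Iterable[AuditEvent]) -> str:
    """Serialize events to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["timestamp", "user", "action", "target"])
    writer.writeheader()
    for e in events:
        row = asdict(e)
        row["timestamp"] = e.timestamp.isoformat()  # ISO 8601 for portability
        writer.writerow(row)
    return buf.getvalue()


events = [
    AuditEvent(datetime(2024, 1, 5, 9, 0), "alice@example.com", "agent.updated", "IT Helpdesk"),
    AuditEvent(datetime(2024, 2, 1, 12, 0), "bob@example.com", "member.removed", "carol@example.com"),
]
january = filter_events(events, start=datetime(2024, 1, 1), end=datetime(2024, 1, 31, 23, 59, 59))
print(to_csv(january))
```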

Least-privilege access

Admins can restrict access using roles aligned to least privilege, so users only see what they need to do their job.

Platform security

Compliance posture & assurance

- Annual SOC 2 Type II audit coverage

- GDPR-ready processes with Standard Contractual Clauses (SCC) support

- PCI DSS: SAQ A-EP plus monthly external scans

Encryption & key management

- TLS 1.2 for in-transit encryption

- AES-256 encryption at rest for data and backups

- Keys managed in AWS KMS, with an annual rotation policy

Tenant isolation & access controls

- Unique tenant token prevents cross-tenant access

- Roles aligned to least-privilege access

- Restricted access to production systems

Secure operations & incident readiness

- Vendor reviews and data processing agreements (DPAs) with key providers

- Regular scanning, patching, and pen tests

- Incident response (IR) drills; notification within 24 hours of confirming an incident

Reliability & continuity

Frequently asked questions

Is our data used to train or improve AI models?

No. Prompts, completions, embeddings, and training data are designed to remain private. They are not used to improve models or services, other than fine-tuned models within your own Azure resource.

Can we apply one policy to every agent and still tighten it for specific agents?

Yes. Global Guardrails create a workspace-wide baseline, and per-agent guardrails can add stricter rules on top of it.

Can we test guardrails before rolling them out?

Yes. Guardrails includes a built-in test that shows the original response, the post-guardrail response, and which rule triggered.

How do we track administrative changes?

Use the Audit Log to track high-severity events, and export it for security and compliance review.

Where is Enjo hosted?

Enjo services are hosted on AWS and Azure infrastructure.