Best AI Knowledge Base Software Tools for 2026: Comparison, Features and Pricing

Support teams learned the hard way that AI is only as good as the knowledge it can retrieve. With customers expecting instant, accurate answers, the era of static wikis and keyword search is over. AI-native knowledge bases now sit at the center of support operations, powering reliable automation and consistent agent performance. This guide breaks down the best AI-native knowledge base platforms and what actually matters when building a retrieval-first foundation for modern support.

What is AI Knowledge Base Software in 2026?

AI knowledge base software going into 2026 goes beyond hosting articles. It acts as a retrieval system that understands questions, pulls the right information, and delivers verified answers across every support channel.

The shift is simple: instead of forcing people to search, AI assembles the answer for them. This is driven by two realities:

- Enterprise knowledge is spread across too many tools, and

- Modern AI models need structured, high-quality information to perform reliably.

As a result, the knowledge base has become the intelligence layer that powers AI agents, reduces ticket load, and keeps organizational knowledge consistent.

Detailed Guide on: AI Knowledge Base -->

How AI Knowledge Bases Work Behind the Scenes

Modern AI knowledge bases combine three core components:

- Retrieval engine: Uses semantic search and vector indexing to understand intent, not just keywords.

- Reasoning layer: Generates answers grounded in specific, cited content rather than guessing.

- Freshness and consistency checks: Automatically flags outdated, conflicting, or missing information.

Together, they turn documentation into a dependable source of truth for both humans and AI systems.
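As a rough illustration of the retrieval step, the sketch below ranks knowledge chunks against a question and returns the best match along with its source. It substitutes simple word-count cosine similarity for a real embedding model and vector index, and the chunk IDs and text are invented for the example.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge chunks: (source id, text).
CHUNKS = [
    ("billing-faq#refunds", "Refunds are issued to the original payment method within five days."),
    ("auth-guide#password", "To reset your password, open Settings and choose Reset password."),
    ("api-docs#tokens", "To reset an API token, revoke it in the developer console and create a new one."),
]

def vectorize(text):
    """Stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, chunks=CHUNKS, k=1):
    """Return the k best-scoring chunks as (score, source, text), best first."""
    q = vectorize(question)
    scored = [(cosine(q, vectorize(text)), source, text) for source, text in chunks]
    return sorted(scored, reverse=True)[:k]

score, source, text = retrieve("How do I reset my API token?")[0]
print(f"{text} [source: {source}]")
```

Returning the source ID with the text is what makes a cited answer possible later in the pipeline.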

Why 2026 Models Demand Better Information Architecture

LLMs are more powerful in 2026, but they’re also more sensitive to messy knowledge. Poor structure leads directly to unreliable answers.

Key requirements:

- Clear chunking and metadata so retrieval engines surface the right sections

- Consistent terminology across teams and documents

- Reduced duplication and cleaner version control

- Connected sources instead of scattered files across tools

Strong information architecture is now the primary driver of AI answer quality.
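To make "chunking and metadata" concrete, here is a minimal sketch that splits an article into one chunk per section and attaches the document ID and section name to each. It assumes sections are marked with `## ` headings; the `Chunk` fields and the sample article are hypothetical, and real platforms may chunk quite differently.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    doc_id: str   # which article the text came from
    section: str  # heading the text sits under
    text: str

def chunk_article(doc_id, markdown_text):
    """Split an article into one chunk per '## ' heading section."""
    chunks, section, lines = [], "Introduction", []
    for line in markdown_text.splitlines():
        if line.startswith("## "):
            # Close the previous section if it has any content.
            if any(l.strip() for l in lines):
                chunks.append(Chunk(doc_id, section, "\n".join(lines).strip()))
            section, lines = line[3:].strip(), []
        else:
            lines.append(line)
    if any(l.strip() for l in lines):
        chunks.append(Chunk(doc_id, section, "\n".join(lines).strip()))
    return chunks

article = """Welcome to the billing guide.

## Refunds
Refunds go back to the original payment method.

## Invoices
Invoices are emailed on the first of each month.
"""

for c in chunk_article("billing-guide", article):
    print(c.doc_id, "|", c.section, "|", c.text)
```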

AI-Native vs AI-Assisted: The Real Distinction

AI-Assisted Knowledge Bases

Traditional systems with AI features added on top. They help teams search and maintain content but don’t fundamentally change support workflows.

Traits: Manual structuring, limited unification across tools, and moderate impact on automation.

AI-Native Knowledge Bases

Built to power AI from the ground up. Retrieval-first, multi-source ingestion, automated content validation, and answer generation with guardrails.

Traits: Reliable AI agents, unified knowledge, and documentation that actively drives automation.

Detailed Reading on AI Native vs AI Assisted here -->

Why Support and Ops Teams are Moving to AI-Native Knowledge Bases

Support and operations teams are shifting to AI-native knowledge bases because traditional systems can’t keep up with rising ticket volume, fragmented information, and the expectations created by modern AI agents. AI-native platforms turn documentation into an operational engine: they retrieve, assemble, and verify answers automatically, reducing manual effort across every channel.

This shift isn’t about “adding AI.” It’s about building knowledge in a way AI can reliably use.

Reduce resolution times with retrieval-aware answers

AI-native systems understand the intent behind a question and retrieve only the relevant parts of your knowledge, not an entire article. This produces faster, more accurate responses for both customers and agents. Instead of digging through docs or escalating to senior staff, the answer arrives instantly, grounded in trusted content.

The result: shorter queues, fewer back-and-forth messages, and far less variation in agent performance.

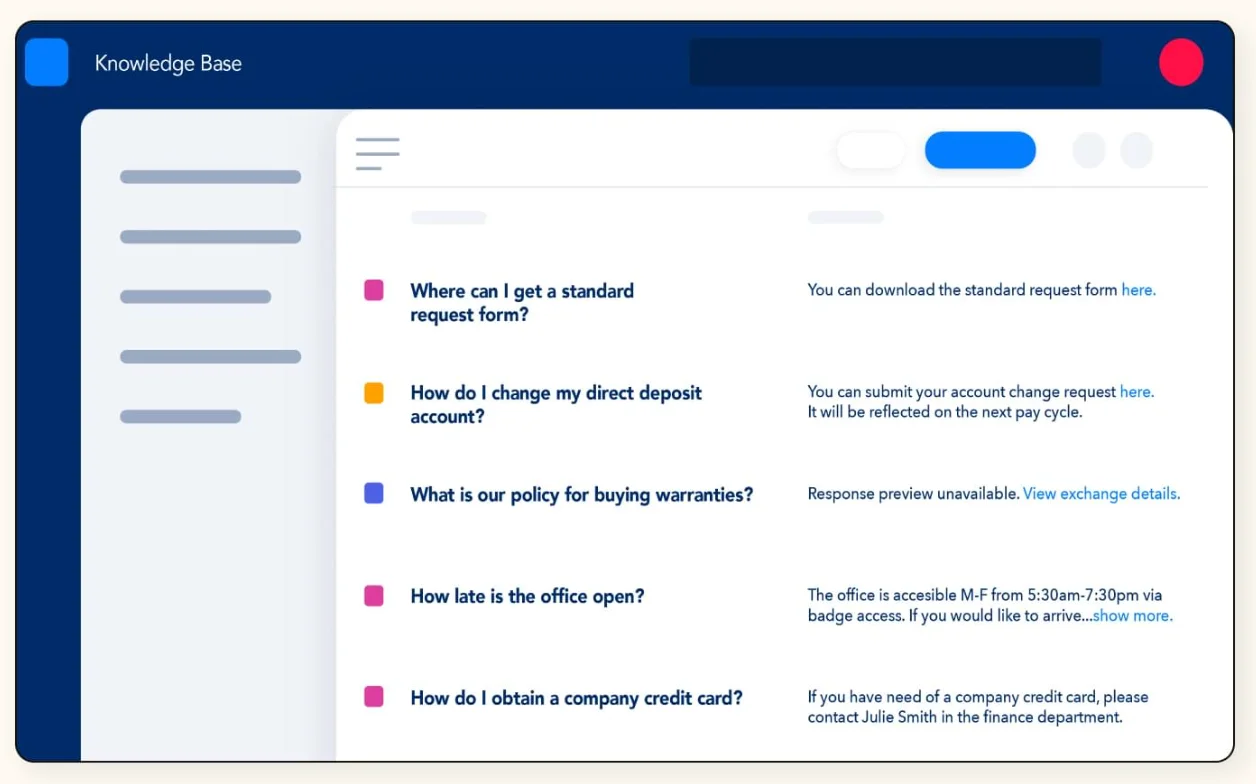

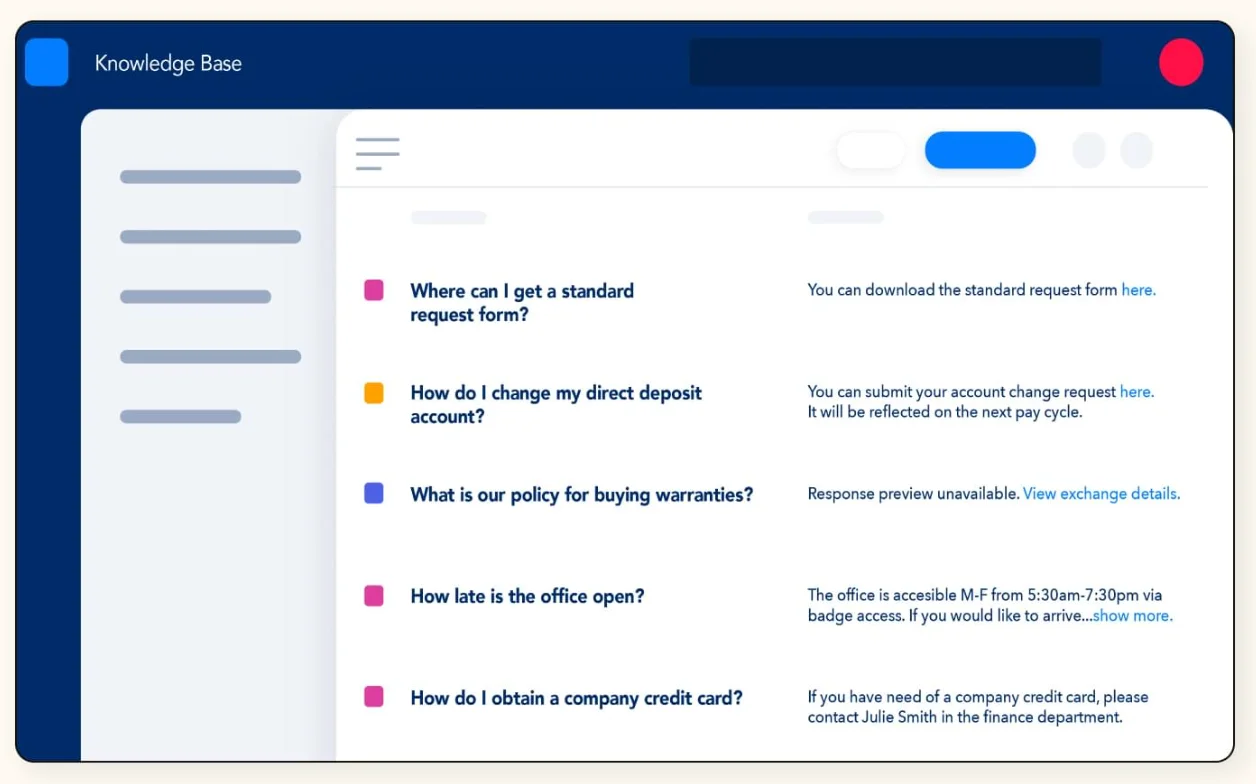

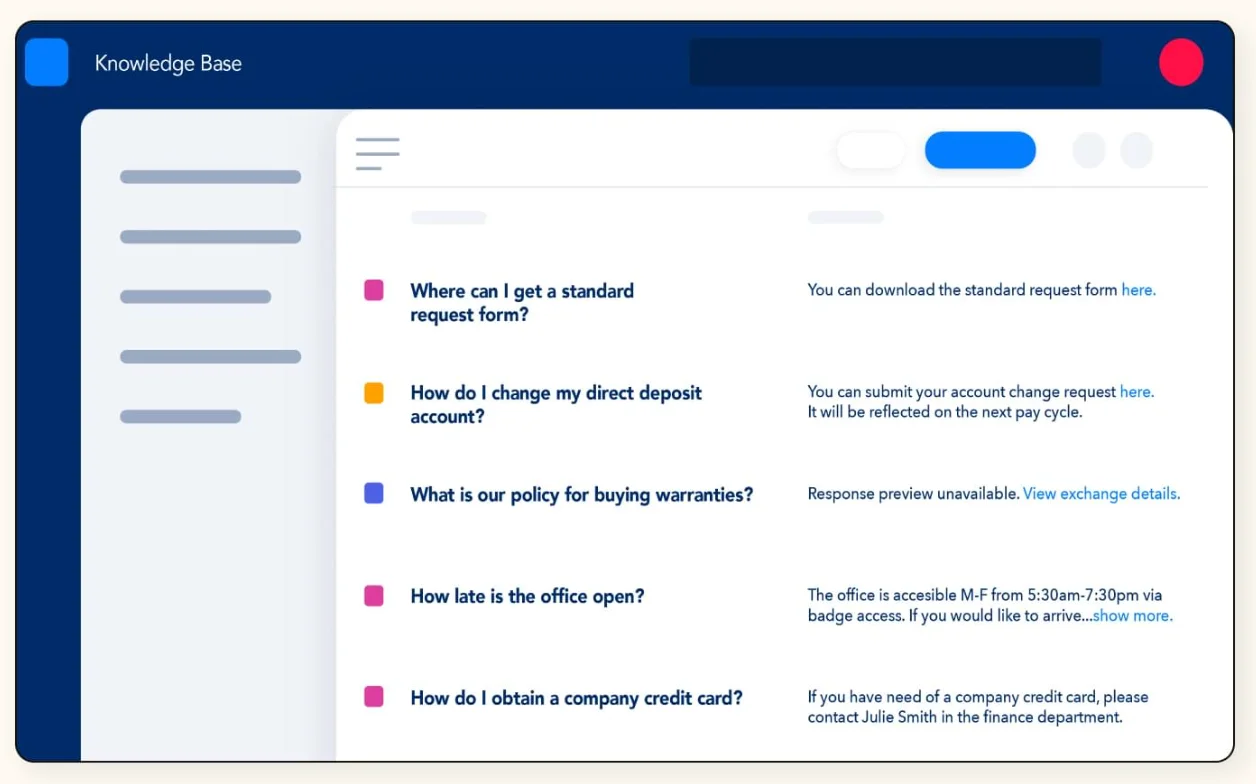

Enable self-service that doesn’t break under edge cases

Most self-service tools fail when customers phrase questions unexpectedly or ask something not covered in a perfectly structured help article. Retrieval-aware systems handle this better. They map the question to the closest matching knowledge, assemble an answer, and cite the underlying source.

Even edge cases (partial questions, unfamiliar terminology, long-tail issues) are handled more gracefully. Self-service becomes dependable instead of risky.

Automate content creation, tagging, and upkeep

AI-native platforms don’t just answer questions; they also help maintain the knowledge itself. They auto-tag documents, suggest missing articles, detect contradictions, and flag outdated content based on product changes or new ticket patterns.

Instead of periodic documentation “cleanups,” teams get continuous maintenance with far less manual effort.

Scale support without scaling headcount

As ticket volume grows, legacy knowledge bases force teams to add seats or rely on more manual triage. AI-native systems invert that dynamic. Retrieval, reasoning, and verification happen automatically, allowing AI agents and assisted workflows to resolve a larger share of incoming issues.

Support teams can absorb growth without constant headcount increases, improving both cost predictability and team efficiency.

Improve agent confidence with verified responses

AI-native systems generate answers with visible citations and source references, giving agents confidence that the AI is pulling from approved, up-to-date content. This eliminates the uncertainty that comes from hallucinated or outdated answers.

New agents onboard faster, experienced agents rely less on tribal knowledge, and overall accuracy improves.

The 8 Best AI Knowledge Base Platforms for 2026

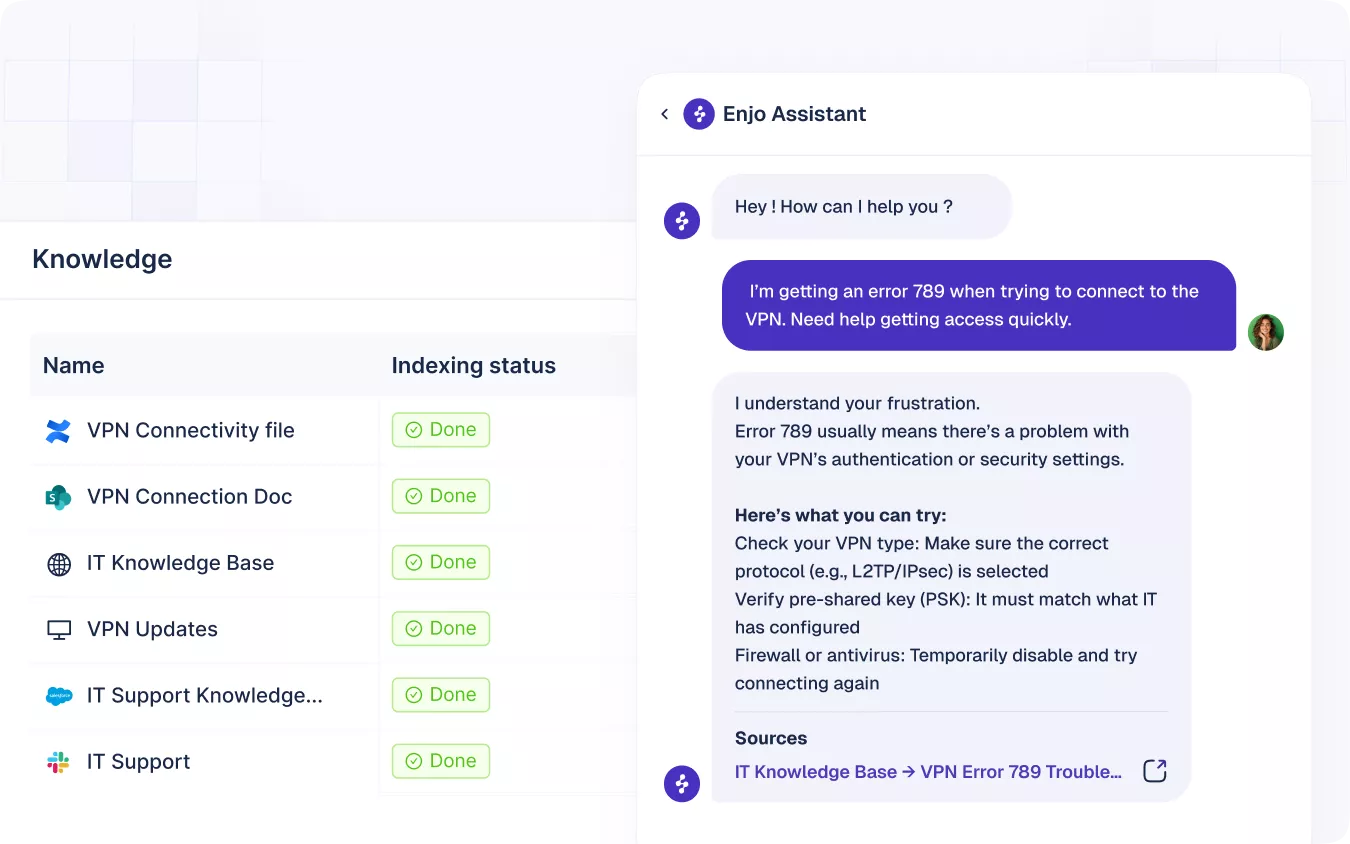

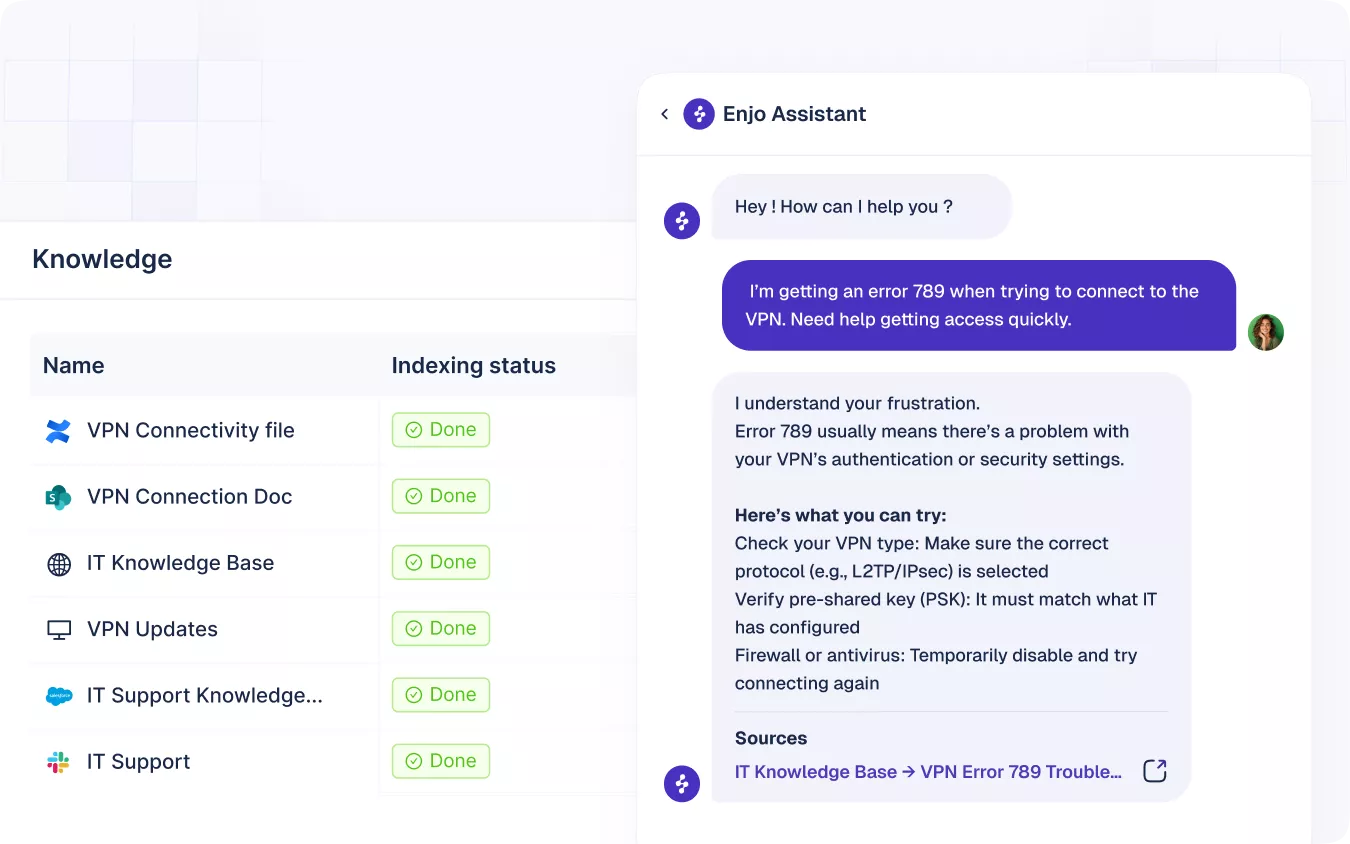

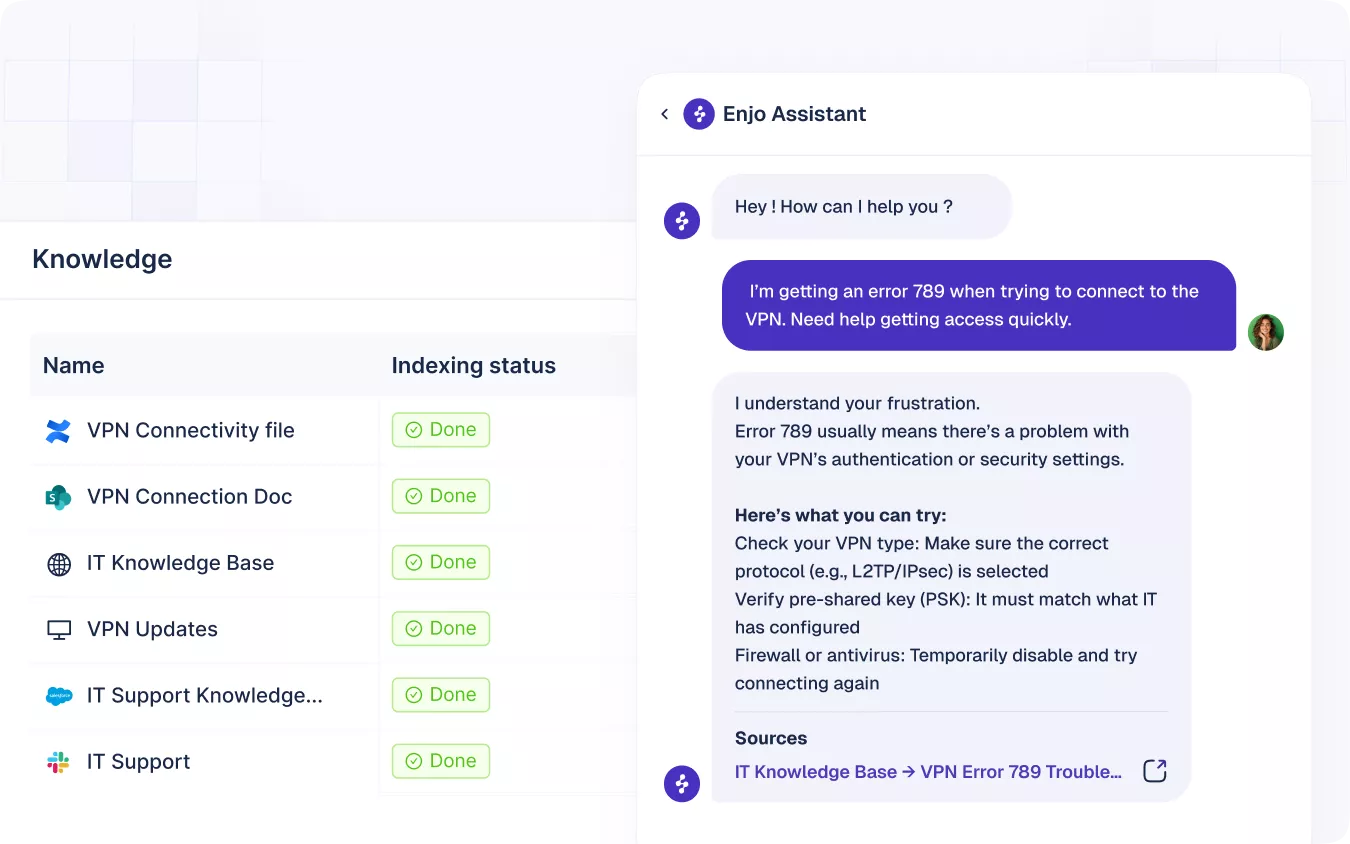

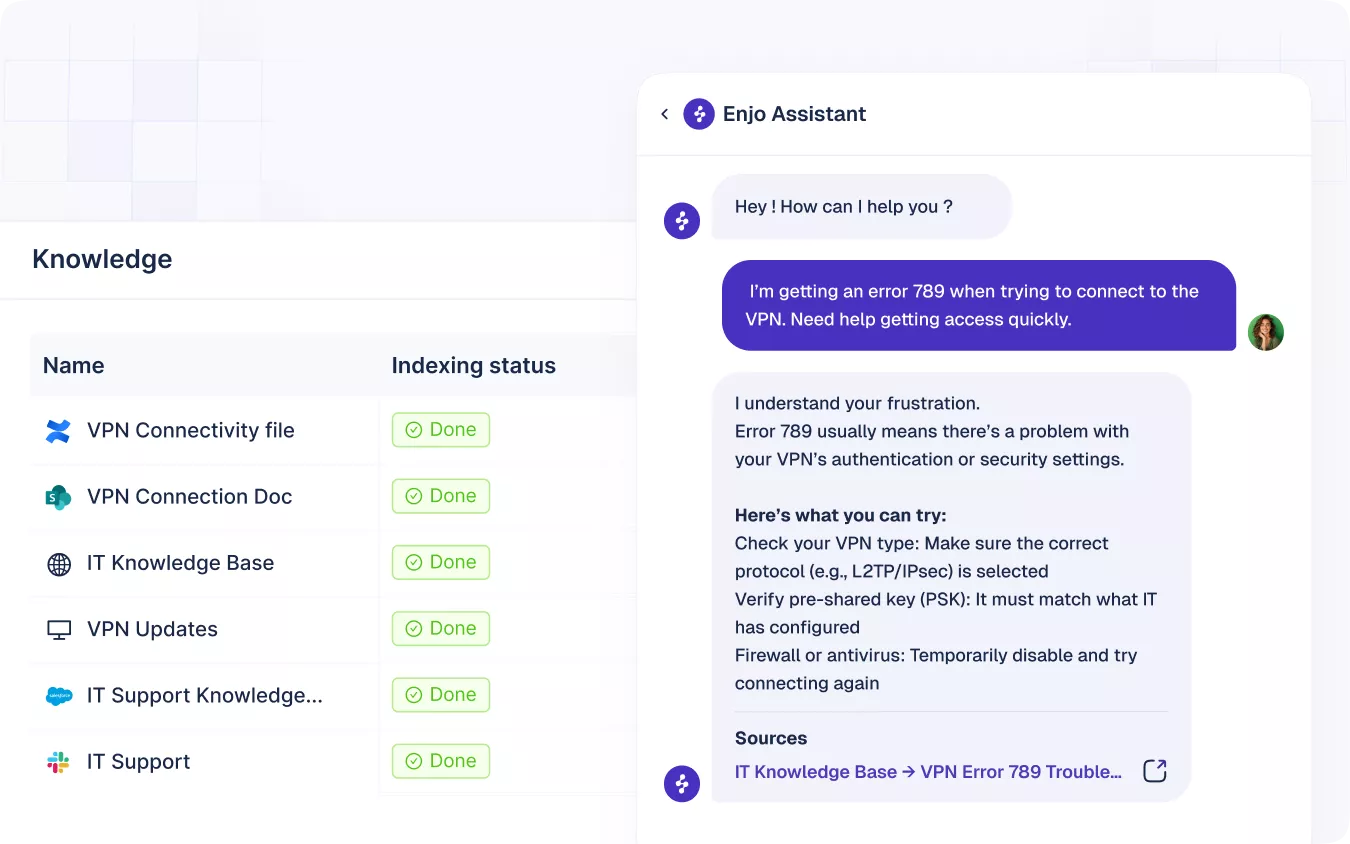

Enjo AI

Best for: IT/Ops teams (internal) and Customer Support orgs that want to layer AI automation over Slack, Teams, and WebChat without paying "per-seat" penalties for human agents.

Top AI strengths: Instant Knowledge Sync (auto-ingests Notion, Confluence, Website URLs), "Actionable" AI (triggers workflows), and unified conversational support across Web & Chat apps.

Key caveats: Core plans include strict quotas on monthly AI replies and Knowledge Blocks, requiring close monitoring for high-volume operations.

Pricing: Disruptive model. Free Tier available; Starter $95/mo; Standard $490/mo.

Crucial nuance: Plans include unlimited human agent seats and unlimited AI agents/channels.

Buyer fit: Pick Enjo if you hate per-agent pricing and want an AI layer that instantly turns your existing Confluence/Notion docs into a support bot on Slack and Web.

G2 rating: 4.8/5

Intercom

Best for: Product-led SaaS and e-commerce teams that want a single inbox for chat, email, in-app messaging and AI-driven customer conversations.

Top AI strengths: Fin AI agent (autonomous convo resolution), knowledge sourcing from help docs, seamless AI→human handoffs.

Key caveats: Usage-based AI pricing can spike unexpectedly; vendor lock-in for KB content; seat + AI costs stack.

Pricing: Helpdesk seats $29–$132/seat/mo + $0.99 per AI resolution (min. usage thresholds).

Buyer fit: Pick Intercom if you want an all-in-one product comms platform and can forecast/absorb per-resolution costs.

G2 rating: 4.5/5
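To forecast how seat and AI costs stack, a back-of-the-envelope calculation helps. The sketch below uses the list prices quoted above; the team size and resolution volume are hypothetical, and minimum usage thresholds are not modeled.

```python
def monthly_cost_cents(seats, seat_price_cents, ai_resolutions, resolution_price_cents=99):
    """Seat cost plus per-resolution AI cost, in cents to avoid float rounding."""
    return seats * seat_price_cents + ai_resolutions * resolution_price_cents

# Hypothetical team: 10 seats on the $29 tier, 2,000 AI resolutions in a month.
total = monthly_cost_cents(seats=10, seat_price_cents=2900, ai_resolutions=2000)
print(f"${total / 100:,.2f}")  # $2,270.00
```

In this scenario the AI resolutions ($1,980) cost far more than the seats ($290), which is why resolution volume is the number to forecast first.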

Extended Reading: Best Intercom Alternatives for Customer Service Automation

Zendesk

Best for: Enterprises and large multi-team support orgs that need a full support suite (tickets, KB, chat, analytics) with AI across channels.

Top AI strengths: AI agents & copilots, generative search (natural-language), stale-content detection, omnichannel AI.

Key caveats: Knowledge tends to be siloed inside Zendesk; automation can feel rigid; setup and tuning are resource-intensive.

Pricing: Quote-based; expect enterprise tiers in the ~$100–$150+/seat/mo range for advanced AI features. AI Flows is available through the Zendesk Copilot add-on, which costs $50/agent/mo.

Buyer fit: Pick Zendesk if you’re an enterprise already in that ecosystem and have the team to manage a complex platform.

G2 rating: 4.3/5

Ada

Best for: Large enterprises and mid-market teams focused on ticket deflection, high conversation volumes, and transaction flows (payments, bookings).

Top AI strengths: Strong NLP Reasoning Engine, in-chat transactions, 50+ language support, prebuilt automation playbooks.

Key caveats: Opaque, high custom pricing; long sales/onboarding cycles; needs substantial historical data to perform well.

Pricing: Custom quotes; rough annual ranges widely reported from ~$4k to $70k+ for enterprise deployments.

Buyer fit: Pick Ada if you need global, transactional bots and can commit to a long, high-touch deployment.

G2 rating: 4.6/5

Forethought

Best for: Large enterprises that need AI for ticket triage, intelligent routing, and automated deflection at scale.

Top AI strengths: Advanced triage and routing, custom intent models, KB gap detection, auto-generated automation flows.

Key caveats: Usage/deflection-based pricing penalizes success; heavy setup/tuning; needs ~20k tickets for best results.

Pricing: Quote-based; median annual cost examples around ~$74k (varies by volume & features).

Buyer fit: Pick Forethought if you have huge ticket volume, data to train custom models, and a team to manage AI ops.

G2 rating: 4.3/5

Document360

Best for: Companies with large product documentation libraries that need AI-assisted authoring and search (SaaS, product teams, developer docs).

Top AI strengths: Eddy AI writer/search, SEO suggestions, multilingual auto-translate, integrations to ticketing tools.

Key caveats: AI features locked behind higher tiers; credit-based prompts can cause surprise costs; knowledge is siloed inside Document360.

Pricing: Plan tiers by quote; Business/Enterprise include AI features and integrations; credits included per plan.

Buyer fit: Pick Document360 if documentation is your product and you’ll pay for pro AI writing/search features.

G2 rating: 4.7/5

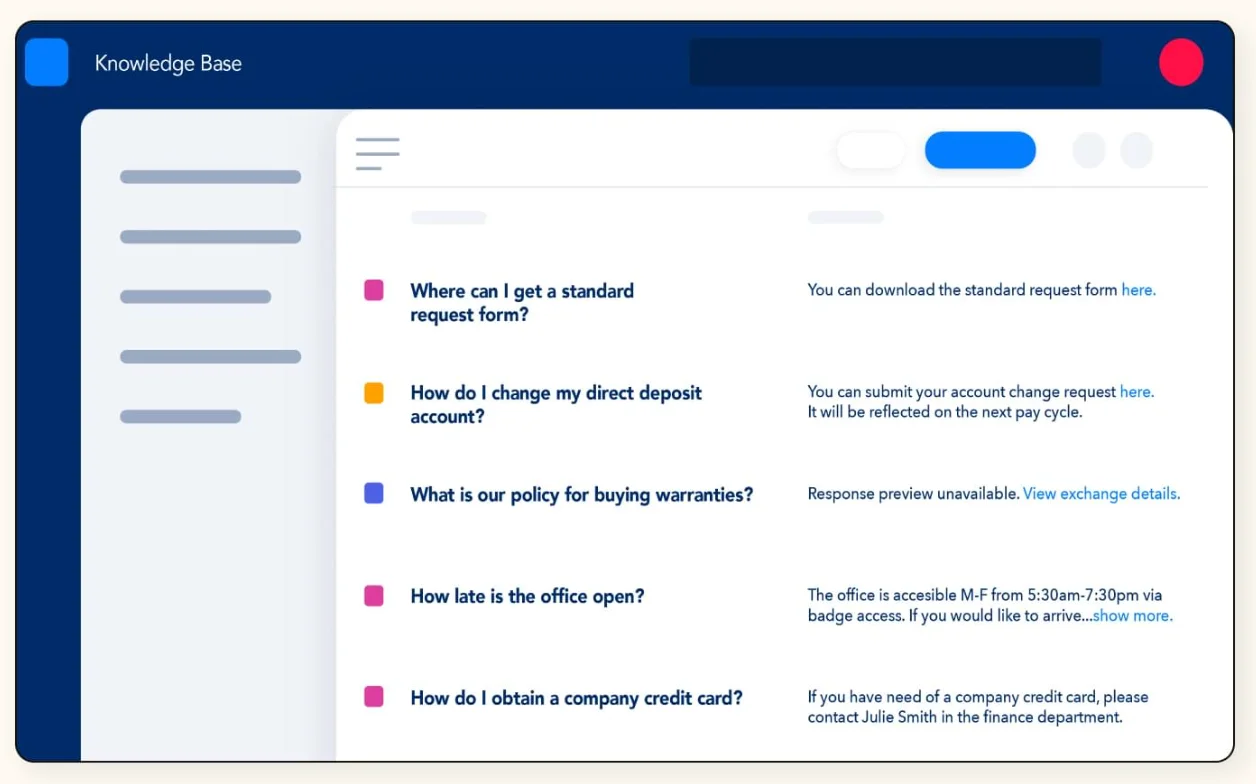

Guru

Best for: Mid→large orgs focused on internal knowledge and answers delivered inside daily tools (Slack, Teams, browser).

Top AI strengths: In-workflow AI answers, personalized results, strong HR/HRIS integrations, content verification tools.

Key caveats: Per-user pricing scales with headcount; 10-user minimum; rigid card structure can feel limiting for unusual workflows.

Pricing: ~$25/user/mo (annual billing), 10-user minimum; enterprise pricing custom.

Buyer fit: Pick Guru for internal enablement when you want answers surfaced where people already work.

G2 rating: 4.7/5

Slite

Best for: Small-to-medium teams that want an intuitive, lightweight wiki with built-in AI search (“Ask”) and clean UX.

Top AI strengths: Natural-language Ask across docs + some external sources, knowledge gap detection, Chrome extension and Slack links.

Key caveats: Per-user pricing scales with headcount; Ask limited on free tiers; integrations and API are weaker than enterprise tools.

Pricing: Free tier available; Standard ~$8/user/mo; Premium ~$16/user/mo; Enterprise custom.

Buyer fit: Pick Slite if you want a nimble, modern knowledge workspace for small teams and easy adoption.

G2 rating: 4.7/5

How to Choose the Right AI Knowledge Base in 2026

With dozens of AI tools claiming to “power support,” the real challenge is separating systems that improve answer quality from those that simply bolt AI onto old workflows. The right AI knowledge base should strengthen retrieval, unify information, and reduce operational overhead, not create another silo.

Use the following criteria to evaluate platforms meaningfully.

Evaluate retrieval quality, not just search

Legacy tools still optimize for search: keywords, article ranking, and filters. AI-native tools optimize for retrieval: understanding intent, pulling precise snippets, and generating answers grounded in source content.

What to test:

- Does the system retrieve the right 5–10% of an article or dump the whole document?

- Can it disambiguate similar queries (“reset password” vs “reset API token”)?

- Does every answer include citations?

If retrieval is weak, everything downstream (agent assist, automation, AI agents) breaks.
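One way to run these tests during a trial is a small evaluation harness: a list of real questions, each paired with the source that should be retrieved, and a hit-rate score. The sketch below assumes a `retriever(question, k)` callable that returns source IDs; the keyword-based retriever and the test cases are hypothetical stand-ins for whatever the platform under evaluation exposes.

```python
def evaluate(retriever, test_cases, k=3):
    """Score a retriever on (question, expected_source) pairs.

    retriever(question, k) must return a list of source ids, best first.
    Returns the hit rate and the questions whose expected source was missed.
    """
    hits, misses = 0, []
    for question, expected in test_cases:
        if expected in retriever(question, k):
            hits += 1
        else:
            misses.append(question)
    return hits / len(test_cases), misses

# Hypothetical retriever used only to exercise the harness.
def keyword_retriever(question, k):
    index = {"password": "auth-guide#password", "token": "api-docs#tokens"}
    found = [src for word, src in index.items() if word in question.lower()]
    return found[:k]

cases = [
    ("How do I reset my password?", "auth-guide#password"),
    ("How do I reset my API token?", "api-docs#tokens"),
    ("Where is my invoice?", "billing-faq#invoices"),
]
hit_rate, misses = evaluate(keyword_retriever, cases)
print(f"hit rate: {hit_rate:.2f}, misses: {misses}")
```

The list of misses is often more useful than the score itself, since it points at either a retrieval weakness or a gap in the content.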

Consider your current support channels (Slack, Teams, or any internal platform)

Your knowledge base should meet users where the work happens. In 2026, support no longer lives in a single help desk. Teams rely on Slack threads, Teams channels, and shared documents.

Questions to ask:

- Can the AI surface answers directly inside Slack or Teams?

- Does retrieval work consistently across chat and web?

- Can agents access verified answers without switching tools?

A knowledge base that only works inside its own UI is already outdated.

More Reading: Using Microsoft Teams as a Knowledge Base -->

Look for real-time content verification

Documentation decays quickly as products change, pricing updates, and new edge cases appear. Without continuous validation, AI outputs drift and accuracy erodes.

Key signals of a mature system:

- Automated stale-content detection

- Alerts for conflicting or duplicate documents

- Version tracking tied to product releases

- Confidence scoring for retrieved answers

Verification is the difference between “fast answers” and “fast wrong answers.”
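Stale-content detection tied to product releases can be sketched in a few lines: flag any article whose last review predates the most recent release in its product area. The article records, product areas, and dates below are hypothetical, and a production system would pull them from the documentation tool and the release tracker.

```python
from datetime import date

# Hypothetical records: article id, product area, last review date.
ARTICLES = [
    {"id": "pricing-overview", "area": "billing", "reviewed": date(2025, 3, 1)},
    {"id": "sso-setup", "area": "auth", "reviewed": date(2025, 9, 15)},
]
# Hypothetical date of the latest release touching each product area.
LAST_RELEASE = {"billing": date(2025, 6, 10), "auth": date(2025, 8, 1)}

def stale_articles(articles, last_release):
    """Flag articles last reviewed before the latest release in their area."""
    return [
        a["id"]
        for a in articles
        if a["area"] in last_release and a["reviewed"] < last_release[a["area"]]
    ]

print(stale_articles(ARTICLES, LAST_RELEASE))  # ['pricing-overview']
```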

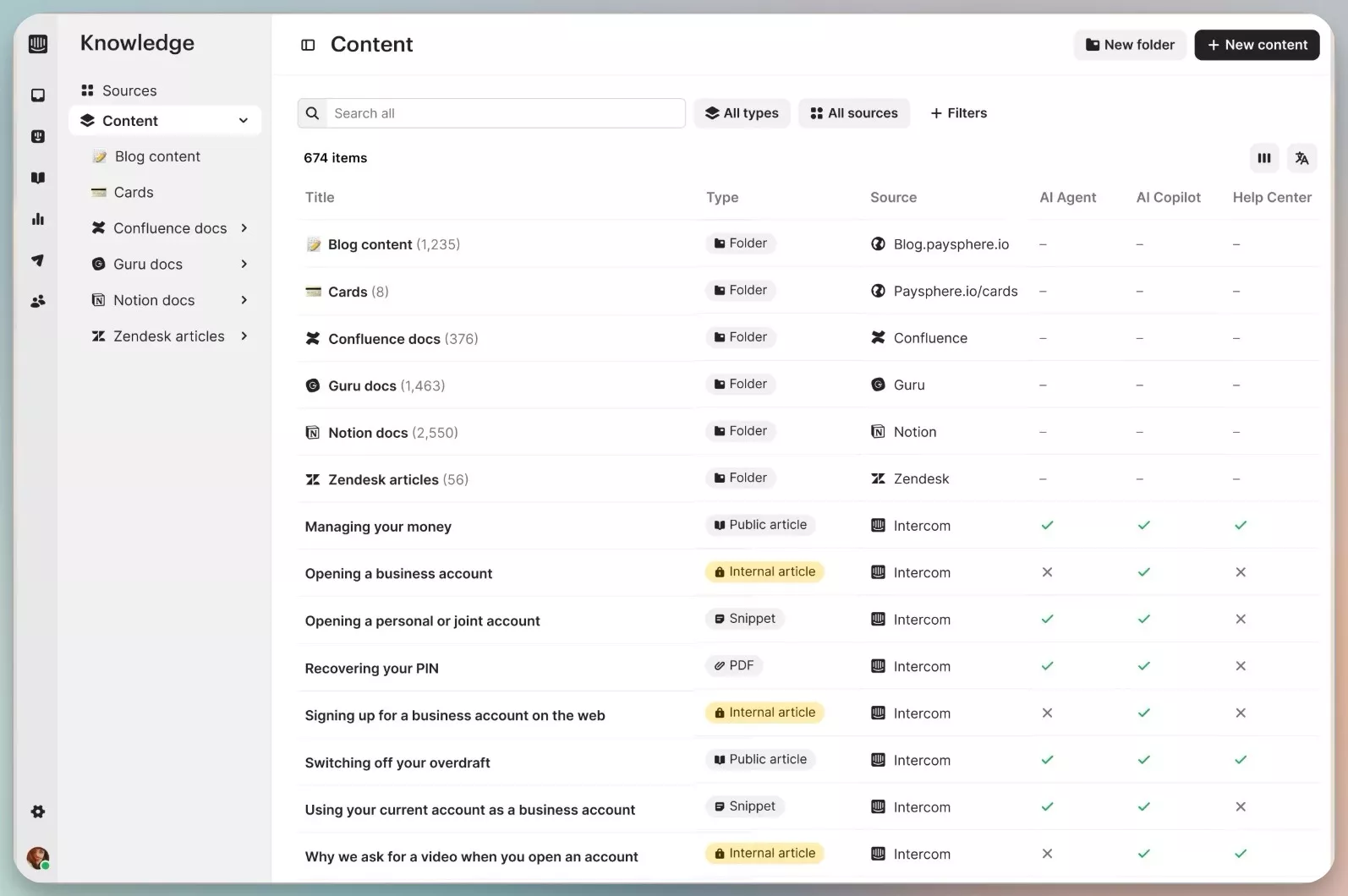

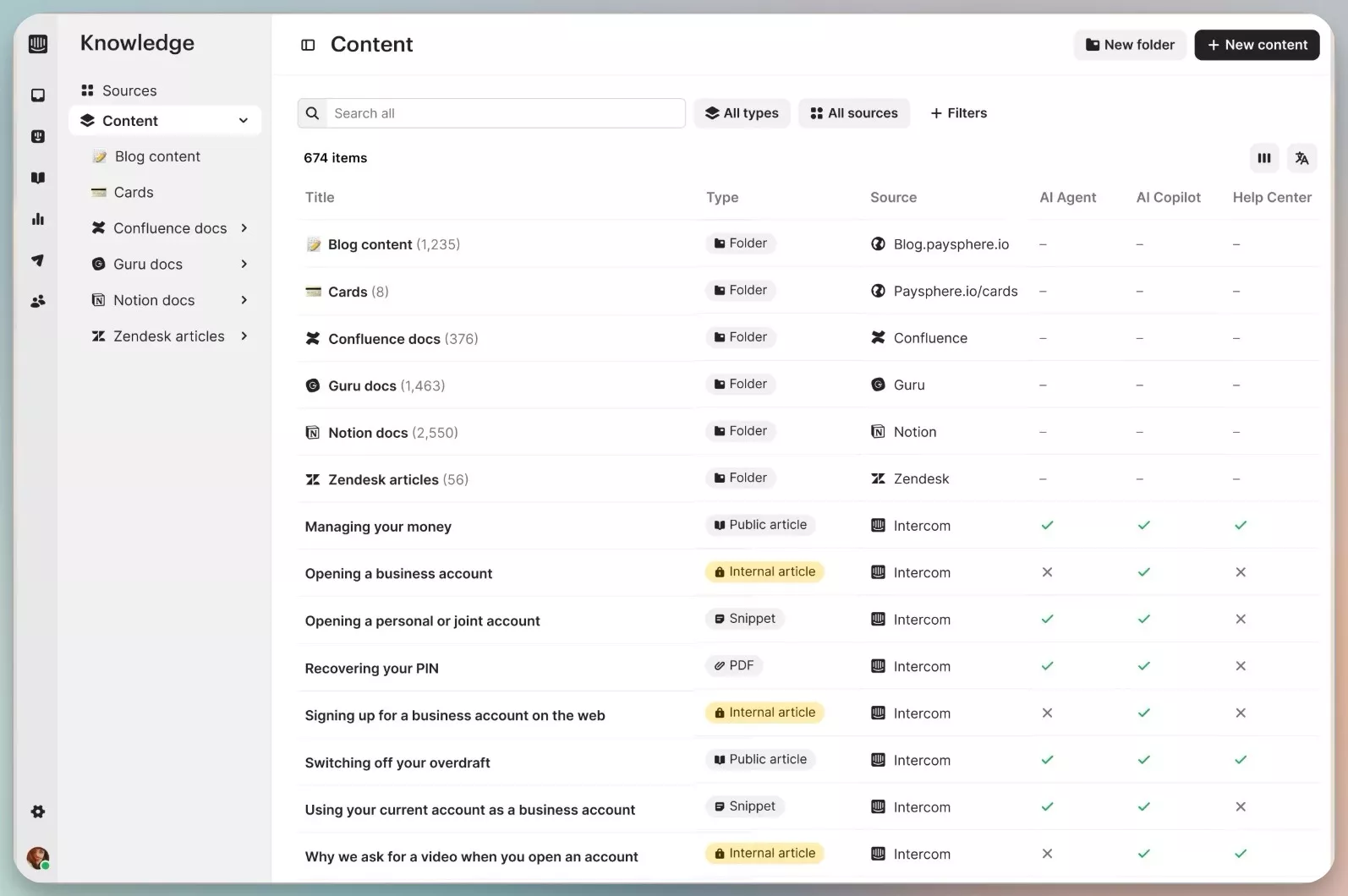

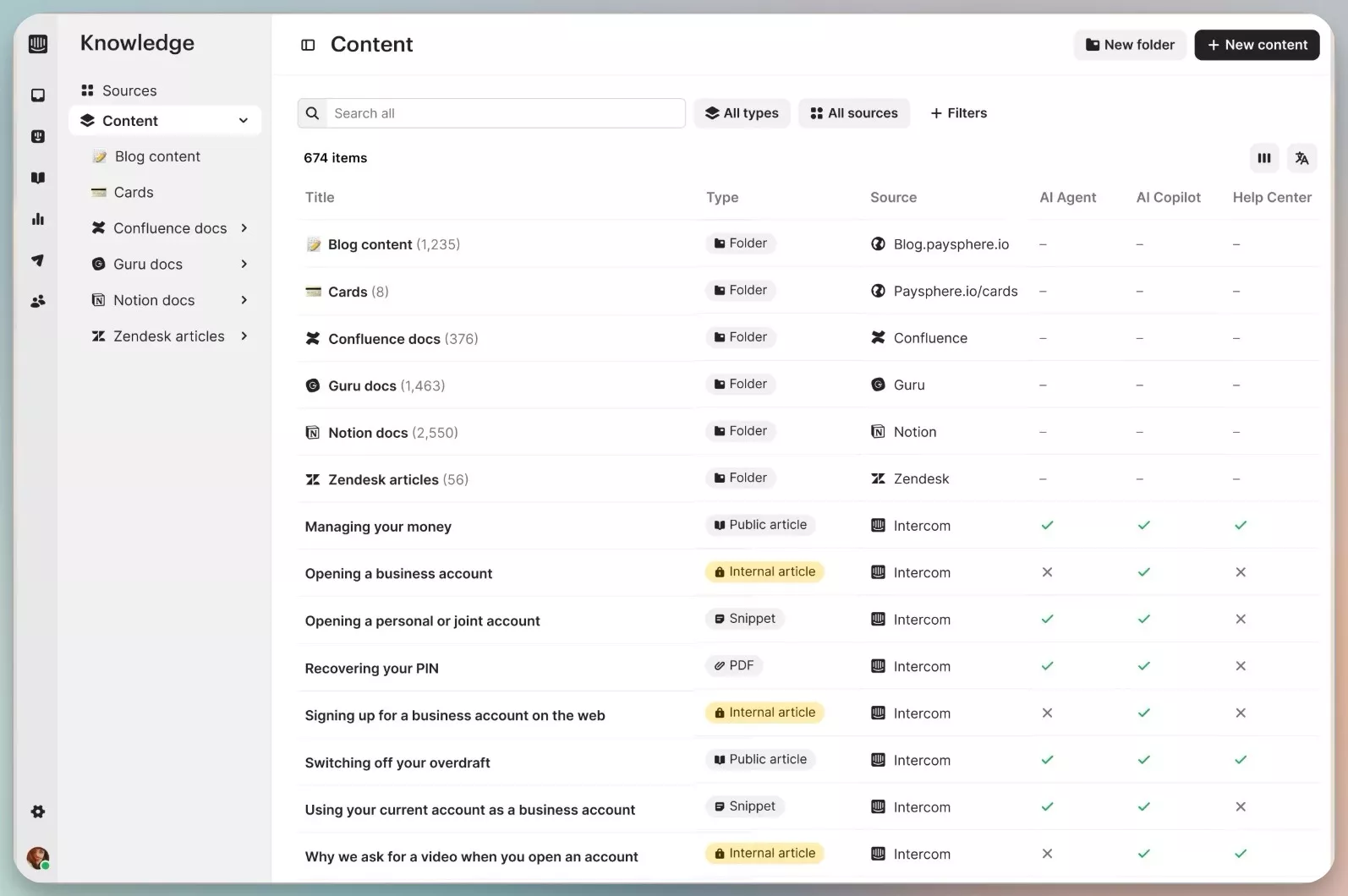

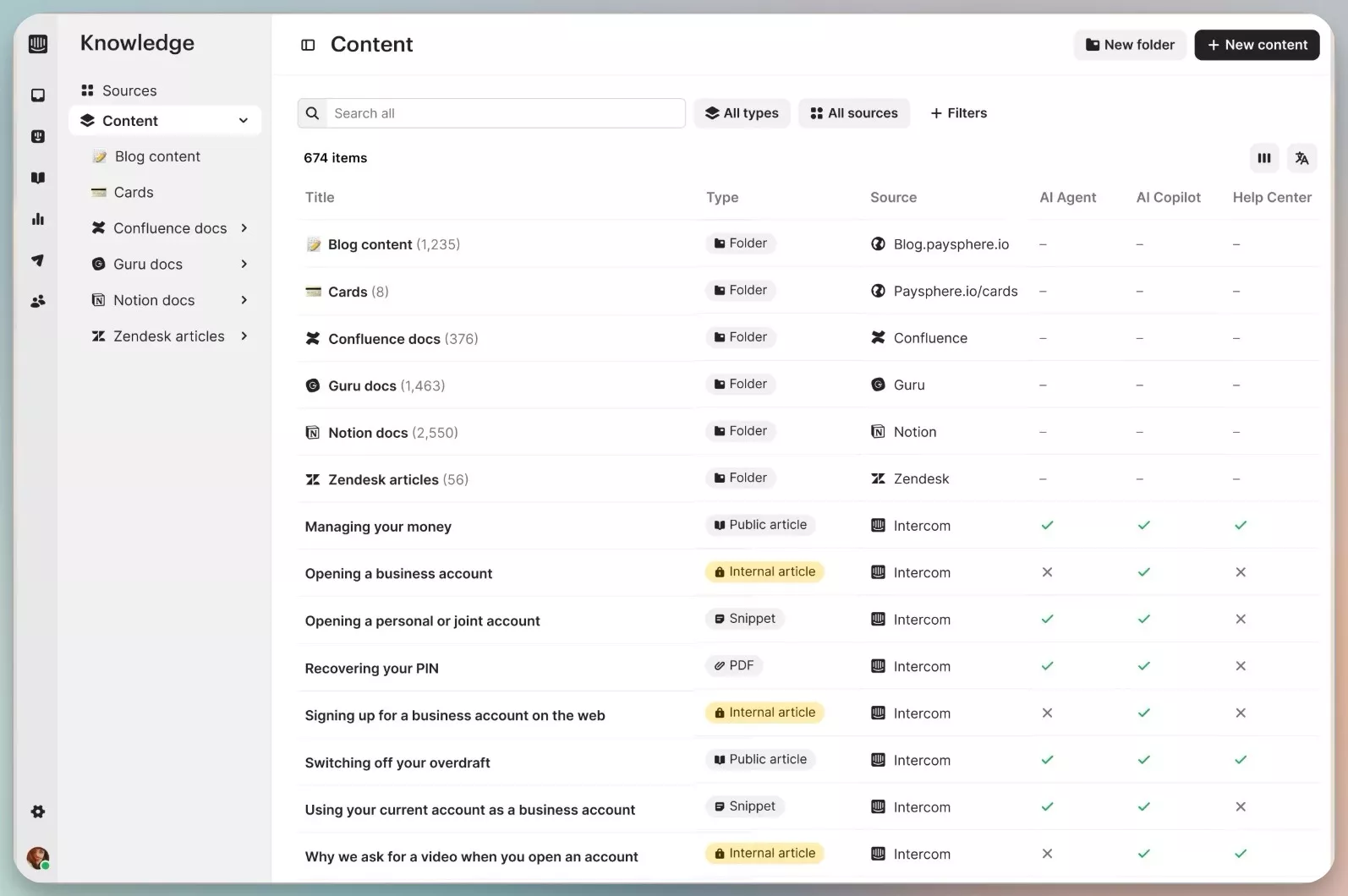

Prioritize integrations over feature lists

Most platforms look similar on paper, but real value comes from where they can pull information from.

Important considerations:

- Does it ingest from tools you actually use (Confluence, Google Docs, Notion, Slack, Git repos, ticketing tools)?

- Can it connect new sources without heavy manual cleanup?

- Does retrieval work across all connected sources, not just the internal wiki?

An AI knowledge base is only as strong as the data it can access.

Think about admin experience and maintenance load

Support teams don’t need another heavy system to manage. The right AI-native platform reduces overhead instead of adding to it.

Evaluate:

- How easy is it to update content?

- Does the system automate tagging, categorization, and organization?

- How much manual cleanup is needed to keep answers reliable?

- Can non-technical teams manage it without vendor dependency?

A good AI knowledge base should reduce operational load, not turn documentation into a part-time job.

Further Reading: A detailed guide on how to create the best B2B AI Knowledge Base -->

AI Tools for Automating Knowledge Base Creation and Delivery

AI-powered knowledge base tools automate both content creation and delivery by ingesting existing docs, tickets, and conversations, then continuously updating answers based on new data. The best tools don’t just index content; they surface the right answers contextually across chat, helpdesk, and collaboration tools, reducing manual maintenance and stale documentation.

Step-by-Step Guide to Getting Started with an AI Knowledge Base

Implementing an AI-native knowledge base isn’t about adding another tool; it’s about reorganizing your knowledge so AI can reliably operate on top of it. This framework helps teams move from scattered documentation to a retrieval-ready system that supports automation across channels.

Step 1: Audit what you already know (and what you don’t)

Begin by mapping your existing knowledge surface area: help center content, internal docs, Slack threads, legacy PDFs, troubleshooting guides, and agent notes. Identify what’s accurate, what’s duplicated, and what’s missing entirely.

Key outcomes at this stage:

- A clear list of canonical sources

- Known gaps (pricing changes, broken workflows, outdated policies)

- A view of where knowledge currently “lives” across tools

The goal isn’t perfection; it’s transparency. AI performs best when you know the boundaries of your knowledge.

Step 2: Define success metrics that AI can actually improve

Ambiguous goals slow implementations down. Set measurable outcomes tied to your real support constraints.

Examples:

- Reduce average handling time by X%

- Deflect Y% of repetitive questions

- Increase first-contact resolution for specific categories

- Improve agent response accuracy or consistency

- Reduce time spent searching for internal answers

These metrics will guide how you structure content, configure retrieval, and measure impact after rollout.
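Before rollout, it helps to compute a baseline for these metrics from existing ticket data. The sketch below shows one possible set of definitions; the ticket fields (`resolved_by`, `handle_minutes`, `contacts`) are hypothetical, and teams often define deflection and handling time differently.

```python
def support_metrics(tickets):
    """Compute baseline metrics from ticket dicts.

    Each ticket has 'resolved_by' ('ai' or 'agent'), 'handle_minutes',
    and 'contacts' (customer contacts needed before resolution).
    """
    total = len(tickets)
    agent_tickets = [t for t in tickets if t["resolved_by"] == "agent"]
    avg_handle = (
        sum(t["handle_minutes"] for t in agent_tickets) / len(agent_tickets)
        if agent_tickets else 0.0
    )
    return {
        "deflection_rate": sum(t["resolved_by"] == "ai" for t in tickets) / total,
        "avg_handle_minutes": avg_handle,
        "first_contact_resolution": sum(t["contacts"] == 1 for t in tickets) / total,
    }

# Hypothetical sample of four tickets.
sample = [
    {"resolved_by": "ai", "handle_minutes": 0, "contacts": 1},
    {"resolved_by": "agent", "handle_minutes": 12, "contacts": 1},
    {"resolved_by": "agent", "handle_minutes": 20, "contacts": 3},
    {"resolved_by": "ai", "handle_minutes": 0, "contacts": 1},
]
print(support_metrics(sample))
```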

Step 3: Import, clean, and connect your existing documentation

AI-native knowledge bases work best when they can ingest all relevant knowledge, not just the parts stored in a single wiki. Connect sources like Confluence, Google Docs, Notion, Git repos, and Slack archives. Keep in mind that knowledge management also plays a key role in enabling better team collaboration.

Then streamline what gets pulled in:

- Remove duplicates

- Fix glaring inconsistencies

- Break long articles into retrieval-friendly chunks

- Add metadata where needed

- Tag content owners for ongoing upkeep

This is where retrieval quality is shaped. Clean, connected knowledge directly leads to better AI answers.
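As one example of the cleanup above, duplicate removal can start with a normalized fingerprint of each document. This sketch only catches copies that are identical once case, punctuation, and spacing are ignored; near-duplicates need fuzzier matching. The document IDs and text are hypothetical.

```python
import hashlib
import re

def fingerprint(text):
    """Hash of the text with case, punctuation, and spacing normalized."""
    normalized = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_duplicates(docs):
    """docs: {doc_id: text}. Returns (duplicate_id, original_id) pairs."""
    seen, duplicates = {}, []
    for doc_id, text in docs.items():
        key = fingerprint(text)
        if key in seen:
            duplicates.append((doc_id, seen[key]))
        else:
            seen[key] = doc_id
    return duplicates

docs = {
    "confluence/reset-password": "To reset your password, open Settings.",
    "notion/password-reset": "to reset your password open settings",
    "gdocs/refunds": "Refunds take five days.",
}
print(find_duplicates(docs))  # [('notion/password-reset', 'confluence/reset-password')]
```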

Step 4: Train AI agents to align with your knowledge patterns

Once content is unified, configure how the AI should behave. This includes:

- Setting tone and response rules

- Choosing which sources are authoritative

- Defining escalation logic to human agents

- Teaching the model product terminology and edge-case workflows

- Establishing which content requires strict citations

AI agents learn your patterns quickly when they have a consistent source of truth and guardrails for how to use it.
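These behavior rules usually end up as configuration plus a small decision function. The sketch below is one hypothetical shape for that: the field names, source labels, topics, and the 0.7 threshold are all assumptions for illustration, not settings from any particular platform.

```python
from dataclasses import dataclass

@dataclass
class AgentConfig:
    # Sources the AI may treat as authoritative (hypothetical labels).
    authoritative_sources: frozenset = frozenset({"help-center", "confluence"})
    # Below this confidence, hand off to a human agent.
    min_confidence: float = 0.7
    # Topics where an answer must cite an authoritative source.
    strict_citation_topics: frozenset = frozenset({"pricing", "security"})

def decide(config, topic, confidence, cited_sources):
    """Return 'answer' or 'escalate' for one drafted AI reply."""
    trusted = [s for s in cited_sources if s in config.authoritative_sources]
    if confidence < config.min_confidence:
        return "escalate"
    if topic in config.strict_citation_topics and not trusted:
        return "escalate"
    return "answer"

config = AgentConfig()
print(decide(config, "pricing", 0.9, ["slack-archive"]))   # escalate
print(decide(config, "pricing", 0.9, ["help-center"]))     # answer
print(decide(config, "onboarding", 0.5, ["help-center"]))  # escalate
```

Keeping the rules in data rather than in prompts makes them easier to review and change as the knowledge base matures.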

Step 5: Roll out to support teams and measure real impact

Don’t launch everywhere at once. Start with a subset of channels (Slack, Teams, another communications platform, or your help center), then expand.

During rollout, track:

- Deflection rate

- Resolution accuracy

- Average handling time

- Agent adoption and confidence

- Questions the AI couldn’t answer (knowledge gaps)

Use these signals to refine your content and improve retrieval. Within weeks, you’ll see clearer patterns of what needs updating and how agents rely on the system.
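Knowledge gaps are the easiest of these signals to act on. A minimal sketch, assuming unanswered questions are logged with a topic label (the log format and entries here are hypothetical), is to count them by topic and write the missing articles for the largest groups first.

```python
from collections import Counter

def top_gaps(unanswered, n=3):
    """Count unanswered questions by topic label, most frequent first."""
    return Counter(topic for topic, _question in unanswered).most_common(n)

# Hypothetical log of (topic, question) pairs the AI could not answer.
log = [
    ("sso", "Does SSO work with Okta?"),
    ("billing", "Can I pay by invoice?"),
    ("sso", "How do I enable SAML?"),
    ("sso", "Is SCIM supported?"),
]
print(top_gaps(log))  # [('sso', 3), ('billing', 1)]
```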

Learn about all the Knowledge Base integrations provided by Enjo -->

The goal is a closed feedback loop where each answered question strengthens the next.

What is AI Knowledge Base Software in 2026?

AI knowledge base software going into 2026 goes beyond hosting articles. It acts as a retrieval system that understands questions, pulls the right information, and delivers verified answers across every support channel.

The shift is simple: instead of forcing people to search, AI assembles the answer for them. This is driven by two realities:

- Enterprise knowledge is spread across too many tools, and

- Modern AI models need structured, high-quality information to perform reliably.

As a result, the knowledge base has become the intelligence layer that powers AI agents, reduces ticket load, and keeps organizational knowledge consistent.

Detailed Guide on: AI Knowledge Base -->

How AI Knowledge Bases Work Behind the Scenes

Modern AI knowledge bases combine three core components:

- Retrieval engine: Uses semantic search and vector indexing to understand intent, not just keywords.

- Reasoning layer: Generates answers grounded in specific, cited content rather than guessing.

- Freshness and consistency checks: Automatically flags outdated, conflicting, or missing information.

Together, they turn documentation into a dependable source of truth for both humans and AI systems.

Why 2026 Models Demand Better Information Architecture

LLMs are more powerful in 2026, but they’re also more sensitive to messy knowledge. Poor structure leads directly to unreliable answers.

Key requirements:

- Clear chunking and metadata so retrieval engines surface the right sections

- Consistent terminology across teams and documents

- Reduced duplication and cleaner version control

- Connected sources instead of scattered files across tools

Strong information architecture is now the primary driver of AI answer quality.

AI-Native vs AI-Assisted: The Real Distinction

AI-Assisted Knowledge Bases

Traditional systems with AI features added on top. They help teams search and maintain content but don’t fundamentally change support workflows.

Traits: Manual structuring, limited unification across tools, and moderate impact on automation.

AI-Native Knowledge Bases

Built to power AI from the ground up. Retrieval-first, multi-source ingestion, automated content validation, and answer generation with guardrails.

Traits: Reliable AI agents, unified knowledge, and documentation that actively drives automation.

Detailed Reading on AI Native vs AI Assisted here -->

Why Support and Ops Teams are Moving to AI-Native Knowledge Bases

Support and operations teams are shifting to AI-native knowledge bases because traditional systems can’t keep up with rising ticket volume, fragmented information, and the expectations created by modern AI agents. AI-native platforms turn documentation into an operational engine: they retrieve, assemble, and verify answers automatically, reducing manual effort across every channel.

This shift isn’t about “adding AI.” It’s about building knowledge in a way AI can reliably use.

Reduce resolution times with retrieval-aware answers

AI-native systems understand the intent behind a question and retrieve only the relevant parts of your knowledge, not an entire article. This produces faster, more accurate responses for both customers and agents. Instead of digging through docs or escalating to senior staff, the answer arrives instantly, grounded in trusted content.

The result: shorter queues, fewer back-and-forth messages, and far less variation in agent performance.

Enable self-service that doesn’t break under edge cases

Most self-service tools fail when customers phrase questions unexpectedly or ask something not covered in a perfectly structured help article. Retrieval-aware systems handle this better. They map the question to the closest matching knowledge, assemble an answer, and cite the underlying source.

Even edge cases, partial questions, unfamiliar terminology, long-tail issues are handled more gracefully. Self-service becomes dependable instead of risky.

Automate content creation, tagging, and upkeep

AI-native platforms don’t just answer questions, they help maintain the knowledge itself. They auto-tag documents, suggest missing articles, detect contradictions, and flag outdated content based on product changes or new ticket patterns.

Instead of periodic documentation “cleanups,” teams get continuous maintenance with far less manual effort.

Scale support without scaling headcount

As ticket volume grows, legacy knowledge bases force teams to add seats or rely on more manual triage. AI-native systems invert that dynamic. Retrieval, reasoning, and verification happen automatically, allowing AI agents and assisted workflows to resolve a larger share of incoming issues.

Support teams can absorb growth without constant headcount increases, improving both cost predictability and team efficiency.

Improve agent confidence with verified responses

AI-native systems generate answers with visible citations and source references, giving agents confidence that the AI is pulling from approved, up-to-date content. This eliminates the uncertainty that comes from hallucinated or outdated answers.

New agents onboard faster, experienced agents rely less on tribal knowledge, and overall accuracy improves.

The 7 Best AI Knowledge Base Platforms for 2026

Enjo AI

Best for: IT/Ops teams (internal) and Customer Support orgs that want to layer AI automation over Slack, Teams, and WebChat without paying "per-seat" penalties for human agents.

Top AI strengths: Instant Knowledge Sync (auto-ingests Notion, Confluence, Website URLs), "Actionable" AI (triggers workflows), and unified conversational support across Web & Chat apps.

Key caveats: Core plans include strict quotas on monthly AI replies and Knowledge Blocks, requiring close monitoring for high-volume operations;

Pricing: Disruptive model. Free Tier available; Starter $95/mo; Standard $490/mo.

Crucial nuance: Plans include unlimited human agent seats and unlimited AI agents/channels.

Buyer fit: Pick Enjo if you hate per-agent pricing and want an AI layer that instantly turns your existing Confluence/Notion docs into a support bot on Slack and Web.

G2 rating: 4.8/5

Intercom

Best for: Product-led SaaS and e-commerce teams that want a single inbox for chat, email, in-app messaging and AI-driven customer conversations.

Top AI strengths: Fin AI agent (autonomous convo resolution), knowledge sourcing from help docs, seamless AI→human handoffs.

Key caveats: Usage-based AI pricing can spike unexpectedly; vendor lock-in for KB content; seat + AI costs stack.

Pricing: Helpdesk seats $29–$132/seat/mo + $0.99 per AI resolution (min. usage thresholds).

Buyer fit: Pick Intercom if you want an all-in-one product comms platform and can forecast/absorb per-resolution costs.

G2 rating: 4.5/5

Extended Reading: Best Intercom Alternatives for Customer Service Automation

Zendesk

Best for: Enterprises and large multi-team support orgs that need a full support suite (tickets, KB, chat, analytics) with AI across channels.

Top AI strengths: AI agents & copilots, generative search (natural-language), stale-content detection, omnichannel AI.

Key caveats: Knowledge tends to be siloed inside Zendesk; automation can feel rigid; setup and tuning are resource-intensive.

Pricing: Quote-based; expect enterprise tiers in the ~$100–$150+/seat/mo range for advanced AI features. AI Flows comes with add-on - Zendesk Copilot which is $50/agent for a month.

Buyer fit: Pick Zendesk if you’re an enterprise already in that ecosystem and have the team to manage a complex platform.

G2 rating: 4.3/5

Ada

Best for: Large enterprises and mid-market teams focused on ticket deflection, high conversation volumes, and transaction flows (payments, bookings).

Top AI strengths: Strong NLP Reasoning Engine, in-chat transactions, 50+ language support, prebuilt automation playbooks.

Key caveats: Opaque, high custom pricing; long sales/onboarding cycles; needs substantial historical data to perform well.

Pricing: Custom quotes; rough annual ranges widely reported from ~$4k to $70k+ for enterprise deployments.

Buyer fit: Pick Ada if you need global, transactional bots and can commit to a long, high-touch deployment.

G2 Rating: 4.6/5

Forethought

Best for: Large enterprises that need AI for ticket triage, intelligent routing, and automated deflection at scale.

Top AI strengths: Advanced triage and routing, custom intent models, KB gap detection, auto-generated automation flows.

Key caveats: Usage/deflection-based pricing penalizes success; heavy setup/tuning; needs ~20k tickets for best results.

Pricing: Quote-based; median annual cost examples around ~$74k (varies by volume & features).

Buyer fit: Pick Forethought if you have huge ticket volume, data to train custom models, and a team to manage AI ops.

G2 Rating: 4.3/5

Document360

Best for: Companies with large product documentation libraries that need AI-assisted authoring and search (SaaS, product teams, developer docs).

Top AI strengths: Eddy AI writer/search, SEO suggestions, multilingual auto-translate, integrations to ticketing tools.

Key caveats: AI features locked behind higher tiers; credit-based prompts can cause surprise costs; knowledge is siloed inside Document360.

Pricing: Plan tiers by quote; Business/Enterprise include AI features and integrations; credits included per plan.

Buyer fit: Pick Document360 if documentation is your product and you’ll pay for pro AI writing/search features.

G2 rating: 4.7/5

Guru

Best for: Mid→large orgs focused on internal knowledge and answers delivered inside daily tools (Slack, Teams, browser).

Top AI strengths: In-workflow AI answers, personalized results, strong HR/HRIS integrations, content verification tools.

Key caveats: Per-user pricing scales with headcount; 10-user minimum; rigid card structure can feel limiting for unusual workflows.

Pricing: ~$25/user/mo (annual billing), 10-user minimum; enterprise pricing custom.

Buyer fit: Pick Guru for internal enablement when you want answers surfaced where people already work.

G2 rating: 4.7/5

Slite

Best for: Small-to-medium teams that want an intuitive, lightweight wiki with built-in AI search (“Ask”) and clean UX.

Top AI strengths: Natural-language Ask across docs + some external sources, knowledge gap detection, Chrome extension and Slack links.

Key caveats: Per-user pricing scales with headcount; Ask limited on free tiers; integrations and API are weaker than enterprise tools.

Pricing: Free tier available; Standard ~$8/user/mo; Premium ~$16/user/mo; Enterprise custom.

Buyer fit: Pick Slite if you want a nimble, modern knowledge workspace for small teams and easy adoption.

G2 rating: 4.7/5

How to Choose the Right AI Knowledge Base in 2026

With dozens of AI tools claiming to “power support,” the real challenge is separating systems that improve answer quality from those that simply bolt AI onto old workflows. The right AI knowledge base should strengthen retrieval, unify information, and reduce operational overhead and not create another silo.

Use the following criteria to evaluate platforms meaningfully.

Evaluate retrieval quality, not just search

Legacy tools still optimize for search: keywords, article ranking, and filters. AI-native tools optimize for retrieval: understanding intent, pulling precise snippets, and generating answers grounded in source content.

What to test:

- Does the system retrieve the right 5–10% of an article or dump the whole document?

- Can it disambiguate similar queries (“reset password” vs “reset API token”)?

- Does every answer include citations?

If retrieval is weak, everything downstream, agent assist, automation, AI agents — breaks.

Consider your current support channels (Slack, Teams, or any internal platform)

Your knowledge base should meet users where the work happens. In 2026, support no longer lives in a single help desk. Teams rely on Slack threads, Teams channels, and shared documents.

Questions to ask:

- Can the AI surface answers directly inside Slack or Teams?

- Does retrieval work consistently across chat, and web?

- Can agents access verified answers without switching tools?

A knowledge base that only works inside its own UI is already outdated.

More Reading: Using Microsoft Teams as a Knowledge Base -->

Look for real-time content verification

Documentation decays quickly - product changes, pricing updates, new edge cases. Without continuous validation, AI outputs drift and accuracy erodes.

Key signals of a mature system:

- Automated stale-content detection

- Alerts for conflicting or duplicate documents

- Version tracking tied to product releases

- Confidence scoring for retrieved answers

Verification is the difference between “fast answers” and “fast wrong answers.”

Prioritize integrations over feature lists

Most platforms look similar on paper, but real value comes from where they can pull information from.

Important considerations:

- Does it ingest from tools you actually use (Confluence, Google Docs, Notion, Slack, Git repos, ticketing tools)?

- Can it connect new sources without heavy manual cleanup?

- Does retrieval work across all connected sources, not just the internal wiki?

An AI knowledge base is only as strong as the data it can access.

Think about admin experience and maintenance load

Support teams don’t need another heavy system to manage. The right AI-native platform reduces overhead instead of adding to it.

Evaluate:

- How easy is it to update content?

- Does the system automate tagging, categorization, and organization?

- How much manual cleanup is needed to keep answers reliable?

- Can non-technical teams manage it without vendor dependency?

A good AI knowledge base should reduce operational load, not turn documentation into a part-time job.

Further Reading: A detailed guide on how to create the best B2B AI Knowledge Base -->

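AI Tools for Automating Knowledge Base Creation and Delivery

AI-powered knowledge base tools automate both content creation and delivery by ingesting existing docs, tickets, and conversations, then continuously updating answers based on new data. The best tools don’t just index content; they surface the right answers contextually across chat, helpdesk, and collaboration tools, reducing manual maintenance and stale documentation.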

Step-by-Step Guide to Getting Started with an AI Knowledge Base

Implementing an AI-native knowledge base isn’t about adding another tool; it’s about reorganizing your knowledge so AI can reliably operate on top of it. This framework helps teams move from scattered documentation to a retrieval-ready system that supports automation across channels.

Step 1: Audit what you already know (and what you don’t)

Begin by mapping your existing knowledge surface area: help center content, internal docs, Slack threads, legacy PDFs, troubleshooting guides, and agent notes. Identify what’s accurate, what’s duplicated, and what’s missing entirely.

Key outcomes at this stage:

- A clear list of canonical sources

- Known gaps (pricing changes, broken workflows, outdated policies)

- A view of where knowledge currently “lives” across tools

The goal isn’t perfection; it’s transparency. AI performs best when you know the boundaries of your knowledge.

Step 2: Define success metrics that AI can actually improve

Ambiguous goals slow implementations down. Set measurable outcomes tied to your real support constraints.

Examples:

- Reduce average handling time by X%

- Deflect Y% of repetitive questions

- Increase first-contact resolution for specific categories

- Improve agent response accuracy or consistency

- Reduce time spent searching for internal answers

These metrics will guide how you structure content, configure retrieval, and measure impact after rollout.

Step 3: Import, clean, and connect your existing documentation

AI-native knowledge bases work best when they can ingest all relevant knowledge, not just the parts stored in a single wiki. Connect sources like Confluence, Google Docs, Notion, Git repos, and Slack archives. Keep in mind that knowledge management also plays a key role in enabling better team collaboration.

Then streamline what gets pulled in:

- Remove duplicates

- Fix glaring inconsistencies

- Break long articles into retrieval-friendly chunks

- Add metadata where needed

- Tag content owners for ongoing upkeep

This is where retrieval quality is shaped. Clean, connected knowledge directly leads to better AI answers.
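As a rough illustration of the chunking and metadata steps, the sketch below splits an article on blank lines and packs paragraphs into chunks of up to `max_chars` characters, attaching an owner to each chunk. The function name, field names, and the 500-character limit are assumptions for this example; most platforms handle chunking internally and may use token counts or headings instead.

```python
def chunk_article(doc_id, text, owner, max_chars=500):
    """Split an article into paragraph-aligned chunks with basic metadata.

    A single paragraph longer than max_chars is kept whole rather than cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks = []
    current = ""
    for p in paragraphs:
        # Start a new chunk when adding this paragraph would exceed the limit.
        if current and len(current) + len(p) + 2 > max_chars:
            chunks.append(current)
            current = p
        else:
            current = f"{current}\n\n{p}" if current else p
    if current:
        chunks.append(current)
    return [
        {"doc_id": doc_id, "chunk_index": i, "owner": owner, "text": c}
        for i, c in enumerate(chunks)
    ]
```

Keeping chunks aligned to paragraph boundaries means each retrieved passage still reads as a complete thought, and the `owner` field gives stale-content alerts somewhere to go.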

Step 4: Train AI agents to align with your knowledge patterns

Once content is unified, configure how the AI should behave. This includes:

- Setting tone and response rules

- Choosing which sources are authoritative

- Defining escalation logic to human agents

- Teaching the model product terminology and edge-case workflows

- Establishing which content requires strict citations

AI agents learn your patterns quickly when they have a consistent source of truth and guardrails for how to use it.
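The guardrails above often come down to a few explicit rules. The following is a minimal sketch, assuming the platform exposes a confidence score and a list of cited sources for each draft answer. `AgentPolicy`, `decide`, and the 0.7 threshold are hypothetical names and values chosen for illustration, not a real product's configuration schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical guardrail settings for an AI support agent."""
    authoritative_sources: frozenset
    min_confidence: float = 0.7      # assumed threshold; tune per team
    require_citations: bool = True


def decide(policy, confidence, cited_sources):
    """Return 'answer' or 'escalate' for a draft AI response."""
    trusted = [s for s in cited_sources if s in policy.authoritative_sources]
    # Escalate when citations are required but none come from an authoritative source.
    if policy.require_citations and not trusted:
        return "escalate"
    # Escalate when the retrieval confidence is below the configured floor.
    if confidence < policy.min_confidence:
        return "escalate"
    return "answer"
```

For example, with `AgentPolicy(authoritative_sources=frozenset({"help-center"}))`, a confident answer citing only a Slack archive would be escalated to a human instead of being sent.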

Step 5: Roll out to support teams and measure real impact

Don’t launch everywhere at once. Start with a subset of channels, such as Slack, Teams, another communications platform, or your help center, then expand.

During rollout, track:

- Deflection rate

- Resolution accuracy

- Average handling time

- Agent adoption and confidence

- Questions the AI couldn’t answer (knowledge gaps)

Use these signals to refine your content and improve retrieval. Within weeks, you’ll see clearer patterns of what needs updating and how agents rely on the system.
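A few of these rollout signals can be computed from a plain interaction log. The sketch below assumes each interaction is a dictionary with hypothetical `question`, `resolved_by_ai`, `answer_found`, and `handle_seconds` fields; real exports will differ by platform, and resolution accuracy and agent confidence usually need human review or surveys rather than a log query.

```python
def rollout_metrics(interactions):
    """Summarize deflection rate, average handling time, and unanswered questions."""
    total = len(interactions)
    if total == 0:
        return {"deflection_rate": 0.0, "avg_handle_seconds": 0.0, "knowledge_gaps": []}
    deflected = sum(1 for i in interactions if i["resolved_by_ai"])
    # Questions with no retrievable answer point at missing or unindexed content.
    gaps = [i["question"] for i in interactions if not i["answer_found"]]
    avg_handle = sum(i["handle_seconds"] for i in interactions) / total
    return {
        "deflection_rate": deflected / total,
        "avg_handle_seconds": avg_handle,
        "knowledge_gaps": gaps,
    }
```

Reviewing the `knowledge_gaps` list weekly is one straightforward way to decide which articles to write or update next.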

Learn about all the Knowledge Base integrations provided by Enjo -->

The goal is a closed feedback loop where each answered question strengthens the next.

AI Knowledge Base - FAQs

How long does it take to deploy an AI-native knowledge base?

Typically 2 to 6 weeks, depending on how scattered your documentation is. Ingestion is fast; cleanup and structuring take the most time. Teams with organized content can go live much sooner.

Do LLM-powered knowledge bases replace human support?

No. They automate repetitive, well-documented questions, not complex or judgment-heavy issues. Human agents handle nuance, exceptions, and escalations; AI handles everything predictable.

What are the best AI-driven customer support knowledge bases?

The best AI-driven customer support knowledge bases combine real-time retrieval, automated updates from tickets, and contextual delivery across chat and helpdesk tools. They focus on fast resolution, consistency, and reducing agent dependency on manual searches.

What’s the ROI of moving from traditional search to AI retrieval?

Teams usually see improvements within 30–60 days: faster resolutions, higher deflection, and less manual triage. Retrieval reduces agent effort and eliminates the performance gap between new and senior agents.

How secure are AI knowledge bases with enterprise content?

Modern systems support access controls, encryption, SSO, audit logs, and compliance standards (SOC 2, ISO 27001). A properly configured system retrieves only content the user is permitted to access and keeps each tenant’s data isolated.

Which companies offer the best knowledge base integrations for live answers?

Companies offering strong knowledge base integrations for live answers support real-time delivery inside tools like Slack, Microsoft Teams, and helpdesks. The most effective platforms, such as Enjo AI, integrate deeply with workflows so answers appear during conversations, not after manual searches.

Are self-hosted LLM options viable for documentation systems?

Yes, for companies with strict data requirements and the resources to manage infrastructure. However, they’re more complex and costly to maintain. Most teams opt for hosted, enterprise-grade platforms with strong governance controls.

What is AI Knowledge Base Software in 2026?

AI knowledge base software going into 2026 goes beyond hosting articles. It acts as a retrieval system that understands questions, pulls the right information, and delivers verified answers across every support channel.

The shift is simple: instead of forcing people to search, AI assembles the answer for them. This is driven by two realities:

- Enterprise knowledge is spread across too many tools, and

- Modern AI models need structured, high-quality information to perform reliably.

As a result, the knowledge base has become the intelligence layer that powers AI agents, reduces ticket load, and keeps organizational knowledge consistent.

Detailed Guide on: AI Knowledge Base -->

How AI Knowledge Bases Work Behind the Scenes

Modern AI knowledge bases combine three core components:

- Retrieval engine: Uses semantic search and vector indexing to understand intent, not just keywords.

- Reasoning layer: Generates answers grounded in specific, cited content rather than guessing.

- Freshness and consistency checks: Automatically flags outdated, conflicting, or missing information.

Together, they turn documentation into a dependable source of truth for both humans and AI systems.

Why 2026 Models Demand Better Information Architecture

LLMs are more powerful in 2026, but they’re also more sensitive to messy knowledge. Poor structure leads directly to unreliable answers.

Key requirements:

- Clear chunking and metadata so retrieval engines surface the right sections

- Consistent terminology across teams and documents

- Reduced duplication and cleaner version control

- Connected sources instead of scattered files across tools

Strong information architecture is now the primary driver of AI answer quality.

AI-Native vs AI-Assisted: The Real Distinction

AI-Assisted Knowledge Bases

Traditional systems with AI features added on top. They help teams search and maintain content but don’t fundamentally change support workflows.

Traits: Manual structuring, limited unification across tools, and moderate impact on automation.

AI-Native Knowledge Bases

Built to power AI from the ground up. Retrieval-first, multi-source ingestion, automated content validation, and answer generation with guardrails.

Traits: Reliable AI agents, unified knowledge, and documentation that actively drives automation.

Detailed Reading on AI Native vs AI Assisted here -->

Why Support and Ops Teams are Moving to AI-Native Knowledge Bases

Support and operations teams are shifting to AI-native knowledge bases because traditional systems can’t keep up with rising ticket volume, fragmented information, and the expectations created by modern AI agents. AI-native platforms turn documentation into an operational engine: they retrieve, assemble, and verify answers automatically, reducing manual effort across every channel.

This shift isn’t about “adding AI.” It’s about building knowledge in a way AI can reliably use.

Reduce resolution times with retrieval-aware answers

AI-native systems understand the intent behind a question and retrieve only the relevant parts of your knowledge, not an entire article. This produces faster, more accurate responses for both customers and agents. Instead of digging through docs or escalating to senior staff, the answer arrives instantly, grounded in trusted content.

The result: shorter queues, fewer back-and-forth messages, and far less variation in agent performance.

Enable self-service that doesn’t break under edge cases

Most self-service tools fail when customers phrase questions unexpectedly or ask something not covered in a perfectly structured help article. Retrieval-aware systems handle this better. They map the question to the closest matching knowledge, assemble an answer, and cite the underlying source.

Even edge cases, partial questions, unfamiliar terminology, long-tail issues are handled more gracefully. Self-service becomes dependable instead of risky.

Automate content creation, tagging, and upkeep

AI-native platforms don’t just answer questions, they help maintain the knowledge itself. They auto-tag documents, suggest missing articles, detect contradictions, and flag outdated content based on product changes or new ticket patterns.

Instead of periodic documentation “cleanups,” teams get continuous maintenance with far less manual effort.

Scale support without scaling headcount

As ticket volume grows, legacy knowledge bases force teams to add seats or rely on more manual triage. AI-native systems invert that dynamic. Retrieval, reasoning, and verification happen automatically, allowing AI agents and assisted workflows to resolve a larger share of incoming issues.

Support teams can absorb growth without constant headcount increases, improving both cost predictability and team efficiency.

Improve agent confidence with verified responses

AI-native systems generate answers with visible citations and source references, giving agents confidence that the AI is pulling from approved, up-to-date content. This eliminates the uncertainty that comes from hallucinated or outdated answers.

New agents onboard faster, experienced agents rely less on tribal knowledge, and overall accuracy improves.

The 7 Best AI Knowledge Base Platforms for 2026

Enjo AI

Best for: IT/Ops teams (internal) and Customer Support orgs that want to layer AI automation over Slack, Teams, and WebChat without paying "per-seat" penalties for human agents.

Top AI strengths: Instant Knowledge Sync (auto-ingests Notion, Confluence, Website URLs), "Actionable" AI (triggers workflows), and unified conversational support across Web & Chat apps.

Key caveats: Core plans include strict quotas on monthly AI replies and Knowledge Blocks, requiring close monitoring for high-volume operations;

Pricing: Disruptive model. Free Tier available; Starter $95/mo; Standard $490/mo.

Crucial nuance: Plans include unlimited human agent seats and unlimited AI agents/channels.

Buyer fit: Pick Enjo if you hate per-agent pricing and want an AI layer that instantly turns your existing Confluence/Notion docs into a support bot on Slack and Web.

G2 rating: 4.8/5

Intercom

Best for: Product-led SaaS and e-commerce teams that want a single inbox for chat, email, in-app messaging and AI-driven customer conversations.

Top AI strengths: Fin AI agent (autonomous convo resolution), knowledge sourcing from help docs, seamless AI→human handoffs.

Key caveats: Usage-based AI pricing can spike unexpectedly; vendor lock-in for KB content; seat + AI costs stack.

Pricing: Helpdesk seats $29–$132/seat/mo + $0.99 per AI resolution (min. usage thresholds).

Buyer fit: Pick Intercom if you want an all-in-one product comms platform and can forecast/absorb per-resolution costs.

G2 rating: 4.5/5

Extended Reading: Best Intercom Alternatives for Customer Service Automation

Zendesk

Best for: Enterprises and large multi-team support orgs that need a full support suite (tickets, KB, chat, analytics) with AI across channels.

Top AI strengths: AI agents & copilots, generative search (natural-language), stale-content detection, omnichannel AI.

Key caveats: Knowledge tends to be siloed inside Zendesk; automation can feel rigid; setup and tuning are resource-intensive.

Pricing: Quote-based; expect enterprise tiers in the ~$100–$150+/seat/mo range for advanced AI features. AI Flows comes with add-on - Zendesk Copilot which is $50/agent for a month.

Buyer fit: Pick Zendesk if you’re an enterprise already in that ecosystem and have the team to manage a complex platform.

G2 rating: 4.3/5

Ada

Best for: Large enterprises and mid-market teams focused on ticket deflection, high conversation volumes, and transaction flows (payments, bookings).

Top AI strengths: Strong NLP Reasoning Engine, in-chat transactions, 50+ language support, prebuilt automation playbooks.

Key caveats: Opaque, high custom pricing; long sales/onboarding cycles; needs substantial historical data to perform well.

Pricing: Custom quotes; rough annual ranges widely reported from ~$4k to $70k+ for enterprise deployments.

Buyer fit: Pick Ada if you need global, transactional bots and can commit to a long, high-touch deployment.

G2 rating: 4.6/5

Forethought

Best for: Large enterprises that need AI for ticket triage, intelligent routing, and automated deflection at scale.

Top AI strengths: Advanced triage and routing, custom intent models, KB gap detection, auto-generated automation flows.

Key caveats: Usage/deflection-based pricing penalizes success; heavy setup/tuning; needs ~20k tickets for best results.

Pricing: Quote-based; median annual cost examples around ~$74k (varies by volume & features).

Buyer fit: Pick Forethought if you have huge ticket volume, data to train custom models, and a team to manage AI ops.

G2 rating: 4.3/5

Document360

Best for: Companies with large product documentation libraries that need AI-assisted authoring and search (SaaS, product teams, developer docs).

Top AI strengths: Eddy AI writer/search, SEO suggestions, multilingual auto-translate, integrations to ticketing tools.

Key caveats: AI features locked behind higher tiers; credit-based prompts can cause surprise costs; knowledge is siloed inside Document360.

Pricing: Plan tiers by quote; Business/Enterprise include AI features and integrations; credits included per plan.

Buyer fit: Pick Document360 if documentation is your product and you’ll pay for pro AI writing/search features.

G2 rating: 4.7/5

Guru

Best for: Mid→large orgs focused on internal knowledge and answers delivered inside daily tools (Slack, Teams, browser).

Top AI strengths: In-workflow AI answers, personalized results, strong HR/HRIS integrations, content verification tools.

Key caveats: Per-user pricing scales with headcount; 10-user minimum; rigid card structure can feel limiting for unusual workflows.

Pricing: ~$25/user/mo (annual billing), 10-user minimum; enterprise pricing custom.

Buyer fit: Pick Guru for internal enablement when you want answers surfaced where people already work.

G2 rating: 4.7/5

Slite

Best for: Small-to-medium teams that want an intuitive, lightweight wiki with built-in AI search (“Ask”) and clean UX.

Top AI strengths: Natural-language Ask across docs + some external sources, knowledge gap detection, Chrome extension and Slack links.

Key caveats: Per-user pricing scales with headcount; Ask limited on free tiers; integrations and API are weaker than enterprise tools.

Pricing: Free tier available; Standard ~$8/user/mo; Premium ~$16/user/mo; Enterprise custom.

Buyer fit: Pick Slite if you want a nimble, modern knowledge workspace for small teams and easy adoption.

G2 rating: 4.7/5

How to Choose the Right AI Knowledge Base in 2026

With dozens of AI tools claiming to “power support,” the real challenge is separating systems that improve answer quality from those that simply bolt AI onto old workflows. The right AI knowledge base should strengthen retrieval, unify information, and reduce operational overhead, not create another silo.

Use the following criteria to evaluate platforms meaningfully.

Evaluate retrieval quality, not just search

Legacy tools still optimize for search: keywords, article ranking, and filters. AI-native tools optimize for retrieval: understanding intent, pulling precise snippets, and generating answers grounded in source content.

What to test:

- Does the system retrieve the right 5–10% of an article or dump the whole document?

- Can it disambiguate similar queries (“reset password” vs “reset API token”)?

- Does every answer include citations?

If retrieval is weak, everything downstream (agent assist, automation, AI agents) breaks.
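One way to run these checks is a small evaluation harness. The sketch below is an illustration under stated assumptions, not any vendor's API: `toy_retrieve`, the sample snippets, and the test cases are all hypothetical, and the keyword-overlap scoring is only a stand-in for whatever semantic retrieval your platform actually uses. The idea is to swap in a call to the real system and keep the scoring.

```python
from typing import Callable

# Hypothetical snippet store: snippet id -> text.
SNIPPETS = {
    "kb-1#reset-password": "To reset your password open account settings and choose reset password",
    "kb-2#reset-api-token": "To reset an API token go to developer settings and regenerate the token",
    "kb-3#billing": "Invoices are issued on the first day of each billing cycle",
}


def toy_retrieve(query: str, k: int = 1) -> list[str]:
    """Rank snippets by shared lowercase words with the query (stand-in retriever)."""
    q_words = set(query.lower().split())
    scored = sorted(
        SNIPPETS,
        key=lambda sid: len(q_words & set(SNIPPETS[sid].lower().split())),
        reverse=True,
    )
    return scored[:k]


def hit_rate(retrieve: Callable[[str, int], list[str]],
             cases: list[tuple[str, str]], k: int = 1) -> float:
    """Fraction of queries whose expected snippet id appears in the top k."""
    hits = sum(1 for query, expected in cases if expected in retrieve(query, k))
    return hits / len(cases)


# Near-identical queries that should resolve to different snippets.
CASES = [
    ("how do I reset my password", "kb-1#reset-password"),
    ("how do I reset my API token", "kb-2#reset-api-token"),
]
print(hit_rate(toy_retrieve, CASES, k=1))
```

Because each case names the snippet it expects, the same harness also covers the citation check: an answer that cannot point to the expected snippet id counts as a miss.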

Consider your current support channels (Slack, Teams, or any internal platform)

Your knowledge base should meet users where the work happens. In 2026, support no longer lives in a single help desk. Teams rely on Slack threads, Teams channels, and shared documents.

Questions to ask:

- Can the AI surface answers directly inside Slack or Teams?

- Does retrieval work consistently across chat and web?

- Can agents access verified answers without switching tools?

A knowledge base that only works inside its own UI is already outdated.

More Reading: Using Microsoft Teams as a Knowledge Base -->

Look for real-time content verification

Documentation decays quickly: product changes, pricing updates, new edge cases. Without continuous validation, AI outputs drift and accuracy erodes.

Key signals of a mature system:

- Automated stale-content detection

- Alerts for conflicting or duplicate documents

- Version tracking tied to product releases

- Confidence scoring for retrieved answers

Verification is the difference between “fast answers” and “fast wrong answers.”
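The first two signals in the list above can be approximated with very little code. This is a minimal sketch, assuming each article carries a last-reviewed date; the `Article` fields, the 180-day review window, and the sample data are hypothetical, and production systems typically compare content rather than titles alone.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Article:
    article_id: str
    title: str
    last_reviewed: date


def find_stale(articles: list[Article], today: date, max_age_days: int = 180) -> list[str]:
    """Return ids of articles not reviewed within max_age_days (threshold is an assumption)."""
    return [a.article_id for a in articles
            if (today - a.last_reviewed).days > max_age_days]


def find_duplicate_titles(articles: list[Article]) -> list[list[str]]:
    """Group article ids whose titles match after lowercasing and collapsing whitespace."""
    groups: dict[str, list[str]] = defaultdict(list)
    for a in articles:
        key = " ".join(a.title.lower().split())
        groups[key].append(a.article_id)
    return [ids for ids in groups.values() if len(ids) > 1]


ARTICLES = [
    Article("a1", "Reset your password", date(2025, 1, 10)),
    Article("a2", "reset  your Password", date(2025, 11, 2)),
    Article("a3", "Pricing overview", date(2025, 10, 20)),
]
TODAY = date(2025, 12, 1)
print(find_stale(ARTICLES, TODAY))        # ids past the review window
print(find_duplicate_titles(ARTICLES))    # ids sharing a normalized title
```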

Prioritize integrations over feature lists

Most platforms look similar on paper, but real value comes from the sources they can pull information from.

Important considerations:

- Does it ingest from tools you actually use (Confluence, Google Docs, Notion, Slack, Git repos, ticketing tools)?

- Can it connect new sources without heavy manual cleanup?

- Does retrieval work across all connected sources, not just the internal wiki?

An AI knowledge base is only as strong as the data it can access.

Think about admin experience and maintenance load

Support teams don’t need another heavy system to manage. The right AI-native platform reduces overhead instead of adding to it.

Evaluate:

- How easy is it to update content?

- Does the system automate tagging, categorization, and organization?

- How much manual cleanup is needed to keep answers reliable?

- Can non-technical teams manage it without vendor dependency?

A good AI knowledge base should reduce operational load, not turn documentation into a part-time job.

Further Reading: A detailed guide on how to create the best B2B AI Knowledge Base -->

AI Tools for Automating Knowledge Base Creation and Delivery

Step-by-Step Guide to Getting Started with an AI Knowledge Base

Implementing an AI-native knowledge base isn’t about adding another tool; it’s about reorganizing your knowledge so AI can reliably operate on top of it. This framework helps teams move from scattered documentation to a retrieval-ready system that supports automation across channels.

Step 1: Audit what you already know (and what you don’t)

Begin by mapping your existing knowledge surface area: help center content, internal docs, Slack threads, legacy PDFs, troubleshooting guides, and agent notes. Identify what’s accurate, what’s duplicated, and what’s missing entirely.

Key outcomes at this stage:

- A clear list of canonical sources

- Known gaps (pricing changes, broken workflows, outdated policies)

- A view of where knowledge currently “lives” across tools

The goal isn’t perfection; it’s transparency. AI performs best when you know the boundaries of your knowledge.

Step 2: Define success metrics that AI can actually improve

Ambiguous goals slow implementations down. Set measurable outcomes tied to your real support constraints.

Examples:

- Reduce average handling time by X%

- Deflect Y% of repetitive questions

- Increase first-contact resolution for specific categories

- Improve agent response accuracy or consistency

- Reduce time spent searching for internal answers

These metrics will guide how you structure content, configure retrieval, and measure impact after rollout.

Step 3: Import, clean, and connect your existing documentation

AI-native knowledge bases work best when they can ingest all relevant knowledge, not just the parts stored in a single wiki. Connect sources like Confluence, Google Docs, Notion, Git repos, and Slack archives. Keep in mind that knowledge management also plays a key role in team collaboration.

Then streamline what gets pulled in:

- Remove duplicates

- Fix glaring inconsistencies

- Break long articles into retrieval-friendly chunks

- Add metadata where needed

- Tag content owners for ongoing upkeep

This is where retrieval quality is shaped. Clean, connected knowledge directly leads to better AI answers.
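The cleanup steps above (deduplicate, chunk, add metadata, tag owners) can be sketched in a few lines. This is an illustration under assumptions: the 50-word window, the 10-word overlap, the metadata fields, and the sample documents are all hypothetical choices, and real platforms usually chunk on headings or sentences rather than raw word counts.

```python
import hashlib


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word windows of `size` words that overlap by `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def build_chunks(docs: dict[str, str], owner: str,
                 size: int = 50, overlap: int = 10) -> list[dict]:
    """Chunk every document, skip exact duplicate chunks, attach simple metadata."""
    seen = set()
    records = []
    for source, text in docs.items():
        for index, chunk in enumerate(chunk_words(text, size, overlap)):
            digest = hashlib.sha256(chunk.lower().encode("utf-8")).hexdigest()
            if digest in seen:
                continue  # same text already ingested from another source
            seen.add(digest)
            records.append({"source": source, "chunk_index": index,
                            "owner": owner, "text": chunk})
    return records


# Hypothetical sources holding the same instructions in two places.
DOCS = {
    "help-center/reset-password": "Open account settings and choose reset password",
    "legacy-pdf/reset-password": "open account settings and choose reset password",
}
records = build_chunks(DOCS, owner="support-docs")
print(len(records))
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing some text twice.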

Step 4: Train AI agents to align with your knowledge patterns

Once content is unified, configure how the AI should behave. This includes:

- Setting tone and response rules

- Choosing which sources are authoritative

- Defining escalation logic to human agents

- Teaching the model product terminology and edge-case workflows

- Establishing which content requires strict citations

AI agents learn your patterns quickly when they have a consistent source of truth and guardrails for how to use it.
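Guardrails like these are usually set in a vendor's admin console, but the underlying logic is simple enough to sketch. The example below is hypothetical throughout: `AgentPolicy`, `DraftAnswer`, the 0.7 confidence threshold, and the source names are illustrative assumptions, not any platform's configuration schema.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    authoritative_sources: set[str]
    min_confidence: float = 0.7  # hypothetical threshold
    strict_citation_topics: set[str] = field(default_factory=set)


@dataclass
class DraftAnswer:
    topic: str
    confidence: float
    cited_sources: list[str]


def decide(policy: AgentPolicy, draft: DraftAnswer) -> str:
    """Return "send" or "escalate" for a drafted answer."""
    if draft.confidence < policy.min_confidence:
        return "escalate"  # low confidence goes to a human agent
    trusted = [s for s in draft.cited_sources if s in policy.authoritative_sources]
    if draft.topic in policy.strict_citation_topics and not trusted:
        return "escalate"  # strict topics need at least one authoritative citation
    return "send"


policy = AgentPolicy(
    authoritative_sources={"help-center", "pricing-page"},
    strict_citation_topics={"billing"},
)
print(decide(policy, DraftAnswer("billing", 0.9, ["slack-archive"])))
print(decide(policy, DraftAnswer("billing", 0.9, ["pricing-page"])))
```

Keeping the policy in data rather than in prompt text makes it easier to review and change as products and escalation rules evolve.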

Step 5: Roll out to support teams and measure real impact

Don’t launch everywhere at once. Start with a subset of channels (Slack, Teams, another comms platform, or your help center), then expand.

During rollout, track:

- Deflection rate

- Resolution accuracy

- Average handling time

- Agent adoption and confidence

- Questions the AI couldn’t answer (knowledge gaps)

Use these signals to refine your content and improve retrieval. Within weeks, you’ll see clearer patterns of what needs updating and how agents rely on the system.

Learn about all the Knowledge Base integrations provided by Enjo -->

The goal is a closed feedback loop where each answered question strengthens the next.
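Several of the rollout signals listed in Step 5 reduce to simple ratios over conversation logs. The sketch below assumes a hypothetical `Conversation` record and defines deflection rate as the share of conversations resolved without a human agent; your help desk may define and export these fields differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Conversation:
    resolved_by_ai: bool
    handling_minutes: Optional[float]  # None when no human agent was involved
    answered: bool                     # False when the AI found no answer


def rollout_metrics(conversations: list[Conversation]) -> dict[str, float]:
    """Compute deflection rate, average handling time, and knowledge-gap rate."""
    total = len(conversations)
    if total == 0:
        raise ValueError("no conversations to measure")
    deflected = sum(c.resolved_by_ai for c in conversations)
    human_times = [c.handling_minutes for c in conversations
                   if c.handling_minutes is not None]
    unanswered = sum(not c.answered for c in conversations)
    return {
        "deflection_rate": deflected / total,
        "avg_handling_minutes": sum(human_times) / len(human_times) if human_times else 0.0,
        "knowledge_gap_rate": unanswered / total,
    }


# Hypothetical pilot sample: two AI resolutions, two human-handled tickets.
SAMPLE = [
    Conversation(resolved_by_ai=True, handling_minutes=None, answered=True),
    Conversation(resolved_by_ai=True, handling_minutes=None, answered=True),
    Conversation(resolved_by_ai=False, handling_minutes=12.0, answered=True),
    Conversation(resolved_by_ai=False, handling_minutes=8.0, answered=False),
]
metrics = rollout_metrics(SAMPLE)
print(metrics)
```

Tracking the knowledge-gap rate alongside deflection shows whether unanswered questions are shrinking as content is updated.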

AI Knowledge Base - FAQs

How long does it take to deploy an AI-native knowledge base?

Typically 2 to 6 weeks, depending on how scattered your documentation is. Ingestion is fast; cleanup and structuring take the most time. Teams with organized content can go live much sooner.

Do LLM-powered knowledge bases replace human support?

No. They automate repetitive, well-documented questions, not complex or judgment-heavy issues. Human agents handle nuance, exceptions, and escalations; AI handles everything predictable.

What are the best AI-driven customer support knowledge bases?

The best AI-driven customer support knowledge bases combine real-time retrieval, automated updates from tickets, and contextual delivery across chat and helpdesk tools. They focus on fast resolution, consistency, and reducing agent dependency on manual searches.

What’s the ROI of moving from traditional search to AI retrieval?

Teams usually see improvements within 30–60 days: faster resolutions, higher deflection, and less manual triage. Retrieval reduces agent effort and eliminates the performance gap between new and senior agents.

How secure are AI knowledge bases with enterprise content?

Modern systems support access controls, encryption, SSO, audit logs, and compliance standards (SOC 2, ISO 27001). AI only retrieves content users are permitted to access and never blends data across tenants.
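The permission behavior described above is commonly implemented as a filter applied before retrieval, so restricted content never reaches the model. This is a minimal sketch of that idea; the `Doc` fields, tenant names, and group names are hypothetical, and real platforms typically mirror permissions from the source systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    tenant: str
    allowed_groups: frozenset[str]
    text: str


def visible_docs(docs: list[Doc], tenant: str, user_groups: set[str]) -> list[Doc]:
    """Keep only documents in the user's tenant that share at least one group with the user."""
    return [d for d in docs
            if d.tenant == tenant and d.allowed_groups & user_groups]


DOCS = [
    Doc("d1", "acme", frozenset({"support"}), "Refund policy for agents"),
    Doc("d2", "acme", frozenset({"finance"}), "Internal margin targets"),
    Doc("d3", "globex", frozenset({"support"}), "Another tenant's runbook"),
]
allowed = visible_docs(DOCS, tenant="acme", user_groups={"support"})
print([d.doc_id for d in allowed])
```

Retrieval then runs only over the filtered list, which is what keeps answers from mixing content across tenants or permission levels.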

Which companies offer the best knowledge base integrations for live answers?

Companies offering strong knowledge base integrations for live answers support real-time delivery inside tools like Slack, Microsoft Teams, and helpdesks. The most effective platforms, such as Enjo AI, integrate deeply with workflows so answers appear during conversations, not after manual searches.

Are self-hosted LLM options viable for documentation systems?

Yes, for companies with strict data requirements and the resources to manage infrastructure. However, they’re more complex and costly to maintain. Most teams opt for hosted, enterprise-grade platforms with strong governance controls.
