AI Chatbot Guide for 2026: Architecture, Use Cases, Deployment

AI chatbots now sit at the center of any organization's support. They handle customer questions on websites, create tickets in Slack or Teams, run workflows across Jira or ServiceNow, and retrieve answers from complex knowledge systems. This guide explains how a modern AI chatbot works, what architecture supports high accuracy, and how enterprises deploy chatbots responsibly across IT, HR, customer service, and operations.

TL;DR

- Modern AI chatbots combine LLM reasoning, retrieval, and deterministic workflows to resolve issues, not just answer FAQs.

- Website chatbots, Slack/Teams agents, and workflow engines all serve different support surfaces.

- Enterprise-ready bots require SSO, RBAC, audit logs, encryption, and permission-aware retrieval.

- Successful deployment follows a four-week rollout: connect channels → triage rules → surface rollout → workflow automation.

- Measurement hinges on retrieval precision, resolution rate, and workflow execution reliability.

What You’ll Learn

- How AI chatbots evolved from scripted bots into reasoning-based systems

- The architecture: LLMs, retrieval, actions, memory, and security controls

- Differences between website chatbots, collaboration-native agents, and automation engines

- Use cases across IT, HR, finance, and customer-facing support

- How to integrate a chatbot with Jira, ServiceNow, Confluence, and SharePoint

- A four-week deployment plan with actionable steps

- A measurement framework for accuracy, governance, and model drift

- Where Enjo fits as the unified AI support layer for web + Slack/Teams

What is an AI Chatbot

An AI chatbot is a system that can understand natural language, retrieve accurate information from connected knowledge sources, and perform structured actions such as creating tickets, triggering workflows, or updating records in enterprise tools. Unlike traditional rule-based bots, modern AI chatbots combine LLM reasoning, real-time retrieval, and deterministic execution to resolve issues end-to-end across surfaces like websites, Slack, and Microsoft Teams.

From rule-based scripts to reasoning-based LLM systems

Early chatbots followed strict trees: if user says X, respond with Y. These systems broke easily, required manual scripting, and couldn’t interpret natural language. They were useful only for predictable FAQs.

Today’s AI chatbots use large language models (LLMs) that:

- Parse intent from open-ended questions

- Reason through multi-step instructions

- Contextualize responses based on prior messages

- Adapt to unique phrasing, typos, and domain-specific jargon

But LLMs alone don’t create a reliable chatbot. Enterprises need structured retrieval, deterministic workflows, and guardrails.

A modern AI chatbot is closer to a service interface, a programmable layer between humans and systems.

How AI chatbots learn: retrieval, memory, workflows

An AI chatbot does not “learn” your organization the way a model trains on a dataset. Instead, it becomes effective by connecting to:

- Retrieval sources → Confluence, SharePoint, PDFs, Notion, product docs

- Workflow systems → Jira, ServiceNow, HRIS, IAM, financial tools

- Surface channels → website, Slack, Teams, mobile app

Check out the different integrations provided by Enjo.

The intelligence emerges from three layers working together:

- Retrieval layer: Pulls the correct policy or answer in real time, using embeddings, metadata, and permission checks.

- Conversation memory: Tracks context, user identity, and the evolving problem state across a session.

- Workflow execution: Runs actions such as creating tickets, routing approvals, resetting passwords, checking device health, or updating records.

This blend allows the chatbot to move beyond “What is our VPN policy?” to “Create a Jira incident and attach troubleshooting logs.”

Website chatbot vs Slack/Teams agent vs workflow engine

“AI chatbot” is now a broad term covering three distinct systems:

1. Website Chatbot (customer-facing)

- Captures leads

- Answers product or policy questions

- Deflects support tickets

- Escalates unresolved issues to CRM or ITSM tools

- Ideal for high-volume customer service.

2. Slack/Teams Agent (internal support)

- Lives inside collaboration tools where employees already work

- Creates Jira/ServiceNow tickets inline

- Retrieves internal answers with permission checks

- Runs approvals or access flows

- Essential for IT and HR operations.

3. Workflow Engine (back-end automation)

- Executes deterministic multi-step tasks

- Connects actions: create → enrich → notify → record

- Ensures reliability and auditability

- Critical for enterprise-grade accuracy.

Most enterprises now combine all three surfaces to create a unified support experience. This is also where Enjo positions itself: a single intelligence layer powering web chat, Slack, Teams, and workflow automation.

Where AI agents fit in the support ecosystem

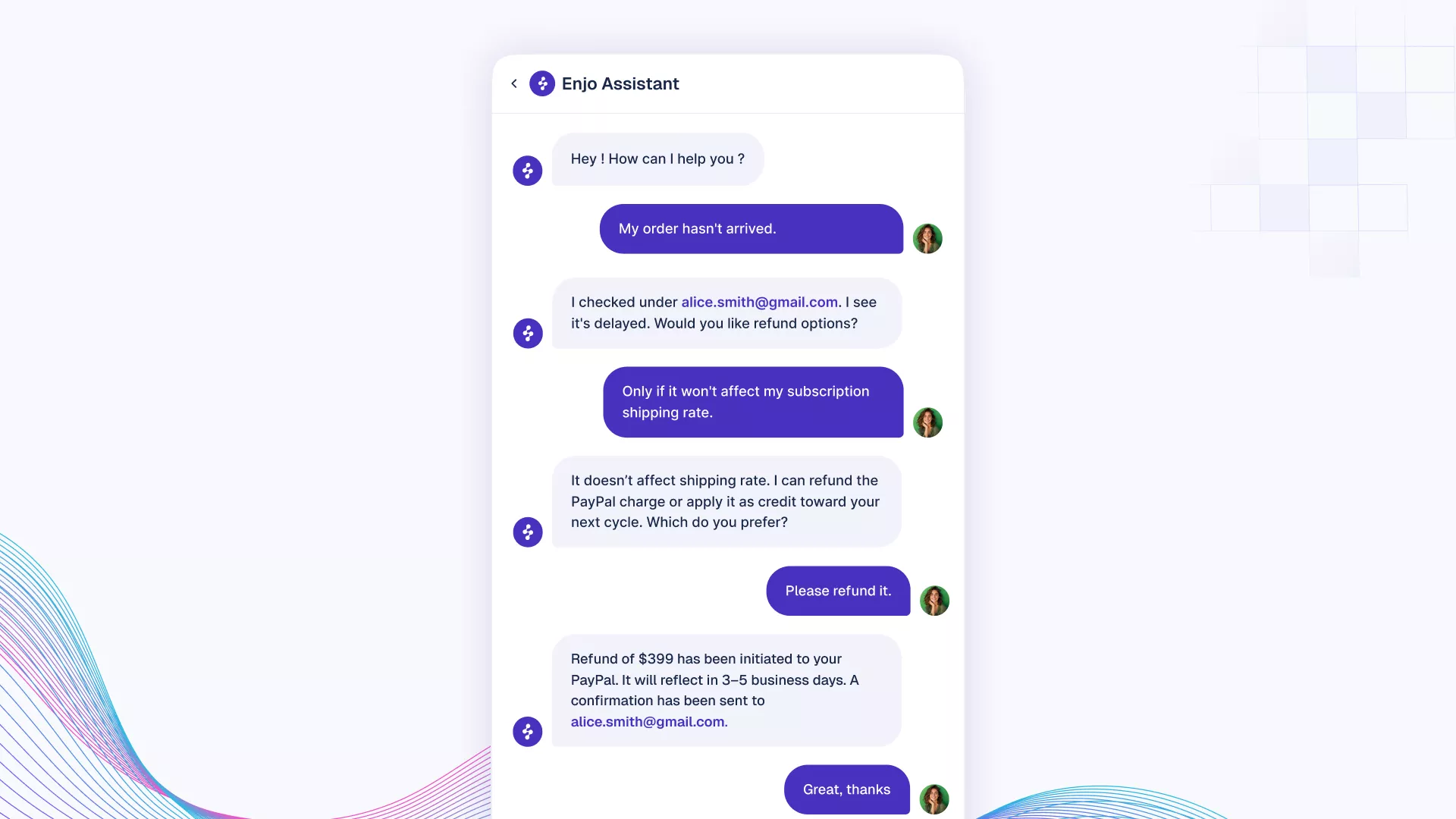

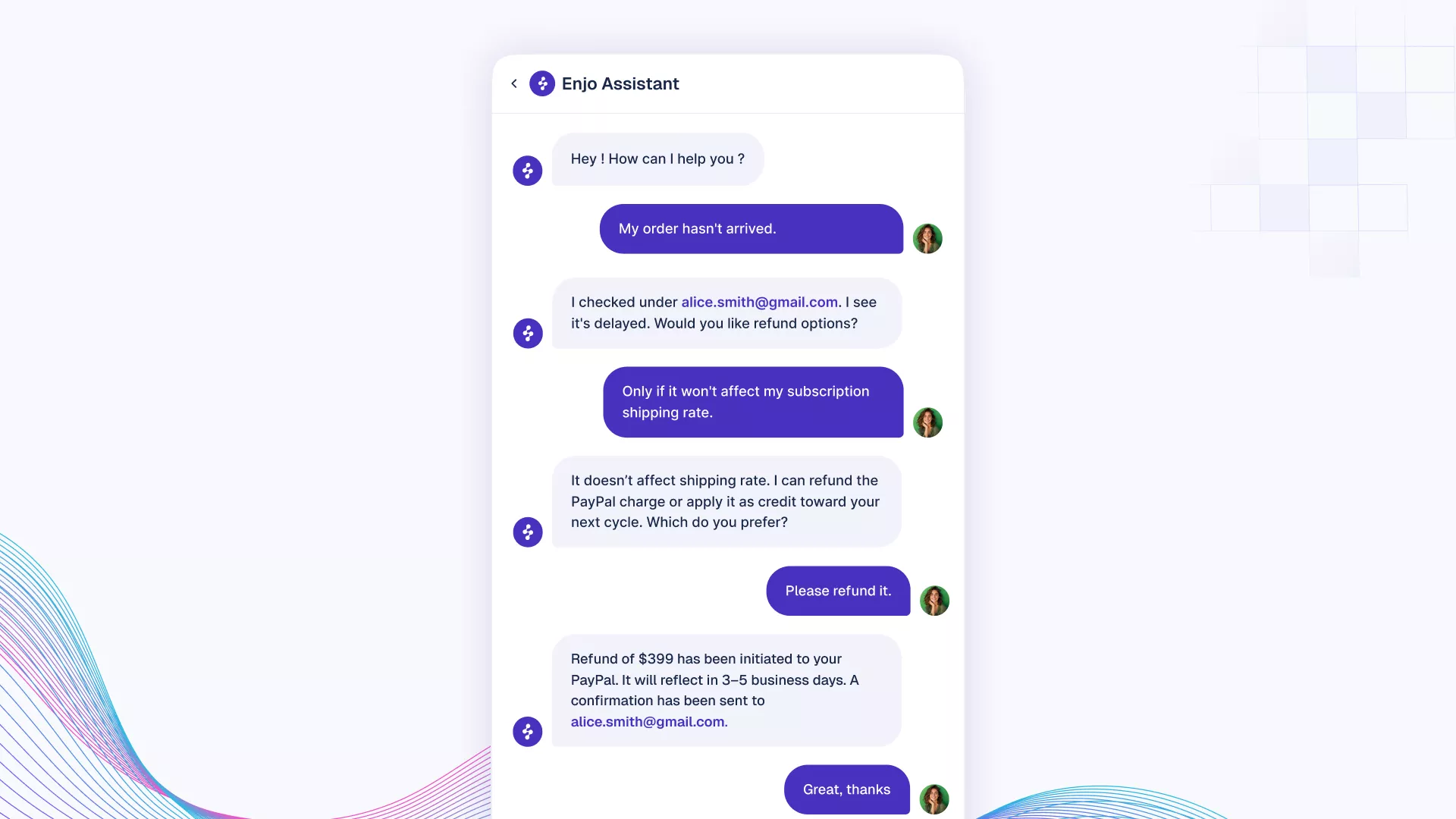

AI agents sit one layer above traditional chatbots. While a chatbot focuses on interpreting a question and returning an answer, an AI agent is designed to carry a request from intent to outcome. It interprets what the user wants, determines the sequence of steps required, executes those steps across connected systems, and verifies that each action completed successfully before reporting back.

In IT support, this means the agent doesn’t just respond with “Try resetting your VPN.” It can review diagnostic logs, infer the likely issue, trigger the appropriate workflow, create or update the Jira or ServiceNow ticket with structured context, and notify the right team if human intervention is still required. The user experiences a single conversation, but underneath, the agent is orchestrating multi-step work across multiple tools.

This is the difference between answering and resolving.

Enterprises don’t need yet another channel that repeats documentation; they need a system that turns conversational requests into completed tasks with predictability, auditability, and minimal friction.

Enjo in Practice

Enjo’s Deflect → Triage → Resolve → Reflect framework maps directly to this evolution. It retrieves answers, creates tickets in Slack/Teams, and executes multi-step workflows with deterministic logic.

Learn more: Case Studies

The Core Architecture of an AI Chatbot

A modern AI chatbot works because multiple layers operate together. LLMs handle language. Retrieval supplies grounded facts. Deterministic execution guarantees reliable actions. Identity and session state keep conversations coherent. And the entire system sits behind enterprise-grade security.

Enterprises evaluating AI chatbots should look for all five layers. Missing any layer leads to failure modes: hallucinations, inaccurate answers, brittle handoffs, or compliance gaps.

Natural language understanding (LLMs)

LLMs interpret user messages, extract intent, and generate responses. They excel at:

- Identifying what the user wants (“reset VPN” vs. “VPN slow”)

- Parsing messy phrasing, typos, or jargon

- Rewriting or summarizing content

- Guiding troubleshooting steps

But LLMs alone aren’t enough. Without grounding, they can guess wrong or produce answers from outdated information.

The right approach is a hybrid reasoning model:

- LLM → understand the question

- Retrieval → fetch the source-of-truth

- LLM → rewrite in context

- Deterministic logic → execute tasks and verify outcomes

This pattern provides accuracy and auditability.
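The four steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: the keyword rules stand in for an LLM call, and `KNOWLEDGE`, `reset_vpn_profile`, and `handle` are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical knowledge base; a real system would query a retrieval index.
KNOWLEDGE = {
    "vpn_policy": "VPN access requires a managed device and MFA.",
    "leave_policy": "Leave requests are submitted through the HR portal.",
}

@dataclass
class Reply:
    text: str
    source: Optional[str] = None   # which document grounded the answer
    action: Optional[str] = None   # which deterministic action ran, if any

def classify_intent(message: str) -> str:
    """Stand-in for the LLM intent step (keyword rules instead of a model call)."""
    text = message.lower()
    if "vpn" in text and "reset" in text:
        return "reset_vpn"
    if "vpn" in text:
        return "vpn_policy"
    if "leave" in text:
        return "leave_policy"
    return "unknown"

def reset_vpn_profile() -> bool:
    """Hypothetical deterministic action; returns True once the reset is verified."""
    return True

ACTIONS = {"reset_vpn": reset_vpn_profile}

def handle(message: str) -> Reply:
    intent = classify_intent(message)              # 1. understand the question
    if intent in ACTIONS:                          # 4. execute the task and verify it
        ok = ACTIONS[intent]()
        status = "completed" if ok else "failed"
        return Reply(f"Action '{intent}' {status}.", action=intent)
    passage = KNOWLEDGE.get(intent)                # 2. fetch the source of truth
    if passage is None:
        return Reply("No grounded answer found; handing off to a human.")
    # 3. A real system would ask the LLM to rewrite the passage in context.
    return Reply(f"According to current policy: {passage}", source=intent)
```

The point of the structure is that the model never answers from memory: a reply either cites a retrieved source, reports the result of a verified action, or hands off.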

Retrieval layer (docs, wikis, PDFs, APIs)

Retrieval is the backbone of an enterprise chatbot.

It connects the chatbot to:

- Confluence

- SharePoint

- Notion

- PDFs and slide decks

- API-based knowledge sources

- Product documentation, logs, and policy pages

The retrieval layer needs three capabilities:

1. Structured indexing

Documents must be chunked, embedded, and tagged with metadata like permissions, product area, last updated date, and classification.

2. Permission-aware filtering

Employees should only see answers they’re allowed to see. This requires role-based filtering at query time, not static snapshots.

3. Real-time freshness

Knowledge inside an enterprise is never static. Policies get updated, workflows shift, and product documentation evolves as teams release new features. An AI chatbot must reflect these changes the moment they occur. That requires continuous indexing, freshness checks, and permission-aware retrieval, not manual uploads or periodic syncs that quickly fall out of date. This is one of the clearest dividing lines between consumer chatbots and enterprise-ready systems: the latter deliver answers based on current organizational truth, not a frozen snapshot of it.
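A rough sketch of how these three capabilities interact at query time follows. It assumes a tiny in-memory index and uses word overlap as a placeholder for embedding similarity; the `Chunk` fields, role names, and sample documents are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Set

@dataclass(frozen=True)
class Chunk:
    text: str
    source: str
    allowed_roles: frozenset   # permission metadata attached at indexing time
    last_updated: date         # freshness metadata

# Hypothetical index contents, including an outdated and a current VPN policy.
INDEX = [
    Chunk("Travel expenses above 500 USD need director approval.",
          "finance-handbook", frozenset({"finance"}), date(2025, 11, 3)),
    Chunk("VPN access requires a managed device.",
          "it-wiki", frozenset({"employee", "finance"}), date(2025, 9, 12)),
    Chunk("VPN access requires a managed device and MFA.",
          "it-wiki", frozenset({"employee", "finance"}), date(2026, 1, 8)),
]

def score(query: str, chunk: Chunk) -> int:
    """Placeholder relevance score: shared words. Real systems compare embeddings."""
    query_words = set(query.lower().split())
    chunk_words = set(chunk.text.lower().replace(".", "").split())
    return len(query_words & chunk_words)

def search(query: str, user_roles: Set[str], top_k: int = 1) -> List[Chunk]:
    # Permission filter runs on every query, before ranking.
    visible = [c for c in INDEX if c.allowed_roles & user_roles]
    matches = [c for c in visible if score(query, c) > 0]
    # Rank by relevance, then prefer the most recently updated chunk.
    matches.sort(key=lambda c: (score(query, c), c.last_updated), reverse=True)
    return matches[:top_k]
```

With this data, an employee searching for "vpn access" gets the newer of the two VPN chunks, while a query about travel expenses returns nothing unless the caller holds the `finance` role.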

Deterministic Execution: the missing piece

Most legacy chatbots failed not because they misunderstood questions, but because they couldn’t take action. Today’s AI chatbots need a deterministic execution engine that:

- Calls APIs reliably

- Creates and updates tickets in Jira/ServiceNow

- Triggers approval workflows

- Provisions access or starts HR tasks

- Posts updates to Slack/Teams channels

- Verifies each step with success/failure checks

Determinism matters. LLMs can recommend a next step, but actions must be:

- Predictable

- Auditable

- Reversible

- Logged

- Governed

This separates “AI assistant” from “AI agent.” Without deterministic execution, enterprises risk silent failures, inconsistent results, and compliance gaps.
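One way to picture "predictable, auditable, reversible, logged" is a step runner that records every outcome and undoes completed steps when a later one fails. The sketch below is an assumption about how such an engine could look, not a description of any vendor's product; `Step`, `execute`, and the demo actions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Step:
    name: str
    run: Callable[[dict], bool]    # returns True only on verified success
    undo: Callable[[dict], None]   # compensating action used for rollback

def execute(steps: List[Step], ctx: dict, audit: List[Dict[str, str]]) -> bool:
    """Run steps in order; on failure, roll back completed steps in reverse."""
    done: List[Step] = []
    for step in steps:
        ok = step.run(ctx)
        audit.append({"step": step.name, "status": "ok" if ok else "failed"})
        if not ok:
            for prev in reversed(done):
                prev.undo(ctx)
                audit.append({"step": prev.name, "status": "rolled_back"})
            return False
        done.append(step)
    return True

# Demo actions (hypothetical): the ticket is created, then the access grant fails.
def create_ticket(ctx: dict) -> bool:
    ctx["ticket"] = "TICKET-1"
    return True

def delete_ticket(ctx: dict) -> None:
    ctx.pop("ticket", None)

def grant_access(ctx: dict) -> bool:
    return False  # simulate a failed verification check

def revoke_access(ctx: dict) -> None:
    ctx.pop("access", None)

ctx: dict = {}
audit: List[Dict[str, str]] = []
succeeded = execute(
    [Step("create_ticket", create_ticket, delete_ticket),
     Step("grant_access", grant_access, revoke_access)],
    ctx,
    audit,
)
```

After the run, `succeeded` is `False`, the ticket has been removed from `ctx`, and `audit` holds three entries: the successful ticket creation, the failed grant, and the rollback.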

Enjo’s approach (AI Flows) falls exactly in this category: LLM for reasoning, deterministic workflows for accuracy.

Conversation state, context windows & identity

A chatbot must track who is asking, what they asked before, and which systems they are allowed to access.

This requires:

1. Identity mapping

Slack/Teams identity ↔ internal directory ↔ permissions

This ensures correct access (e.g., finance policies for finance staff only).

2. State management

Multi-turn conversations require maintaining session memory:

- User intent

- Selected device or ticket

- Unresolved sub-steps

- Workflow progress

Without state, every message feels like starting from scratch.

3. Context window management

LLMs have token limits. A robust chatbot keeps the active conversation small but relevant, discarding noise and keeping key variables.

4. Channel-aware adaptation

- Website sessions are short.

- Slack sessions are threaded.

- Teams sessions may involve group channels.

The chatbot must behave differently on each surface.
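Context window management (item 3 above) can be illustrated with a simple budget-based trimmer. This is a sketch under loud assumptions: tokens are approximated as whitespace-separated words, which real tokenizers do not match, and `build_context` and the pinned variable names are hypothetical.

```python
from typing import Dict, List

def estimate_tokens(text: str) -> int:
    # Rough assumption: one token per word. Real tokenizers count differently.
    return len(text.split())

def build_context(pinned: Dict[str, str], history: List[str], budget: int) -> List[str]:
    """Always keep pinned state, then add the newest messages that fit the budget."""
    header = [f"{key}={value}" for key, value in sorted(pinned.items())]
    used = sum(estimate_tokens(line) for line in header)
    kept: List[str] = []
    for message in reversed(history):        # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break                            # older messages are dropped
        kept.append(message)
        used += cost
    return header + list(reversed(kept))     # restore chronological order

context = build_context(
    pinned={"ticket": "IT-42", "user": "u1"},
    history=["hi there", "my vpn keeps dropping", "it started today"],
    budget=8,
)
```

Here the pinned ticket and user survive regardless of budget, and only the most recent message fits alongside them. A production system would more likely summarize older turns than drop them outright.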

Security stack: SSO, RBAC, audit logs, encryption

Enterprises require more than “secure by default.” They need specific controls:

1. Single Sign-On (SSO)

Integration with Okta, Azure AD, or Google Workspace ensures identity consistency across surfaces. Enjo, for example, supports Okta SSO with native session management.

2. Role-Based Access Control (RBAC)

Different roles need different access rights:

- General employees

- IT admins

- HR leaders

- Workflow creators

Fine-grained RBAC prevents accidental exposure of sensitive information.

3. Audit logs

Every action must be recorded:

- Retrieval queries

- Workflow executions

- Ticket actions

- Escalations

- Admin changes

Auditability builds trust and supports compliance reviews.

4. Encryption

Data must be encrypted:

- At rest

- In transit

- Across private-link/VPC deployments (where available)

5. Governance workflows

Configuration changes should follow approvals and logging. Enterprise adoption depends on proving the chatbot can operate without creating risk.

Enjo in Practice: Enjo’s architecture was built around enterprise controls: Okta SSO, granular RBAC, full audit trails, and encrypted data flows. This makes Slack/Teams-native deployment viable for large enterprises with strict governance needs.

Channels Where AI Chatbots Operate

AI chatbots don’t live in a single interface anymore. They operate as a distributed service layer across multiple surfaces, each with its own interaction model, expectations, and constraints. The most successful deployments recognize that users behave differently on a public website than they do in Slack or Teams, and the chatbot must adapt accordingly.

The goal across all channels is the same: reduce friction, provide clarity, and route work into structured workflows. But the execution should feel channel-native, not generic.

Website Chatbot for Customers

A website chatbot serves two audiences simultaneously: users who want fast answers and teams who want cleaner funnels and fewer repetitive tickets. Its job is to:

- Interpret diverse customer intents

- Deliver precise answers with minimal dialogue

- Capture qualified leads without interrupting the experience

- Deflect routine support queries before they hit the helpdesk

- Escalate complex issues with complete context

Customer sessions are brief and transactional, often under 30 seconds. This places pressure on the chatbot to respond quickly, avoid unnecessary clarifications, and maintain a consistent tone aligned with brand trust.

What distinguishes effective website chatbots:

They combine retrieval (docs, FAQs, pricing) with routing logic (“book demo,” “start trial,” “connect to support”). They also integrate event tracking so teams can measure what questions drive conversions, where users drop off, and which flows should be refined.

For implementation details: Website Chatbot: Setup, Integrations & Real-World Use Cases

Slack/Teams Agent for employees

Inside an enterprise, the collaboration platform is the real support front door. Employees already escalate issues, share screenshots, and request approvals in Slack or Teams. A well-designed chatbot steps directly into this workflow rather than forcing users to switch into a portal.

A channel-native Slack/Teams agent should:

- Retrieve internal knowledge with permission awareness

- Create and update tickets inline

- Run multi-step actions (reset access, trigger approvals)

- Maintain conversation state across threaded discussions

- Adapt its responses to the culture and pace of internal chat

The biggest value is behavioral: employees stop context-switching. Instead of abandoning a task to “check a portal,” they continue working in the same channel where the problem originated. This is where internal adoption spikes and governance complexity increases: identity mapping, RBAC, and audit logs become essential.

Deeper exploration: AI Chatbot for IT & Internal Support (Slack/Teams)

Multi-channel knowledge hub

Most enterprises have knowledge sprawl:

- Historical docs in Confluence

- Updated policies in SharePoint

- Onboarding content in Notion

- Customer docs in the CMS

- Tribal knowledge buried in Slack threads

A modern AI chatbot acts as a knowledge unification layer. It indexes content across systems, filters based on permissions, and returns a single authoritative answer regardless of where the user interacts: website, Slack, Teams, or embedded widget. The impact goes beyond Q&A.

A unified retrieval layer eliminates the silent failure mode of giving different answers to different audiences. For example:

- A customer asking a pricing question on the website

- An employee asking about discount eligibility in Slack

The outputs must be consistent. This improves compliance, reduces internal confusion, and reinforces a predictable support experience.

Why Channel-Native behavior matters

Enterprises often fail with chatbots because they deploy a “one-size-fits-all” widget in places where the interaction pattern doesn’t fit. Channel-native behavior solves this mismatch.

On a website

Users want concise answers, minimal friction, and a clear next step.

A good website chatbot:

- Summarizes

- Avoids nested clarifying questions

- Provides safety rails for complex requests

- Routes users to the right CTA without sounding pushy

In Slack or Teams

Employees expect collaboration, threading, and persistence.

A good internal agent:

- Keeps state

- References earlier messages

- Runs commands inside the thread

- Adapts to the conversational style of the workspace

Within workflow execution

Deterministic logic matters more than personality.

A workflow engine:

- Verifies each step

- Logs actions for audits

- Handles retries

- Ensures correctness over creativity

Channel-native behavior is not optional; it’s foundational to trust and usability. Enterprises don’t want “a chatbot.” They want a consistent support interface that behaves intelligently in each place people work.

Enjo in Practice: Enjo uses one intelligence layer across all surfaces. The website chatbot, Slack/Teams agent, and workflow engine share the same retrieval, controls, and action execution, eliminating fragmentation and maintaining answer consistency.

Top Enterprise Use Cases Across IT, HR & Support

AI chatbots add the most value when they resolve work, not merely interpret text. Below are the use cases where enterprises consistently see impact at scale.

Self-service policy → Answer retrieval

Policy questions appear simple but consume enormous operational capacity. Employees ask variations of the same 30–40 topics: VPN access, leave policies, procurement rules, device allowances, security protocols.

A high-precision chatbot:

- Identifies the exact policy segment

- Applies permission filters

- Returns a clean, context-aware answer

- Links to authoritative documentation

- Tracks low-confidence responses for review

This reduces unnecessary escalation, raises policy adherence, and provides a measurable audit trail for compliance teams.

Ticket creation and updates

Ticketing is one of the most expensive support bottlenecks. Employees dislike portals, which means ticket data is often incomplete or delayed.

An AI chatbot solves this by turning conversation into structured work:

- Captures intent (“my VPN is failing again”)

- Attaches screenshots or logs

- Selects the correct Jira/ServiceNow issue type

- Enriches metadata automatically

- Updates the ticket as troubleshooting progresses

- Closes the ticket when resolved

What once required five steps in a portal becomes one message in chat.
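As an illustration, the sketch below turns one chat message into a structured issue body. The nested `fields` layout follows the shape Jira's REST API v2 accepts for issue creation; everything else is an assumption for this example, including the project key `IT`, the issue type names, the keyword routing rules, and the `chat-created` label. Nothing is sent over the network.

```python
from typing import Dict, List

# Hypothetical routing rules: first matching keyword decides the issue type.
ISSUE_TYPE_RULES = [
    ("access", "Service Request"),
    ("vpn", "Incident"),
    ("laptop", "Incident"),
]

def pick_issue_type(message: str) -> str:
    text = message.lower()
    for keyword, issue_type in ISSUE_TYPE_RULES:
        if keyword in text:
            return issue_type
    return "Task"  # assumed default when no rule matches

def build_issue(message: str, reporter: str, attachments: List[str]) -> Dict:
    """Build an issue payload from a chat message without calling any API."""
    body = message.strip()
    description = f"Reported via chat by {reporter}.\n\n{body}"
    if attachments:
        description += "\n\nAttachments: " + ", ".join(attachments)
    return {
        "fields": {
            "project": {"key": "IT"},                 # assumed project key
            "summary": body.splitlines()[0][:80],     # first line, truncated
            "description": description,
            "issuetype": {"name": pick_issue_type(body)},
            "labels": ["chat-created"],               # assumed label
        }
    }

issue = build_issue("My VPN is failing again", "alex@example.com", ["vpn.log"])
```

Issue type names and required fields vary by Jira or ServiceNow configuration, so a real integration would load them from the target project rather than hard-code them.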

HR queries, onboarding, and approvals

HR teams face repeated questions on payroll, benefits, onboarding, and documents. A chatbot reduces load and standardizes responses.

Examples:

- “What’s our parental leave policy?”

- “Where do I find my Form 16?”

- “What are the onboarding tasks for Day 1?”

For approval-heavy flows (hardware, allowances, onboarding), the chatbot orchestrates steps:

- Collect data

- Request approval

- Notify stakeholders

- Update HRIS or IT systems

HR teams regain hours each week, and employees experience more predictable support.

Troubleshooting and device support

Troubleshooting works best when a chatbot combines reasoning with deterministic actions.

Typical conversational paths:

- Understand the issue (“VPN reconnect loops”)

- Suggest verified steps (flush DNS, reset profile, check certs)

- Collect logs or device metadata

- Trigger backend checks or workflows

- Escalate with pre-filled technical context

This cuts resolution time because the chatbot handles the early diagnostic steps that L1 agents traditionally manage.

Website lead capture and customer FAQs

For customer-facing teams, the chatbot becomes a conversion tool:

- Interprets buying intent

- Answers advanced product, integration, and pricing questions

- Distinguishes between support vs sales queries

- Captures lead info

- Routes qualified users to demo or trial flows

- Logs patterns that inform product and documentation improvements

A strong website chatbot reduces friction in the buying journey while lowering the burden on customer service and sales teams.

How AI Chatbots Integrate Into Support Workflows

An AI chatbot becomes operationally valuable only when it integrates into the tools and systems that already anchor IT, HR, and customer support workflows. It should not behave like a parallel support channel. Instead, it must behave like a workflow orchestrator that interprets conversational intent, retrieves authoritative content, and executes structured actions across multiple systems. Modern enterprises converge around three integration categories: knowledge repositories, ticketing systems, and workflow engines.

Each one plays a distinct role in turning conversation into actionable, traceable work.

This integrated stack transforms the chatbot from a Q&A tool into a support interface that employees trust.

Multi-step flows (Agentic Behavior)

Agentic behavior is often misunderstood as “Autonomous AI.”

Enterprise-grade agentic behavior is much more practical: the chatbot decomposes a request into steps, executes them in order, verifies outcomes, and maintains a complete audit trail.

A well-governed agentic flow has characteristics such as:

- Clear preconditions (“user must exist in HRIS”)

- Deterministic transitions (“if approved → provision access”)

- Built-in error recovery (“retry API call,” “fallback to human escalation”)

- User-facing transparency (“Step 3 completed → waiting on approver”)

This model replaces legacy processes where a single access request triggers a week of back-and-forth messages across email and tickets.
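The four characteristics listed above can be shown in a single access-request flow. This is a hedged sketch: the HRIS lookup, approver callback, and provisioning call are hypothetical stand-ins passed in as plain Python values, and the retry policy (three attempts, no backoff) is an arbitrary choice for illustration.

```python
from typing import Callable, List, Set, Tuple

def call_with_retry(fn: Callable[[], None], attempts: int = 3) -> bool:
    """Built-in error recovery: retry transient failures a fixed number of times."""
    for _ in range(attempts):
        try:
            fn()
            return True
        except ConnectionError:
            continue
    return False

def access_request_flow(
    user: str,
    hris_users: Set[str],
    approve: Callable[[str], bool],
    provision: Callable[[str], None],
) -> Tuple[str, List[str]]:
    progress: List[str] = []                      # user-facing transparency
    if user not in hris_users:                    # clear precondition
        progress.append("Precondition failed: user not found in HRIS")
        return "rejected", progress
    progress.append("Step 1 completed: user verified")
    if not approve(user):                         # deterministic transition
        progress.append("Step 2 completed: request denied by approver")
        return "denied", progress
    progress.append("Step 2 completed: approved")
    if not call_with_retry(lambda: provision(user)):
        progress.append("Step 3 failed after retries: escalating to a human")
        return "escalated", progress
    progress.append("Step 3 completed: access provisioned")
    return "provisioned", progress

# Demo: provisioning times out twice, then succeeds on the third attempt.
attempts_seen: List[str] = []

def flaky_provision(user: str) -> None:
    attempts_seen.append(user)
    if len(attempts_seen) < 3:
        raise ConnectionError("provisioning API timed out")

status, progress = access_request_flow("dana", {"dana"}, lambda u: True, flaky_provision)
```

Every exit path returns both a status and the progress log, so the conversation can always show the user which step the request reached.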

Decision Framework

Automation must follow predictable rules. Handoffs must cover ambiguity or risk.

This structured approach prevents “over-automation,” one of the most common deployment failures.

Governance and accuracy tuning

Accuracy doesn’t remain stable on its own. Enterprise chatbots require continuous governance to maintain reliability across evolving policies, workflows, and integration dependencies.

Instead of long lists, here’s a more useful operational view:

Want to test how an AI chatbot performs inside Slack or Teams with real tickets and workflows? Start a free Enjo trial and deploy in minutes.

FAQ

1. What is an AI chatbot?

An AI chatbot is a system that interprets natural language, retrieves information from connected knowledge sources, and performs structured actions. Modern enterprise chatbots combine LLM reasoning, retrieval, and workflow execution so they can resolve tasks (ticket creation, approvals, troubleshooting) across tools like Jira, ServiceNow, Confluence, and SharePoint. They operate across multiple surfaces such as websites, Slack, and Microsoft Teams.

2. Which AI chatbot is best for enterprise support?

The best AI chatbot depends on your environment and use case. For support workflows, the most effective systems integrate deeply with collaboration tools (Slack/Teams), support ticketing platforms (Jira/ServiceNow), and knowledge bases (Confluence/SharePoint). Strong governance, RBAC, audit trails, and deterministic workflows matter more than model size. Enjo fits this category with channel-native behavior and enterprise controls.

3. Is there any free AI chatbot I can try?

Most enterprise-grade solutions offer a free tier, trial, or pilot period to evaluate retrieval accuracy, action reliability, and workflow execution. Enjo provides a free trial so teams can test real flows inside Slack/Teams without engineering setup: Sign up today

4. What can an AI chatbot automate in IT, HR, or customer support?

AI chatbots automate predictable, rule-driven tasks such as policy answers, ticket triage, device troubleshooting, onboarding steps, access provisioning, and multi-step approval flows. They also serve customer-facing needs like product FAQs, pricing questions, and lead qualification. Tasks requiring human judgment or ambiguous interpretation should escalate to human teams.

5. How do I deploy an AI chatbot securely?

Secure deployment requires SSO integration (e.g., Okta), RBAC to control access, encrypted data flows, and full audit logs of retrieval and actions. Enterprises should also review content freshness, workflow success rate, and permission mapping monthly to prevent drift or incorrect responses.

6. How do I measure whether my AI chatbot is performing well?

The most reliable indicators are:

- Retrieval precision (correctness of sourced answers)

- Resolution rate (issues solved end-to-end)

- Action success rate (workflow reliability)

- Deflection rate (reduction in tickets)

- Governance metrics (fallback accuracy, escalation quality)
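The first four indicators reduce to simple ratios. The sketch below shows one plausible way to compute them; the exact definitions (for example, measuring deflection against a pre-launch ticket baseline) and all sample counts are assumptions for illustration, not figures from a real deployment.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class Counts:
    answers_reviewed: int         # sampled answers checked by a human
    answers_correct: int          # of those, grounded in the right source
    conversations: int            # total support conversations
    resolved_end_to_end: int      # closed without human intervention
    actions_attempted: int        # workflow steps started
    actions_succeeded: int        # workflow steps verified as successful
    tickets_before: int           # baseline ticket volume (assumed comparison period)
    tickets_after: int            # ticket volume for the same period after launch

def ratio(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0

def support_metrics(c: Counts) -> Dict[str, float]:
    return {
        "retrieval_precision": ratio(c.answers_correct, c.answers_reviewed),
        "resolution_rate": ratio(c.resolved_end_to_end, c.conversations),
        "action_success_rate": ratio(c.actions_succeeded, c.actions_attempted),
        "deflection_rate": ratio(c.tickets_before - c.tickets_after, c.tickets_before),
    }

# Made-up sample counts, purely to show the arithmetic.
metrics = support_metrics(Counts(50, 45, 200, 120, 60, 57, 400, 300))
```

Tracking the same ratios week over week matters more than any single value, since a drop in retrieval precision or action success rate is often the first sign of stale content or a broken integration.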

7. Do I need to train or fine-tune a model?

Not usually. Modern chatbots rely on retrieval and deterministic workflows rather than model training. Connecting knowledge sources, implementing clear triage rules, and defining workflow logic deliver more accuracy than fine-tuning. Enterprises refine content, not models.

TL;DR

- Modern AI chatbots combine LLM reasoning, retrieval, and deterministic workflows to resolve issues, not just answer FAQs.

- Website chatbots, Slack/Teams agents, and workflow engines all serve different support surfaces.

- Enterprise-ready bots require SSO, RBAC, audit logs, encryption, and permission-aware retrieval.

- Successful deployment follows a four-week rollout: connect channels → triage rules → surface rollout → workflow automation.

- Measurement hinges on retrieval precision, resolution rate, and workflow execution reliability.

What You’ll Learn

- How AI chatbots evolved from scripted bots into reasoning-based systems

- The architecture: LLMs, retrieval, actions, memory, and security controls

- Differences between website chatbots, collaboration-native agents, and automation engines

- Use cases across IT, HR, finance, and customer-facing support

- How to integrate a chatbot with Jira, ServiceNow, Confluence, and SharePoint

- A four-week deployment plan with actionable steps

- A measurement framework for accuracy, governance, and model drift

- Where Enjo fits as the unified AI support layer for web + Slack/Teams

What is an AI Chatbot

An AI chatbot is a system that can understand natural language, retrieve accurate information from connected knowledge sources, and perform structured actions such as creating tickets, triggering workflows, or updating records in enterprise tools. Unlike traditional rule-based bots, modern AI chatbots combine LLM reasoning, real-time retrieval, and deterministic execution to resolve issues end-to-end across surfaces like websites, Slack, and Microsoft Teams.

From rule-based scripts to reasoning-based LLM systems

Early chatbots followed strict trees: if user says X, respond with Y. These systems broke easily, required manual scripting, and couldn’t interpret natural language. They were useful only for predictable FAQs.

Today’s AI chatbots use large language models (LLMs) that:

- Parse intent from open-ended questions

- Reason through multi-step instructions

- Contextualize responses based on prior messages

- Adapt to unique phrasing, typos, and domain-specific jargon

But LLMs alone don’t create a reliable chatbot. Enterprises need structured retrieval, deterministic workflows, and guardrails.

A modern AI chatbot is closer to a service interface, a programmable layer between humans and systems.

How AI chatbots learn: retrieval, memory, workflows

An AI chatbot does not “learn” your organization the way a model trains on a dataset. Instead, it becomes effective by connecting to:

- Retrieval sources → Confluence, SharePoint, PDFs, Notion, product docs

- Workflow systems → Jira, ServiceNow, HRIS, IAM, financial tools

- Surface channels → website, Slack, Teams, mobile app

Check out the different integrations provided by Enjo.

The intelligence emerges from three layers working together:

- Retrieval layer: Pulls the correct policy or answer in real time, using embeddings, metadata, and permission checks.

- Conversation memory: Tracks context, user identity, and the evolving problem state across a session.

- Workflow execution: Runs actions - create tickets, route approvals, reset passwords, check device health, or update records.

This blend allows the chatbot to move beyond “What is our VPN policy?” to “Create a Jira incident and attach troubleshooting logs.”

Website chatbot vs Slack/Teams agent vs workflow engine

“AI chatbot” is now a broad term covering three distinct systems:

1. Website Chatbot (customer-facing)

- Captures leads

- Answers product or policy questions

- Deflects support tickets

- Escalates unresolved issues to CRM or ITSM tools

- Ideal for high-volume customer service.

2. Slack/Teams Agent (internal support)

- Lives inside collaboration tools where employees already work

- Creates Jira/ServiceNow tickets inline

- Retrieves internal answers with permission checks

- Runs approvals or access flows

- Essential for IT and HR operations.

3. Workflow Engine (back-end automation)

- Executes deterministic multi-step tasks

- Connects actions: create → enrich → notify → record

- Ensures reliability and auditability

- Critical for enterprise-grade accuracy.

Most enterprises now combine all three surfaces to create a unified support experience. This is also where Enjo positions itself: a single intelligence layer powering web chat, Slack, Teams, and workflow automation.

Where AI agents fit in the support ecosystem

AI agents sit one layer above traditional chatbots. While a chatbot focuses on interpreting a question and returning an answer, an AI agent is designed to carry a request from intent to outcome. It interprets what the user wants, determines the sequence of steps required, executes those steps across connected systems, and verifies that each action completed successfully before reporting back.

In IT support, this means the agent doesn’t just respond with “Try resetting your VPN.” It can review diagnostic logs, infer the likely issue, trigger the appropriate workflow, create or update the Jira or ServiceNow ticket with structured context, and notify the right team if human intervention is still required. The user experiences a single conversation, but underneath, the agent is orchestrating multi-step work across multiple tools.

This is the difference between answering and resolving.

Enterprises don’t need yet another channel that repeats documentation, they need a system that turns conversational requests into coleted tasks with predictability, auditability, and minimal friction.

Enjo in Practice

Enjo’s Deflect → Triage → Resolve → Reflect framework maps directly to this evolution. It retrieves answers, creates tickets in Slack/Teams, and executes multi-step workflows with deterministic logic.

Learn more: Case Studies

The Core Architecture of an AI Chatbot

A modern AI chatbot works because multiple layers operate together. LLMs handle language. Retrieval supplies grounded facts. Deterministic execution guarantees reliable actions. Identity and session state keep conversations coherent. And the entire system sits behind enterprise-grade security.

Enterprises evaluating AI chatbots should look for all five layers. Missing any layer leads to failure modes: hallucinations, inaccurate answers, brittle handoffs, or compliance gaps.

Natural language understanding (LLMs)

LLMs interpret user messages, extract intent, and generate responses. They excel at:

- Identifying what the user wants (“reset VPN” vs. “VPN slow”)

- Parsing messy phrasing, typos, or jargon

- Rewriting or summarizing content

- Guiding troubleshooting steps

But LLMs alone aren’t enough. Without grounding, they can guess wrong or produce answers from outdated information.

The right approach is a hybrid reasoning model:

- LLM → understand the question

- Retrieval → fetch the source-of-truth

- LLM → rewrite in context

- Deterministic logic → execute tasks and verify outcomes

This pattern provides accuracy and auditability.

Retrieval layer (docs, wikis, PDFs, APIs)

Retrieval is the backbone of an enterprise chatbot.

It connects the chatbot to:

- Confluence

- SharePoint

- Notion

- PDFs and slide decks

- API-based knowledge sources

- Product documentation, logs, and policy pages

The retrieval layer needs three capabilities:

1. Structured indexing

Documents must be chunked, embedded, and tagged with metadata like permissions, product area, last updated date, and classification.

2. Permission-aware filtering

Employees should only see answers they’re allowed to see. This requires role-based filtering at query time, not static snapshots.

3. Real-time freshness

Knowledge inside an enterprise is never static. Policies get updated, workflows shift, and product documentation evolves as teams release new features. An AI chatbot must reflect these changes the moment they occur. That requires continuous indexing, freshness checks, and permission-aware retrieval, not manual uploads or periodic syncs that quickly fall out of date. This is one of the clearest dividing lines between consumer chatbots and enterprise-ready systems: the latter deliver answers based on current organizational truth, not a frozen snapshot of it.
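A minimal sketch of how chunk metadata supports permission and freshness checks at query time. The `Chunk` fields, the `visible_chunks` function, and the age threshold are assumptions made for illustration; a production system would apply the same filter inside its vector or search store and then rank the survivors by relevance.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Chunk:
    text: str
    allowed_roles: set      # permissions captured at indexing time
    product_area: str
    last_updated: date


def visible_chunks(chunks, user_roles, max_age_days, today):
    """Keep only chunks the user may see and that are recent enough."""
    result = []
    for chunk in chunks:
        if not (chunk.allowed_roles & user_roles):
            continue  # the user holds none of the roles allowed to read this chunk
        if (today - chunk.last_updated).days > max_age_days:
            continue  # stale content: exclude it rather than risk an outdated answer
        result.append(chunk)
    return result


index = [
    Chunk("Travel policy v3", {"employee"}, "finance", date(2025, 11, 1)),
    Chunk("Payroll run-book", {"finance"}, "finance", date(2025, 12, 1)),
    Chunk("Travel policy v1", {"employee"}, "finance", date(2023, 1, 1)),
]

hits = visible_chunks(index, {"employee"}, max_age_days=365, today=date(2026, 1, 15))
print([c.text for c in hits])
```

Because roles are evaluated on every query, a change in the user's role takes effect on the next question rather than waiting for a re-index.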

Deterministic Execution: the missing piece

Most legacy chatbots failed not because they misunderstood questions, but because they couldn’t take action. Today’s AI chatbots need a deterministic execution engine that:

- Calls APIs reliably

- Creates and updates tickets in Jira/ServiceNow

- Triggers approval workflows

- Provisions access or starts HR tasks

- Posts updates to Slack/Teams channels

- Verifies each step with success/failure checks

Determinism matters here. LLMs can recommend a next step, but actions must be:

- Predictable

- Auditable

- Reversible

- Logged

- Governed

This separates “AI assistant” from “AI agent.” Without deterministic execution, enterprises risk silent failures, inconsistent results, and compliance gaps.
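One way to picture a deterministic execution engine is a fixed list of steps, each paired with its own success check and logged as it runs. The sketch below is hypothetical: `Step`, `execute`, and the example steps are illustrative names, and the step bodies stand in for real API calls to a ticketing or chat system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]      # performs the action, returns updated context
    verify: Callable[[dict], bool]   # success/failure check for that action


def execute(steps, context):
    """Run steps in order; stop at the first failure; log every outcome."""
    audit_log = []
    for step in steps:
        try:
            context = step.run(dict(context))
            ok = step.verify(context)
        except Exception as exc:     # a raised error counts as a failed step
            ok = False
            audit_log.append({"step": step.name, "ok": ok, "error": str(exc)})
            return False, context, audit_log
        audit_log.append({"step": step.name, "ok": ok})
        if not ok:
            return False, context, audit_log
    return True, context, audit_log


# Placeholder steps (assumptions): real ones would call ticketing and chat APIs.
create_ticket = Step(
    "create_ticket",
    run=lambda ctx: {**ctx, "ticket_id": "IT-1"},
    verify=lambda ctx: "ticket_id" in ctx,
)
notify_channel = Step(
    "notify_channel",
    run=lambda ctx: {**ctx, "notified": True},
    verify=lambda ctx: ctx.get("notified") is True,
)

ok, final_context, log = execute([create_ticket, notify_channel], {"user": "alice"})
print(ok, log)
```

The LLM can choose which flow to start, but once a flow is chosen the order of steps and the checks do not vary, which is what makes each run predictable and auditable.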

Enjo’s approach (AI Flows) falls exactly in this category: LLM for reasoning, deterministic workflows for accuracy.

Conversation state, context windows & identity

A chatbot must track who is asking, what they asked before, and which systems they are allowed to access.

This requires:

1. Identity mapping

Slack/Teams identity ↔ internal directory ↔ permissions

This ensures correct access (e.g., finance policies for finance staff only).

2. State management

Multi-turn conversations require maintaining session memory:

- User intent

- Selected device or ticket

- Unresolved sub-steps

- Workflow progress

Without state, every message feels like starting from scratch.

3. Context window management

LLMs have token limits. A robust chatbot keeps the active conversation small but relevant, discarding noise while keeping key variables.

4. Channel-aware adaptation

- Website sessions are short.

- Slack sessions are threaded.

- Teams sessions may involve group channels.

The chatbot must behave differently on each surface.
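State management and context window management can work together: key session variables stay pinned, and only as many recent messages as fit the budget are kept. The sketch below assumes a crude whitespace token count and a hypothetical `build_prompt_context` helper; a real system would use the model's own tokenizer.

```python
def build_prompt_context(state, history, max_tokens,
                         count_tokens=lambda s: len(s.split())):
    """Pin session state, then add the most recent messages that fit the budget."""
    pinned = [f"{key}: {value}" for key, value in state.items()]
    budget = max_tokens - sum(count_tokens(p) for p in pinned)
    kept = []
    for message in reversed(history):   # walk from newest to oldest
        cost = count_tokens(message)
        if cost > budget:
            break                       # older messages are dropped as noise
        kept.append(message)
        budget -= cost
    return pinned + list(reversed(kept))  # restore chronological order


session_state = {"intent": "vpn_reset", "ticket": "IT-42"}
thread = ["hi there", "my vpn keeps dropping", "it fails after login"]

print(build_prompt_context(session_state, thread, max_tokens=12))
```

Pinning the state first means the user's intent and selected ticket survive even when older messages are trimmed.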

Security stack: SSO, RBAC, audit logs, encryption

Enterprises require more than “secure by default.” They need specific controls:

1. Single Sign-On (SSO)

Integration with Okta, Azure AD, or Google Workspace ensures identity consistency across surfaces. Enjo, for example, supports Okta SSO with native session management.

2. Role-Based Access Control (RBAC)

Different roles need different access rights:

- General employees

- IT admins

- HR leaders

- Workflow creators

Fine-grained RBAC prevents accidental exposure of sensitive information.

3. Audit logs

Every action must be recorded:

- Retrieval queries

- Workflow executions

- Ticket actions

- Escalations

- Admin changes

Auditability builds trust and supports compliance reviews.

4. Encryption

Data must be encrypted:

- At rest

- In transit

- Across private-link/VPC deployments (where available)

5. Governance workflows

Configuration changes should follow approvals and logging. Enterprise adoption depends on proving the chatbot can operate without creating risk.
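RBAC and audit logging are easiest to reason about when every permission check writes a log entry, whether it is allowed or denied. The role names below mirror the list above, but the permission names, the `ROLE_PERMISSIONS` table, and the `authorize` function are assumptions made for this sketch.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real deployments load this from
# the identity provider or an admin console.
ROLE_PERMISSIONS = {
    "employee": {"read_general_policy"},
    "it_admin": {"read_general_policy", "reset_access"},
    "hr_leader": {"read_general_policy", "read_hr_records"},
    "workflow_creator": {"read_general_policy", "edit_workflow"},
}


def authorize(user, role, permission, audit_log):
    """Return whether the role grants the permission, and record the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


log = []
print(authorize("alice", "it_admin", "reset_access", log))
print(authorize("bob", "employee", "read_hr_records", log))
print(len(log))
```

Logging denials as well as approvals gives compliance reviewers a record of attempted access, not only successful access.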

Enjo in Practice: Enjo’s architecture was built around enterprise controls: Okta SSO, granular RBAC, full audit trails, and encrypted data flows. This makes Slack/Teams-native deployment viable for large enterprises with strict governance needs.

Channels Where AI Chatbots Operate

AI chatbots don’t live in a single interface anymore. They operate as a distributed service layer across multiple surfaces, each with its own interaction model, expectations, and constraints. The most successful deployments recognize that users behave differently on a public website than they do in Slack or Teams, and the chatbot must adapt accordingly.

The goal across all channels is the same: reduce friction, provide clarity, and route work into structured workflows. But the execution should feel channel-native, not generic.

Website Chatbot for Customers

A website chatbot serves two audiences simultaneously: users who want fast answers and teams who want cleaner funnels and fewer repetitive tickets. Its job is to:

- Interpret diverse customer intents

- Deliver precise answers with minimal dialogue

- Capture qualified leads without interrupting the experience

- Deflect routine support queries before they hit the helpdesk

- Escalate complex issues with complete context

Customer sessions are brief and transactional, often under 30 seconds. This places pressure on the chatbot to respond quickly, avoid unnecessary clarifications, and maintain a consistent tone aligned with brand trust.

What distinguishes effective website chatbots:

They combine retrieval (docs, FAQs, pricing) with routing logic (“book demo,” “start trial,” “connect to support”). They also integrate event tracking so teams can measure what questions drive conversions, where users drop off, and which flows should be refined.

For implementation details: Website Chatbot: Setup, Integrations & Real-World Use Cases

Slack/Teams Agent for employees

Inside an enterprise, the collaboration platform is the real support front door. Employees already escalate issues, share screenshots, and request approvals in Slack or Teams. A well-designed chatbot steps directly into this workflow rather than forcing users to switch into a portal.

A channel-native Slack/Teams agent should:

- Retrieve internal knowledge with permission awareness

- Create and update tickets inline

- Run multi-step actions (reset access, trigger approvals)

- Maintain conversation state across threaded discussions

- Adapt its responses to the culture and pace of internal chat

The biggest value is behavioral: employees stop context-switching. Instead of abandoning a task to “check a portal,” they continue working in the same channel where the problem originated. This is also where internal adoption spikes and governance complexity increases, so identity mapping, RBAC, and audit logs become essential.

Deeper exploration: AI Chatbot for IT & Internal Support (Slack/Teams)

Multi-channel knowledge hub

Most enterprises have knowledge sprawl:

- Historical docs in Confluence

- Updated policies in SharePoint

- Onboarding content in Notion

- Customer docs in the CMS

- Tribal knowledge buried in Slack threads

A modern AI chatbot acts as a knowledge unification layer. It indexes content across systems, filters based on permissions, and returns a single authoritative answer regardless of where the user interacts: website, Slack, Teams, or embedded widget. The impact goes beyond Q&A.

A unified retrieval layer eliminates the silent failure mode of giving different answers to different audiences. For example:

- A customer asking a pricing question on the website

- An employee asking about discount eligibility in Slack

The outputs must be consistent. This improves compliance, reduces internal confusion, and reinforces a predictable support experience.

Why Channel-Native behavior matters

Enterprises often fail with chatbots because they deploy a “one-size-fits-all” widget in places where the interaction pattern doesn’t fit. Channel-native behavior solves this mismatch.

On a website

Users want concise answers, minimal friction, and a clear next step.

A good website chatbot:

- Summarizes

- Avoids nested clarifying questions

- Provides safety rails for complex requests

- Routes users to the right CTA without sounding pushy

In Slack or Teams

Employees expect collaboration, threading, and persistence.

A good internal agent:

- Keeps state

- References earlier messages

- Runs commands inside the thread

- Adapts to the conversational style of the workspace

Within workflow execution

Deterministic logic matters more than personality.

A workflow engine:

- Verifies each step

- Logs actions for audits

- Handles retries

- Ensures correctness over creativity

Channel-native behavior is not optional; it’s foundational to trust and usability. Enterprises don’t want “a chatbot.” They want a consistent support interface that behaves intelligently in each place people work.

Enjo in Practice: Enjo uses one intelligence layer across all surfaces. The website chatbot, Slack/Teams agent, and workflow engine share the same retrieval, controls, and action execution, eliminating fragmentation and maintaining answer consistency.

Top Enterprise Use Cases Across IT, HR & Support

AI chatbots add the most value when they resolve work, not merely interpret text. Below are the use cases where enterprises consistently see impact at scale.

Self-service policy → Answer retrieval

Policy questions appear simple but consume enormous operational capacity. Employees ask variations of the same 30–40 topics: VPN access, leave policies, procurement rules, device allowances, and security protocols.

A high-precision chatbot:

- Identifies the exact policy segment

- Applies permission filters

- Returns a clean, context-aware answer

- Links to authoritative documentation

- Tracks low-confidence responses for review

This reduces unnecessary escalation, raises policy adherence, and provides a measurable audit trail for compliance teams.

Ticket creation and updates

Ticketing is one of the most expensive support bottlenecks. Employees dislike portals, which means ticket data is often incomplete or delayed.

An AI chatbot solves this by turning conversation into structured work:

- Captures intent (“my VPN is failing again”)

- Attaches screenshots or logs

- Selects the correct Jira/ServiceNow issue type

- Enriches metadata automatically

- Updates the ticket as troubleshooting progresses

- Closes the ticket when resolved

What once required five steps in a portal becomes one message in chat.
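Turning a chat message into structured work amounts to filling a ticket schema from the conversation. The sketch below is illustrative only: `TicketDraft`, its fields, and the keyword rules are hypothetical and do not correspond to the Jira or ServiceNow APIs. In practice an LLM would classify the intent and a connector would map the draft onto the target system's fields.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class TicketDraft:
    summary: str
    issue_type: str
    reporter: str
    attachments: list = field(default_factory=list)
    labels: list = field(default_factory=list)


# Keyword rules stand in for LLM intent classification (an assumption).
ISSUE_TYPE_RULES = [("vpn", "Network"), ("laptop", "Hardware"), ("password", "Access")]


def draft_ticket(message, reporter, attachments=()):
    """Build a structured ticket draft from one chat message."""
    lowered = message.lower()
    issue_type = next(
        (kind for keyword, kind in ISSUE_TYPE_RULES if keyword in lowered),
        "General",
    )
    labels = ["recurring"] if "again" in lowered else []  # simple metadata enrichment
    return TicketDraft(
        summary=message.strip(),
        issue_type=issue_type,
        reporter=reporter,
        attachments=list(attachments),
        labels=labels,
    )


draft = draft_ticket("My VPN is failing again", "alice", ["vpn-client.log"])
print(asdict(draft))
```

The draft is plain data, so it can be shown to the user for confirmation before anything is written to the ticketing system.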

HR queries, onboarding, and approvals

HR teams face repeated questions on payroll, benefits, onboarding, and documents. A chatbot reduces load and standardizes responses.

Examples:

- “What’s our parental leave policy?”

- “Where do I find my Form 16?”

- “What are the onboarding tasks for Day 1?”

For approval-heavy flows (hardware, allowances, onboarding), the chatbot orchestrates steps:

- Collect data

- Request approval

- Notify stakeholders

- Update HRIS or IT systems

HR teams regain hours each week, and employees experience more predictable support.

Troubleshooting and device support

Troubleshooting works best when a chatbot combines reasoning with deterministic actions.

Typical conversational paths:

- Understand the issue (“VPN reconnect loops”)

- Suggest verified steps (flush DNS, reset profile, check certs)

- Collect logs or device metadata

- Trigger backend checks or workflows

- Escalate with pre-filled technical context

This cuts resolution time because the chatbot handles the early diagnostic steps that L1 agents traditionally manage.

Website lead capture and customer FAQs

For customer-facing teams, the chatbot becomes a conversion tool:

- Interprets buying intent

- Answers advanced product, integration, and pricing questions

- Distinguishes support queries from sales queries

- Captures lead info

- Routes qualified users to demo or trial flows

- Logs patterns that inform product and documentation improvements

A strong website chatbot reduces friction in the buying journey while lowering the burden on customer service and sales teams.

How AI Chatbots Integrate Into Support Workflows

An AI chatbot becomes operationally valuable only when it integrates into the tools and systems that already anchor IT, HR, and customer support workflows. It should not behave like a parallel support channel. Instead, it must behave like a workflow orchestrator that interprets conversational intent, retrieves authoritative content, and executes structured actions across multiple systems. Modern enterprises converge around three integration categories: knowledge repositories, ticketing systems, and workflow engines.

Each one plays a distinct role in turning conversation into actionable, traceable work.

This integrated stack transforms the chatbot from a Q&A tool into a support interface that employees trust.

Multi-step flows (Agentic Behavior)

Agentic behavior is often misunderstood as “Autonomous AI.”

Enterprise-grade agentic behavior is much more practical: the chatbot decomposes a request into steps, executes them in order, verifies outcomes, and maintains a complete audit trail.

A well-governed agentic flow has characteristics such as:

- Clear preconditions (“user must exist in HRIS”)

- Deterministic transitions (“if approved → provision access”)

- Built-in error recovery (“retry API call,” “fallback to human escalation”)

- User-facing transparency (“Step 3 completed → waiting on approver”)

This model replaces legacy processes where a single access request triggers a week of back-and-forth messages across email and tickets.
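The four characteristics above map onto a short, explicit flow. This sketch of an access request is hypothetical: `run_access_request` and its callbacks (`user_exists`, `is_approved`, `provision`) are illustrative stand-ins for HRIS, approval, and provisioning integrations, and the retry count is an arbitrary assumption.

```python
def run_access_request(request, user_exists, is_approved, provision, max_retries=2):
    """Precondition -> approval gate -> provisioning with retry and human fallback."""
    progress = []  # user-facing status lines, also usable as an audit trail

    # Clear precondition: the user must exist in the HRIS.
    if not user_exists(request["user"]):
        progress.append("precondition failed: user not in HRIS")
        return "escalated_to_human", progress
    progress.append("step 1 completed: user found")

    # Deterministic transition: only an approved request moves forward.
    if not is_approved(request):
        progress.append("step 2: waiting on approver")
        return "waiting_on_approver", progress
    progress.append("step 2 completed: approved")

    # Built-in error recovery: retry the call, then fall back to a human.
    for attempt in range(1, max_retries + 2):
        try:
            provision(request)
            progress.append(f"step 3 completed: access provisioned (attempt {attempt})")
            return "done", progress
        except RuntimeError:
            progress.append(f"step 3 failed (attempt {attempt})")
    return "escalated_to_human", progress


status, progress = run_access_request(
    {"user": "alice", "resource": "vpn"},
    user_exists=lambda user: True,
    is_approved=lambda req: True,
    provision=lambda req: None,
)
print(status, progress)
```

Every exit path returns both a status and the progress lines, so the user and the audit log see the same account of what happened.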

Decision Framework

Automation must follow predictable rules. Handoffs must cover ambiguity or risk.

This structured approach prevents “over-automation,” one of the most common deployment failures.

Governance and accuracy tuning

Accuracy doesn’t remain stable on its own. Enterprise chatbots require continuous governance to maintain reliability across evolving policies, workflows, and integration dependencies.

Instead of long lists, here’s a more useful operational view:

Want to test how an AI chatbot performs inside Slack or Teams with real tickets and workflows? Start a free Enjo trial and deploy in minutes.

FAQ

1. What is an AI chatbot?

An AI chatbot is a system that interprets natural language, retrieves information from connected knowledge sources, and performs structured actions. Modern enterprise chatbots combine LLM reasoning, retrieval, and workflow execution so they can resolve tasks (ticket creation, approvals, troubleshooting) across tools like Jira, ServiceNow, Confluence, and SharePoint. They operate across multiple surfaces such as websites, Slack, and Microsoft Teams.

2. Which AI chatbot is best for enterprise support?

The best AI chatbot depends on your environment and use case. For support workflows, the most effective systems integrate deeply with collaboration tools (Slack/Teams), support ticketing platforms (Jira/ServiceNow), and knowledge bases (Confluence/SharePoint). Strong governance, RBAC, audit trails, and deterministic workflows matter more than model size. Enjo fits this category with channel-native behavior and enterprise controls.

3. Is there any free AI chatbot I can try?

Most enterprise-grade solutions offer a free tier, trial, or pilot period to evaluate retrieval accuracy, action reliability, and workflow execution. Enjo provides a free trial so teams can test real flows inside Slack/Teams without engineering setup: Sign up today

4. What can an AI chatbot automate in IT, HR, or customer support?

AI chatbots automate predictable, rule-driven tasks such as policy answers, ticket triage, device troubleshooting, onboarding steps, access provisioning, and multi-step approval flows. They also serve customer-facing needs like product FAQs, pricing questions, and lead qualification. Tasks requiring human judgment or ambiguous interpretation should escalate to human teams.

5. How do I deploy an AI chatbot securely?

Secure deployment requires SSO integration (e.g., Okta), RBAC to control access, encrypted data flows, and full audit logs of retrieval and actions. Enterprises should also review content freshness, workflow success rate, and permission mapping monthly to prevent drift or incorrect responses.

6. How do I measure whether my AI chatbot is performing well?

The most reliable indicators are:

- Retrieval precision (correctness of sourced answers)

- Resolution rate (issues solved end-to-end)

- Action success rate (workflow reliability)

- Deflection rate (reduction in tickets)

- Governance metrics (fallback accuracy, escalation quality)
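As a rough illustration, the first four indicators can be computed from conversation logs. The record fields and the exact definitions here are assumptions: deflection is approximated as the share of conversations that ended without a ticket, and retrieval precision counts only answers a reviewer has judged.

```python
def support_metrics(conversations):
    """Compute headline rates from a list of conversation records."""
    def rate(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    total = len(conversations)
    judged = [c for c in conversations if c["retrieved_correct"] is not None]
    attempted = sum(c["actions_attempted"] for c in conversations)
    succeeded = sum(c["actions_succeeded"] for c in conversations)

    return {
        "retrieval_precision": rate(sum(c["retrieved_correct"] for c in judged), len(judged)),
        "resolution_rate": rate(sum(c["resolved"] for c in conversations), total),
        "action_success_rate": rate(succeeded, attempted),
        "deflection_rate": rate(sum(not c["ticket_created"] for c in conversations), total),
    }


# Hypothetical log records; None means the answer has not been reviewed yet.
sample_log = [
    {"retrieved_correct": True, "resolved": True, "actions_attempted": 2,
     "actions_succeeded": 2, "ticket_created": False},
    {"retrieved_correct": False, "resolved": False, "actions_attempted": 1,
     "actions_succeeded": 0, "ticket_created": True},
    {"retrieved_correct": True, "resolved": True, "actions_attempted": 0,
     "actions_succeeded": 0, "ticket_created": False},
    {"retrieved_correct": None, "resolved": False, "actions_attempted": 1,
     "actions_succeeded": 1, "ticket_created": True},
]

print(support_metrics(sample_log))
```

Tracking these rates week over week, rather than as one-off numbers, is what surfaces drift after a policy or integration change.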

7. Do I need to train or fine-tune a model?

Not usually. Modern chatbots rely on retrieval and deterministic workflows rather than model training. Connecting knowledge sources, implementing clear triage rules, and defining workflow logic deliver more accuracy than fine-tuning. Enterprises refine content, not models.
